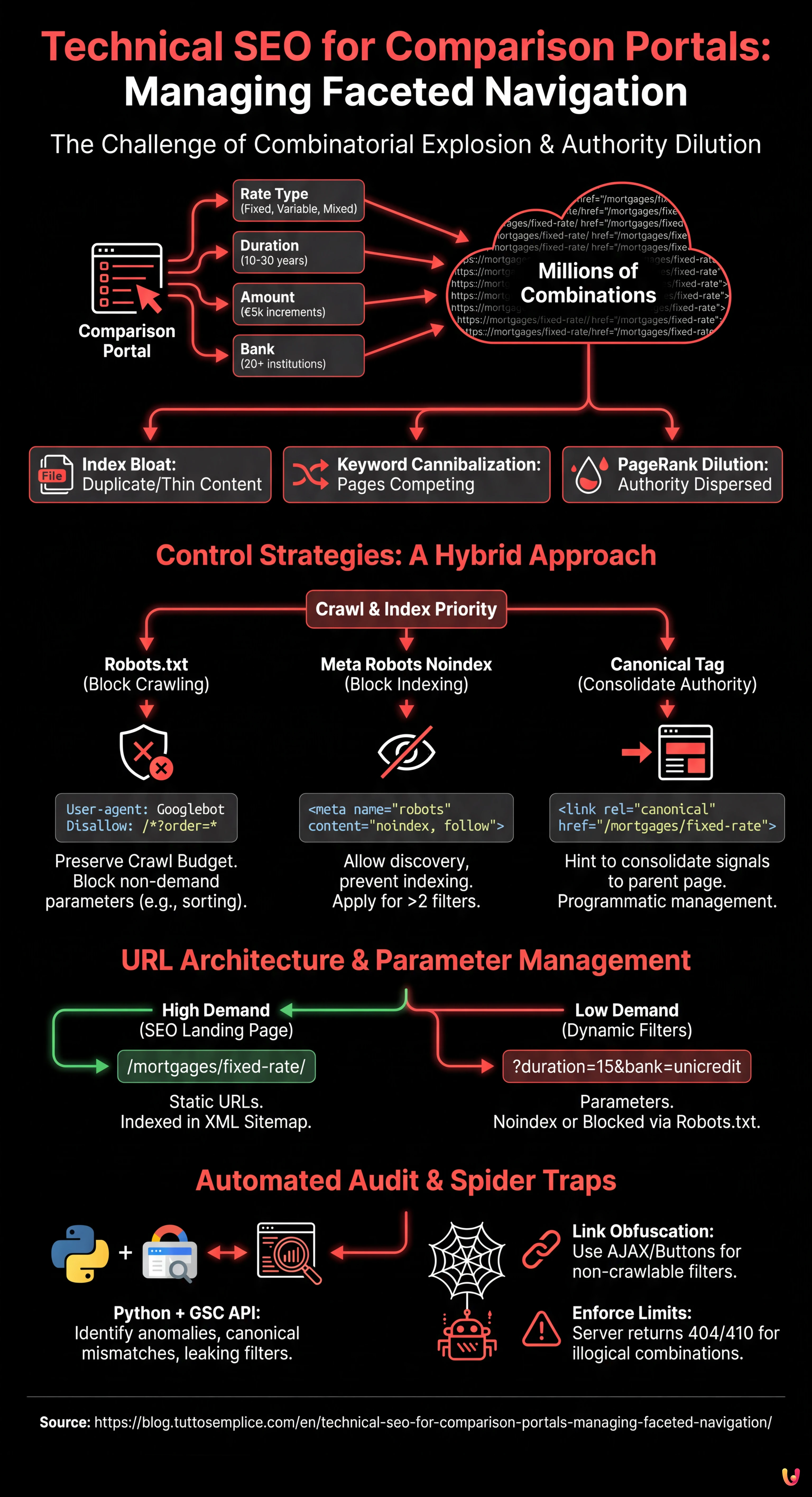

In the digital landscape of 2026, artificial intelligence and Large Language Models (LLMs) increasingly influence how search engines process information. Even so, information architecture remains the fundamental pillar of high-traffic sites. For large aggregators, the main technical SEO challenge is managing faceted navigation. This technical guide is designed for CTOs, SEO Managers, and developers working on Fintech portals (such as the MutuiperlaCasa.com case study) or real estate sites, where millions of filter combinations can become the worst nightmare for Crawl Budget.

1. The Mathematical Problem: Combinatorics and Authority Dilution

Faceted navigation allows users to filter results based on multiple attributes. On a mortgage portal, a user might select:

- Rate Type: Fixed, Variable, Mixed.

- Duration: 10, 15, 20, 25, 30 years.

- Amount: Increments of €5,000.

- Bank: 20+ institutions.

Mathematically, this generates a combinatorial explosion. If Googlebot attempted to crawl every possible URL permutation generated by these filters, the Crawl Budget would be exhausted on low-value pages (e.g., “Mixed rate mortgage, 15 years, Bank X, amount €125,000”), leaving high-conversion core pages uncrawled or out of the index. This phenomenon leads to:

- Index Bloat: Google’s index fills up with duplicate pages or “thin content”.

- Keyword Cannibalization: Thousands of pages compete for the same queries.

- PageRank Dilution: Link equity is dispersed over useless URLs.
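To put rough numbers on the combinatorial explosion described above, here is a small calculation. It is a sketch under stated assumptions: the article does not give an amount range, so €50,000 to €500,000 is assumed, and the bank count uses the lower bound of "20+". The second figure counts every order in which the same parameters can appear in the query string. The third treats the bank filter as multi-select.

```python
from itertools import combinations
from math import factorial, prod

# Facet sizes from the list above; the €50,000 to €500,000 amount range is an assumption
FACETS = {
    "rate_type": 3,                             # Fixed, Variable, Mixed
    "duration": 5,                              # 10, 15, 20, 25, 30 years
    "amount": (500_000 - 50_000) // 5_000 + 1,  # €5,000 increments -> 91 values
    "bank": 20,                                 # lower bound of "20+ institutions"
}

# Single-select facets: each facet is either unset or set to exactly one value
single_select = prod(n + 1 for n in FACETS.values())

# Same selections, but counting each order in which the chosen parameters can
# appear in the query string (?rate=...&bank=... vs ?bank=...&rate=...)
with_param_order = sum(
    factorial(k) * prod(subset)
    for k in range(len(FACETS) + 1)
    for subset in combinations(FACETS.values(), k)
)

# Multi-select bank filter: any subset of the 20 banks (empty subset = unset)
others = prod(n + 1 for name, n in FACETS.items() if name != "bank")
multi_select_banks = others * 2 ** FACETS["bank"]

print(f"{single_select:,} / {with_param_order:,} / {multi_select_banks:,}")
```

With these assumptions the script prints `46,368 / 758,116 / 2,315,255,808`. That is before sort orders, session IDs, or tracking parameters are added, and each of those multiplies the total again.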

2. Control Strategies: Robots.txt, Noindex, and Canonical

There is no single solution. Correct management requires a hybrid approach based on crawling and indexing priority.

A. The Robots.txt File: The First Line of Defense

For comparison portals, robots.txt is essential for preserving crawl resources. It is necessary to block parameters that do not generate search demand or that create duplicate content.

Practical example: on a mortgage portal, sorting (price ascending/descending) changes only the order of the results, not the content, so sort parameters must be blocked.

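```text
User-agent: Googlebot
Disallow: /*?order=
Disallow: /*&order=
Disallow: /*?price_min=
Disallow: /*&price_min=
Disallow: /*?price_max=
Disallow: /*&price_max=
```

The `&` variants are needed because a rule such as `/*?order=` only matches when `order` is the first query parameter. It would miss `/mortgages?rate=fixed&order=asc`, for example.

Note: Blocking via robots.txt prevents crawling, but it does not necessarily remove pages from the index, especially if they are linked externally. However, it is the most effective method for saving Crawl Budget.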
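Robots.txt rules are matched from the beginning of the path. This means a rule such as `/*?order=` only catches `order` when it is the first query parameter. A small matcher for Google-style wildcards (`*` and a trailing `$`) lets you test rules against sample URLs before deploying them. This is a minimal sketch and a simplification. It ignores `Allow` rules and their longest-match precedence, and it ignores percent-encoding. The function names are hypothetical.

```python
import re
from urllib.parse import urlsplit


def rule_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern (Google syntax: * wildcard, trailing $) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(chunk) for chunk in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(url: str, disallow_rules: list[str]) -> bool:
    """True if any Disallow rule matches the URL's path + query (Allow rules are ignored)."""
    parts = urlsplit(url)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    # re.match anchors at the start, like robots.txt matching from the beginning of the path
    return any(rule_to_regex(rule).match(target) for rule in disallow_rules)
```

For example, `is_disallowed("https://www.mutuiperlacasa.com/mortgages?rate=variable&order=asc", ["/*?order="])` returns `False`. Adding `"/*&order="` to the list makes it return `True`.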

B. Meta Robots “noindex, follow”

For filter combinations that we want Googlebot to discover (to follow links to products) but not index, we use the noindex tag.

Golden Rule: Apply noindex when the user applies more than 2 filters simultaneously. A “Fixed Rate Mortgages” page has SEO value. A “Fixed Rate Mortgages + 20 Years + Intesa Sanpaolo” page is likely too granular and should be excluded from the index.
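The "more than 2 filters" rule can be applied programmatically when a listing page is rendered. The following is a minimal sketch that assumes hypothetical filter parameter names (`rate`, `duration`, `amount`, `bank`). Sort and session parameters are deliberately not counted as filters.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical facet parameter names; sort/session parameters are not counted as filters
FILTER_PARAMS = {"rate", "duration", "amount", "bank"}
MAX_INDEXABLE_FILTERS = 2  # the "more than 2 filters" rule from the text


def meta_robots_for(url: str) -> str:
    """Return the meta robots content for a listing URL based on its active filters."""
    params = parse_qs(urlsplit(url).query)
    active_filters = [name for name in params if name in FILTER_PARAMS]
    if len(active_filters) > MAX_INDEXABLE_FILTERS:
        return "noindex, follow"
    return "index, follow"
```

The returned value would be emitted as `<meta name="robots" content="...">` in the page head. For example, `?rate=fixed&duration=20&bank=intesa-sanpaolo` yields `noindex, follow`.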

C. Programmatic Canonical Tag

The canonical tag is a hint, not a directive. In comparison portals, it must be managed programmatically to consolidate authority towards the “parent” page.

If a user lands on /mortgages/fixed-rate?session_id=123, the canonical must point strictly to /mortgages/fixed-rate. However, Google may ignore canonicals that point between substantially different pages, such as a filtered page canonicalized to the general category. In that case the content differs too much to be treated as a duplicate.
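Programmatic canonicals usually come down to normalizing the requested URL. You drop parameters that never change the content and keep the rest in a stable order. This is a minimal sketch: the `IGNORED_PARAMS` set is a hypothetical example, and sorting the remaining parameters alphabetically is an assumed convention rather than a requirement.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change the page content
IGNORED_PARAMS = {"session_id", "order", "utm_source", "utm_medium", "utm_campaign"}


def canonical_url(url: str) -> str:
    """Strip content-neutral parameters and sort the rest so equivalent URLs converge."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    # urlunsplit omits the "?" entirely when the encoded query is empty
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
```

With this sketch, `/mortgages/fixed-rate?session_id=123` resolves to `/mortgages/fixed-rate`. A filtered URL keeps its own (normalized) canonical instead of pointing to the general category.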

3. URL Architecture and Parameter Management

According to Google Search Central best practices, using standard parameters (?key=value) is often preferable for faceted navigation compared to simulated static URLs (/value1/value2), because it allows Google to better understand the dynamic structure.

The Logic of MutuiperlaCasa.com

In our operational scenario, we implemented a Selective URL Rewriting logic:

- SEO Landing Page (High Demand): We transform parameters into static URLs. Ex: `?type=fixed` becomes `/mortgages/fixed-rate/`. These pages are present in the XML Sitemap and are indexable.

- Dynamic Filters (Low Demand): Remain as parameters. Ex: `?duration=15&bank=unicredit`. These pages carry `noindex` or are blocked via robots.txt, depending on volume.
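The selective rewriting logic above can be expressed as a lookup table consulted whenever the site builds a filter link. This is a minimal sketch. Only the `?type=fixed` to `/mortgages/fixed-rate/` mapping comes from the article. The variable-rate entry and the function names are hypothetical.

```python
from urllib.parse import urlencode

# High-demand combinations mapped to static landing pages.
# Only ?type=fixed -> /mortgages/fixed-rate/ comes from the article; the rest is illustrative.
STATIC_LANDINGS = {
    frozenset({("type", "fixed")}): "/mortgages/fixed-rate/",
    frozenset({("type", "variable")}): "/mortgages/variable-rate/",
}


def listing_url(filters: dict[str, str]) -> str:
    """Return the public URL for a filter selection: static if high-demand, else parameters."""
    key = frozenset(filters.items())
    if key in STATIC_LANDINGS:
        return STATIC_LANDINGS[key]
    if not filters:
        return "/mortgages"
    # Low-demand combinations stay as sorted ?key=value parameters
    return "/mortgages?" + urlencode(sorted(filters.items()))


def sitemap_paths() -> list[str]:
    """Only the static landing pages are listed in the XML Sitemap."""
    return sorted(STATIC_LANDINGS.values())
```

Keeping the mapping in one place means internal links, the sitemap, and canonical tags all derive from the same source of truth.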

4. Automated Audit with Python and GSC API

Manually managing millions of URLs is impossible. In 2026, using Python to query the Google Search Console APIs is standard practice for Technical SEOs. Below, we present a script that inspects a sample of filtered URLs to detect filter parameters that slip into the index and canonicals that Google overrides.

Prerequisites

- Google Cloud Platform account with Search Console API enabled.

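- Python Libraries: pandas, google-auth, google-api-python-client.

The Analysis Script

This script extracts the coverage status of filtered URLs to identify anomalies (e.g., parameters that should be blocked but are being indexed). It calls the URL Inspection API through the Google API Python client. Authentication uses a service account, which must be added as a user of the Search Console property.

```python
# Requires: pip install pandas google-auth google-api-python-client
import pandas as pd
from google.oauth2 import service_account
from googleapiclient.discovery import build

# Authentication (replace with your own credentials)
SCOPES = ["https://www.googleapis.com/auth/webmasters.readonly"]
credentials = service_account.Credentials.from_service_account_file(
    "service_account.json", scopes=SCOPES
)
service = build("searchconsole", "v1", credentials=credentials)
SITE_URL = "https://www.mutuiperlacasa.com/"

# 1. Data Extraction (URL Inspection API)
# Note: the API has quota limits, use sparingly or on samples
urls_to_check = [
    "https://www.mutuiperlacasa.com/mortgages?rate=fixed&duration=30",
    "https://www.mutuiperlacasa.com/mortgages?rate=variable&order=asc",
    # ... list of suspicious URLs generated from server logs
]

COLUMNS = ["url", "verdict", "coverage_state", "robots_txt_state",
           "indexing_state", "user_canonical", "google_canonical"]
results = []
for url in urls_to_check:
    try:
        response = service.urlInspection().index().inspect(
            body={"inspectionUrl": url, "siteUrl": SITE_URL}
        ).execute()
        status = response["inspectionResult"]["indexStatusResult"]
        results.append({
            "url": url,
            "verdict": status.get("verdict"),                  # PASS, FAIL, NEUTRAL, ...
            "coverage_state": status.get("coverageState"),     # e.g. "Submitted and indexed"
            "robots_txt_state": status.get("robotsTxtState"),  # ALLOWED / DISALLOWED
            "indexing_state": status.get("indexingState"),     # e.g. BLOCKED_BY_META_TAG
            "user_canonical": status.get("userCanonical"),
            "google_canonical": status.get("googleCanonical"),
        })
    except Exception as e:
        print(f"Error on {url}: {e}")

# 2. Data Analysis with Pandas
df = pd.DataFrame(results, columns=COLUMNS)

# Identify URLs where Google chose a different canonical than the declared one
canonical_mismatch = df[
    df["user_canonical"].notna() & (df["user_canonical"] != df["google_canonical"])
]
print("Canonical Mismatch Found:")
print(canonical_mismatch)

# Identify indexed URLs that should be blocked. coverageState is a human-readable
# label, so matching "indexed" (but not "not indexed") is a heuristic.
coverage = df["coverage_state"].fillna("").str.lower()
is_indexed = (df["verdict"] == "PASS") | (
    coverage.str.contains("indexed") & ~coverage.str.contains("not indexed")
)
leaking_filters = df[df["url"].str.contains("order=") & is_indexed]
print("Filters 'order' indexed by mistake:")
print(leaking_filters)
```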

Interpreting the Results

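Suppose the script detects that URLs containing order=asc are indexed. This most likely means the robots.txt rules were added after those URLs had already been indexed, because robots.txt blocks crawling but does not remove existing URLs from the index. Alternatively, a large number of internal links may point to these resources. In either case, the corrective action is to temporarily lift the robots.txt block and serve a noindex tag. This lets Googlebot recrawl the URLs and drop them from the index. Only then should you restore the block.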
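A temporary noindex only works if Googlebot can actually see it. It is therefore worth confirming that the directive is served, either in the HTML or in an `X-Robots-Tag` HTTP header. This is a minimal sketch using Python's standard `html.parser`. The class and function names are hypothetical, and the header check ignores user-agent-prefixed values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            content = attributes.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, x_robots_tag: str = "") -> bool:
    """True if noindex is served via a meta robots tag or the X-Robots-Tag header value."""
    parser = MetaRobotsParser()
    parser.feed(html)
    header_directives = [d.strip().lower() for d in x_robots_tag.split(",")]
    return "noindex" in parser.directives or "noindex" in header_directives
```

Run it on the HTML and headers fetched for each leaking URL before re-enabling the robots.txt block.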

5. Managing Spider Traps and Infinite Loops

One of the biggest risks in comparison portals is the generation of infinite calendars or price filters (e.g., /price/100-200, /price/101-201). To solve this problem:

- Link Obfuscation: Use techniques such as loading filters via AJAX or `<button>` elements (instead of `<a href>`) for filters that should not be followed by bots. Although Googlebot can render JavaScript, it discovers links through `<a>` elements with an `href` attribute and does not click buttons, so these controls do not create crawlable URLs.

- Enforce Limits: Server-side, if a URL contains invalid parameters or illogical combinations, it must return a `404` or `410` status code, not a blank page with a `200` status (Soft 404).
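The "Enforce Limits" rule can be sketched as a validation step that runs before the listing is rendered. This is a minimal sketch under several assumptions. The whitelist of values is hypothetical and truncated. Price bounds are expressed as the `price_min`/`price_max` query parameters from the robots.txt example, and they must be multiples of the €5,000 step, which rejects arbitrary ranges such as 101-201.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical whitelist for the mortgage listing; extend with the real facet values
ALLOWED_VALUES = {
    "rate": {"fixed", "variable", "mixed"},
    "duration": {"10", "15", "20", "25", "30"},
    "bank": {"intesa-sanpaolo", "unicredit"},
}
IGNORED_PARAMS = {"order", "session_id"}  # accepted but not validated here
AMOUNT_STEP = 5_000  # price bounds must be multiples of €5,000


def status_for(url: str) -> int:
    """Return 200 for a valid filter combination, 404 for invalid or illogical ones."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    for name, values in params.items():
        if len(values) != 1:
            return 404  # repeated parameter, e.g. ?rate=fixed&rate=fixed
        value = values[0]
        if name in ALLOWED_VALUES:
            if value not in ALLOWED_VALUES[name]:
                return 404
        elif name in ("price_min", "price_max"):
            if not value.isdigit() or int(value) % AMOUNT_STEP != 0:
                return 404  # arbitrary bounds such as 101-201
        elif name not in IGNORED_PARAMS:
            return 404  # unknown parameter
    if "price_min" in params and "price_max" in params:
        if int(params["price_min"][0]) >= int(params["price_max"][0]):
            return 404  # empty or inverted range
    return 200
```

Whether to answer `404` or `410` is a policy choice. The important part is that invalid combinations never return `200` with an empty result list.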

Conclusions and Operational Checklist

Managing faceted navigation for comparison portals is not a “set and forget” activity. It requires constant monitoring. Here is the definitive checklist for 2026:

- Mapping: List all URL parameters generated by the CMS.

- Prioritization: Decide which combinations have search volume (Index) and which do not (Noindex/Block).

- Implementation: Configure `robots.txt` for budget saving and `noindex` for index cleanup.

- Automation: Run monthly Python scripts to verify that Google respects directives.

- Log Analysis: Analyze server logs to see where Googlebot spends its time. If 40% of hits are on `?order=` pages, you have a budget problem.
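The log check in the last checklist item can be sketched in a few lines. The sketch computes, for each query parameter, the share of Googlebot hits whose URL carries it. It assumes access logs in the Combined Log Format. It also trusts the user-agent string, so in production you should verify genuine Googlebot traffic (for example via reverse DNS) before drawing conclusions. The function name is hypothetical.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Combined Log Format: request line in the first quoted field, user agent in the last one
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} \S+ "[^"]*" "(?P<ua>[^"]*)"'
)


def googlebot_param_share(lines) -> dict[str, float]:
    """Fraction of Googlebot hits whose URL carries each query parameter."""
    hits = 0
    per_param = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # Trusts the user-agent string; spoofed bots are counted too
        if not match or "Googlebot" not in match["ua"]:
            continue
        hits += 1
        query = urlsplit(match["path"]).query
        # Each parameter is counted at most once per hit
        per_param.update(parse_qs(query, keep_blank_values=True).keys())
    return {param: count / hits for param, count in per_param.items()} if hits else {}
```

For example, you could feed it the lines of a hypothetical `access.log` file and flag a problem when `share.get("order", 0) >= 0.4`, matching the 40% threshold above.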

By adopting these engineering strategies, complex portals like MutuiperlaCasa.com can dominate the SERPs, ensuring that every Googlebot crawl translates into real business value.

Frequently Asked Questions

Faceted navigation generates an exponential number of URL combinations, often leading to Crawl Budget exhaustion and the phenomenon of Index Bloat. This prevents Google from crawling important high-conversion pages, dilutes PageRank across useless resources, and creates keyword cannibalization among thousands of similar pages.

Optimal management requires a hybrid approach: the robots.txt file must block parameters that do not generate search demand, such as sorting by price, to save crawl resources. The meta tag noindex, on the other hand, should be applied to pages we want bots to discover to follow links, but which are too granular to be indexed, such as those with more than two active filters.

It depends on search volume. The best strategy is Selective URL Rewriting: high-demand combinations must be transformed into static URLs and included in the Sitemap to maximize ranking. Low-demand filters should remain as standard parameters (?key=value) and be managed with noindex or blocked to avoid dispersing authority.

To prevent Googlebot from getting trapped in infinite loops, such as unlimited price filters or calendars, it is crucial to use link obfuscation. It is recommended to load these filters via AJAX or use button elements instead of classic a href tags. Additionally, the server must return 404 or 410 status codes for illogical parameter combinations.

To manage large volumes of URLs, it is necessary to use Python scripts that query the Google Search Console APIs. This allows for programmatically extracting coverage status, identifying discrepancies between the declared canonical and the one chosen by Google, and detecting parameters that are indexed by mistake despite blocking rules.

Did you find this article helpful? Is there another topic you'd like to see me cover?

Write it in the comments below! I take inspiration directly from your suggestions.