It begins with a feeling of uncanny convenience. You open your music application, and the playlist is exactly what you wanted to hear, even if you hadn’t realized it yet. You open your news feed, and every headline resonates with your current worldview. You type the beginning of an email, and the software finishes your sentence with better phrasing than you could have mustered. In 2026, Artificial Intelligence has achieved a level of predictive capability that feels less like computation and more like telepathy. We have spent decades teaching machines to understand us, to anticipate our needs, and to remove the friction from our daily lives. But in this pursuit of frictionless perfection, we have inadvertently engineered a subtle but profound crisis. We have built a cage of our own preferences, and the lock is a mathematical concept known as optimization.

The Mathematics of Desire

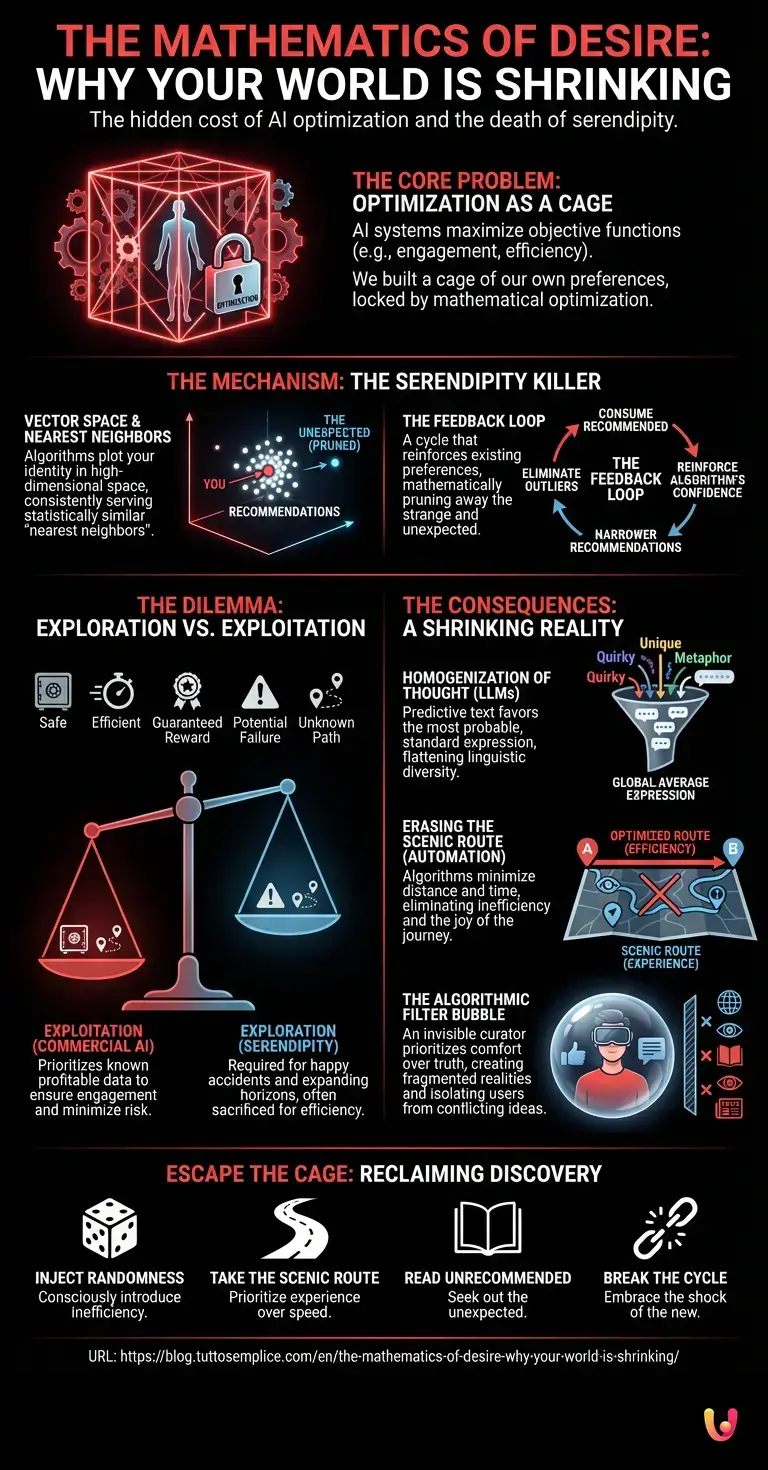

To understand why your world is shrinking, one must look under the hood of the systems governing your digital reality. Whether we are discussing machine learning recommendation engines, navigation apps, or the LLMs (Large Language Models) that power our communication, they all share a common directive: the maximization of an objective function. In simple terms, the AI is given a goal—usually defined as engagement, click-through rate, or efficiency—and it adjusts its internal parameters to achieve that goal with ruthless precision.

In the early days of the internet, discovery was a chaotic, manual process. You surfed the web, often landing on pages that were irrelevant, strange, or challenging. Today, neural networks analyze billions of data points about your past behavior and represent you as a point in a high-dimensional vector space. If you enjoy a specific genre of sci-fi novel, the algorithm plots your position in this mathematical space and looks for the nearest neighbors: items that are statistically similar to what you have already consumed.

This is where the “Serendipity Killer” begins its work. By consistently serving you the statistical nearest neighbor, the algorithm creates a feedback loop. You consume what is recommended, which reinforces the algorithm’s confidence that you only like that specific thing, which leads to even narrower recommendations. The outliers—the strange, the unexpected, the things you might have loved if only you had stumbled upon them—are mathematically pruned away because they represent a risk to the optimization metric.
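The narrowing loop described above can be sketched in a few lines. This is a toy illustration, not how any particular platform works: the catalog, the two-dimensional "taste space", and the helper names `profile`, `recommend`, and `simulate` are all hypothetical, and the user is assumed to accept every recommendation.

```python
import math

# Hypothetical catalog: each item is a point in a toy 2-D "taste space".
CATALOG = {
    "space_opera_1": (0.90, 0.10),
    "space_opera_2": (0.85, 0.15),
    "space_opera_3": (0.80, 0.20),
    "cyberpunk_1": (0.70, 0.30),
    "nature_essay": (0.10, 0.90),
    "poetry": (0.00, 1.00),
}


def profile(history):
    """User profile: the mean position of everything consumed so far."""
    xs = [CATALOG[name][0] for name in history]
    ys = [CATALOG[name][1] for name in history]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def recommend(history):
    """Return the unseen item closest to the user profile."""
    centre = profile(history)
    unseen = [name for name in CATALOG if name not in history]
    return min(unseen, key=lambda name: math.dist(centre, CATALOG[name]))


def simulate(start, steps):
    """Assume the user accepts every recommendation (a simplification)."""
    history = [start]
    for _ in range(steps):
        history.append(recommend(history))
    return history


print(simulate("space_opera_1", 3))
```

After three rounds the history is `space_opera_1`, `space_opera_2`, `space_opera_3`, `cyberpunk_1`. The essay and the poetry collection are never surfaced while anything closer remains, because each accepted recommendation pulls the profile further into the same corner of the space.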

The Exploration-Exploitation Dilemma

In the field of computer science and robotics, there is a fundamental problem known as the “Exploration-Exploitation Trade-off.” Imagine a robot programmed to find oil. Once it finds a rich drilling site, it faces a choice: should it keep drilling at this known, profitable location (exploitation), or should it move to a new, unknown area in hopes of finding an even bigger deposit (exploration)?

Exploitation is safe and efficient, and it reliably delivers a known reward. Exploration is risky, inefficient, and often leads to failure. Modern commercial AI is heavily weighted toward exploitation. Platforms like streaming services or social media networks are businesses; they cannot afford the “risk” of showing you content you might dislike, because you might close the app. Therefore, they exploit your known preferences to keep you engaged.
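One common textbook way to balance the two is an epsilon-greedy rule: with a small probability `epsilon` the system tries a random option, and otherwise it picks the option it currently rates highest. The sketch below is a simplification under stated assumptions: the two options, their payoffs, and the function name `run` are hypothetical, and rewards are deterministic so that a single trial reveals an option's value.

```python
import random


def run(epsilon, steps=1000, seed=0):
    """Count how often each option is chosen under an epsilon-greedy rule."""
    rng = random.Random(seed)
    # Hypothetical true payoffs; the unfamiliar option is actually better.
    true_reward = {"familiar": 0.6, "unfamiliar": 0.9}
    # The system starts out knowing only the familiar option.
    estimate = {"familiar": 0.6, "unfamiliar": 0.0}
    pulls = {"familiar": 0, "unfamiliar": 0}
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.choice(list(true_reward))  # explore: pick at random
        else:
            arm = max(estimate, key=estimate.get)  # exploit: best known option
        pulls[arm] += 1
        estimate[arm] = true_reward[arm]  # deterministic reward, one look is enough
    return pulls


print(run(epsilon=0.0))  # pure exploitation
print(run(epsilon=0.1))  # a little exploration
```

With `epsilon=0.0` the unfamiliar option is never tried, so the system never learns that it pays better. With even a small `epsilon` it is discovered sooner or later and becomes the new default.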

The consequence is the death of serendipity. Serendipity—the occurrence of happy, accidental discoveries—requires inefficiency. It requires taking the wrong turn, clicking the wrong link, or picking up the wrong book. In a world governed by automation designed to eliminate error, we are simultaneously eliminating the mechanism by which human beings expand their horizons. We are being insulated from the shock of the new.

The Homogenization of Thought

The impact of this optimization extends far beyond entertainment; it is reshaping how we think and communicate. With the ubiquity of LLMs in 2026, a significant portion of global text is now co-authored or generated by AI. These models work on probability: given the words so far, they assign a likelihood to every possible next word (more precisely, every token), based on patterns learned from their training data.

By design, these models gravitate toward the most probable continuation. Under typical settings they favor the standard, expected, and “correct” way of expressing an idea. When we rely on these tools to draft our emails, write our reports, or even structure our creative fiction, we are subtly converging toward a global average of expression. The quirky metaphors, the unusual syntax, and the linguistic accidents that often define great literature or innovative thinking are treated by the model as low-probability anomalies to be smoothed out.
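A toy example makes the pull toward the probable concrete. The scores below are invented for illustration and do not come from any real model; `softmax` and `greedy` are hypothetical helper names. The sketch turns raw scores into probabilities and shows how a "temperature" setting, a common decoding control, shifts weight toward or away from unusual words.

```python
import math

# Invented scores (logits) for the word that follows "The sunset was ..."
LOGITS = {"beautiful": 3.0, "stunning": 2.0, "bruised": 0.5, "arithmetic": -1.0}


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = {word: score / temperature for word, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {word: math.exp(score - peak) for word, score in scaled.items()}
    total = sum(exps.values())
    return {word: value / total for word, value in exps.items()}


def greedy(logits):
    """Always pick the single most probable word."""
    return max(logits, key=logits.get)


print(greedy(LOGITS))
for temperature in (0.5, 1.0, 2.0):
    probs = softmax(LOGITS, temperature)
    print(temperature, {word: round(p, 3) for word, p in probs.items()})
```

Greedy selection always returns "beautiful". Raising the temperature gives the odd metaphor ("bruised") a better chance, while lowering it concentrates almost all of the probability on the safest choice.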

This creates a cultural echo chamber. If everyone uses the same tools that prioritize the same probabilistic outcomes, the diversity of human thought begins to flatten. We are not just seeing what we like; we are beginning to sound like everyone else.

When Automation Erases the Scenic Route

The phenomenon is not limited to the digital screen; it has bled into the physical world through robotics and logistical automation. Consider the evolution of GPS navigation. Early navigation required a map and human judgment. You might choose a route because it looked interesting. Today, algorithms calculate the route with the shortest estimated travel time, accounting for traffic, weather, and road conditions.

This seems purely beneficial until you realize that the algorithm sends everyone down the same “optimal” paths, turning quiet neighborhoods into thoroughfares and ensuring that no one ever takes the scenic route unless they explicitly force the system to do so. The machine views the journey solely as a cost to be minimized, whereas the human might view the journey as an experience to be savored. The algorithm cannot quantify the value of a beautiful view or a roadside curiosity, so it optimizes them out of existence.
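The point about what the cost function leaves out can be shown with a small shortest-path search. Everything here is a hypothetical sketch: the road graph, the scenic scores, and the `scenic_weight` parameter are invented, and real navigation systems are far more elaborate. The search is a standard Dijkstra-style algorithm; the only thing that changes between runs is whether scenery is allowed to discount an edge's cost.

```python
import heapq

# Hypothetical road graph: neighbor -> (minutes, scenic_score)
ROADS = {
    "home": {"highway": (10, 0), "coast_road": (12, 5)},
    "highway": {"office": (10, 0)},
    "coast_road": {"office": (13, 5)},
    "office": {},
}


def best_route(start, goal, scenic_weight=0.0):
    """Dijkstra-style search; scenery discounts an edge only if scenic_weight > 0."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, (minutes, scenic) in ROADS[node].items():
            # Dividing keeps every edge cost positive, as Dijkstra requires.
            edge_cost = minutes / (1.0 + scenic_weight * scenic)
            heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return None


print(best_route("home", "office"))
print(best_route("home", "office", scenic_weight=0.1))
```

With the default weight of zero, every driver is sent via the highway, which takes 20 minutes against 25 for the coast. Only when scenery is given an explicit value does the coast road win, which is the sense in which an unmeasured quality gets optimized away.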

In manufacturing and logistics, automation ensures that supply chains are lean and perfect. However, innovation often comes from the slack in the system—the spare parts lying around, the idle time used for tinkering. By optimizing for 100% efficiency, we remove the “slack” required for experimentation. We have built a world that runs perfectly but has no room for the happy accidents that drive progress.

The Algorithmic Filter Bubble

The most dangerous aspect of the Serendipity Killer is its invisibility. You do not notice the books you aren’t recommended. You do not see the news articles that are filtered out because they don’t align with your engagement history. You are unaware of the music that might have changed your life because it was statistically too far from your current listening habits.

This creates a false sense of completeness. We believe we are seeing the world, but we are actually seeing a mirror. The neural networks are not windows; they are curators that prioritize comfort over truth and familiarity over growth. This leads to a fragmentation of reality, where different groups of people live in entirely different algorithmic realities, unable to comprehend the viewpoints of others because their respective algorithms have never exposed them to those conflicting ideas.

In Brief (TL;DR)

Advanced algorithms prioritize efficiency and engagement, creating a digital reality that reinforces existing preferences while filtering out the unexpected.

Commercial AI favors exploiting known interests over exploring new territory, effectively killing serendipity to ensure continuous user engagement.

Reliance on predictive models creates a cultural echo chamber where human expression converges toward a statistical average of thought.

Conclusion

The loss of serendipity is the hidden cost of the AI revolution. We have traded the chaos of discovery for the comfort of prediction. While Artificial Intelligence has solved the problem of information overload by filtering out the noise, we must remember that “noise” is often where life happens. To reclaim our world, we must occasionally be willing to fight the algorithm—to click the link that seems irrelevant, to take the route that is five minutes slower, and to read the book that no machine would ever think to recommend. We must consciously inject randomness back into our lives, for it is only in the unexpected that we truly find something new.

Frequently Asked Questions

Recommendation algorithms prioritize engagement by displaying content that is statistically similar to your past behavior. This creates a feedback loop in which you are exposed mostly to familiar concepts, effectively pruning away the unexpected or challenging experiences that are essential for personal growth and discovery.

The exploration-exploitation trade-off is a computer science concept describing the choice between relying on options already known to pay off, known as exploitation, and testing new, unknown options, known as exploration. Commercial platforms heavily favor exploitation to ensure user retention, which unfortunately minimizes the risk-taking required for happy accidents and new discoveries.

LLMs operate on probability, predicting the most likely next word from patterns learned across their vast training data. Consequently, they favor standard, high-probability expressions over unique metaphors or unusual syntax, causing human communication to converge toward a global average when these tools are used frequently.

Optimization engines maximize specific metrics like efficiency or clicks by analyzing user history and serving only highly relevant content. This process acts as an invisible curator that filters out conflicting viewpoints or distinct genres, isolating users in a fragmented reality where they rarely encounter ideas that challenge their existing worldview.

To counter the narrowing effect of predictive technology, it is necessary to consciously inject randomness and inefficiency into daily life. This includes actions like taking slower scenic routes, reading books that algorithms would not recommend, and actively seeking out the unexpected to break the cycle of automated comfort.
