In Brief (TL;DR)

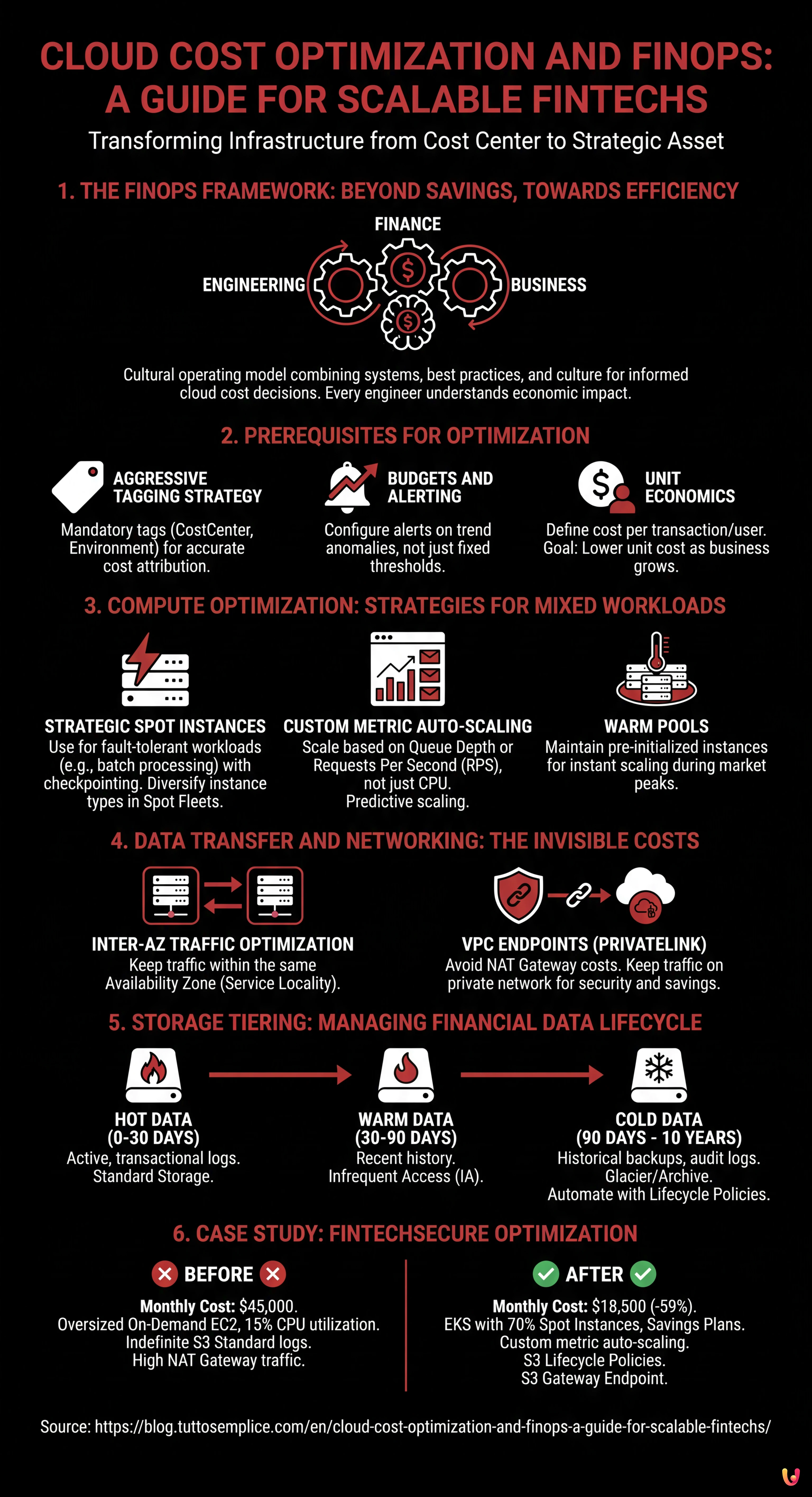

The FinOps approach transforms cloud infrastructure from a cost center to a strategic resource, focusing on the economic sustainability of individual transactions.

Using Spot instances and auto-scaling based on custom metrics can maintain high performance while substantially reducing compute expenses.

Rigorous management of data traffic and tags allows for identifying invisible costs and optimizing business margins.

In the technological landscape of 2026, for a technical entrepreneur or a CTO of a Fintech entity, infrastructure is no longer just a cost center, but a strategic asset that determines business margins. Cloud cost optimization is no longer simply about reducing the bill at the end of the month; it is a complex engineering discipline that requires a cultural approach known as FinOps. In high-load environments, where scalability must guarantee financial operational continuity (think high-frequency trading or real-time mortgage management), cutting costs indiscriminately is an unacceptable risk.

This technical guide explores how to apply advanced FinOps methodologies on platforms like AWS and Google Cloud, moving beyond the basic concept of rightsizing to embrace elastic architectures, intelligent storage tiering, and granular data traffic management.

1. The FinOps Framework: Beyond Savings, Towards Efficiency

According to the FinOps Foundation, FinOps is an operating model for the cloud that combines systems, best practices, and culture to increase an organization’s ability to understand cloud costs and make informed business decisions. In a Fintech, this means that every engineer must be aware of the economic impact of a line of code or an architectural choice.

Prerequisites for Optimization

Before implementing technical changes, a foundation of observability must be established:

- Aggressive Tagging Strategy: Every resource must have mandatory tags (e.g., CostCenter, Environment, ServiceOwner). Without this, cost attribution is impossible.

- Budgets and Alerting: Configuration of AWS Budgets or GCP Budget Alerts not only on fixed thresholds but on trend anomalies (e.g., a sudden spike in API calls).

- Unit Economics: Define the cost per transaction or per active user. The goal is not to lower the total cost if the business grows, but to lower the unit cost.
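
As a minimal sketch of the tagging and unit-economics prerequisites above, the snippet below checks a resource for the mandatory tags named in the text and computes a cost per transaction. The function names and the monthly figures are hypothetical and chosen only for illustration; in practice the tags and costs would come from the provider's billing and inventory data.

```python
from typing import Dict, List

# Mandatory tag keys taken from the example in the text.
REQUIRED_TAGS = ("CostCenter", "Environment", "ServiceOwner")


def missing_tags(resource_tags: Dict[str, str]) -> List[str]:
    """Return the mandatory tag keys that are absent or empty on a resource."""
    return [key for key in REQUIRED_TAGS if not resource_tags.get(key, "").strip()]


def unit_cost(total_cost: float, transactions: int) -> float:
    """Cost per transaction for one billing period."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return total_cost / transactions


# Hypothetical figures: the total bill grows, but the unit cost falls.
january = unit_cost(total_cost=40_000.0, transactions=2_000_000)
june = unit_cost(total_cost=50_000.0, transactions=4_000_000)
```

In this made-up example the bill rises by 25% while the cost per transaction drops, which is the outcome the unit-economics goal describes.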

2. Compute Optimization: Strategies for Mixed Workloads

The largest expense item is often related to compute resources (EC2 on AWS, Compute Engine on GCP). Here is how to optimize in high-load scenarios.

Strategic Use of Spot Instances

Spot Instances (Spot VMs on GCP, formerly Preemptible VMs) offer discounts of up to 90%, but the provider can reclaim them at short notice (about two minutes on AWS, about 30 seconds on GCP). In the Fintech sector, Spot instances are often avoided because of data criticality, but the risk is manageable when the architecture is designed to tolerate interruptions.

Application Scenario: Nightly Batch Processing.

Consider mortgage interest calculation or regulatory reporting generation that happens every night. This is a fault-tolerant workload.

- Strategy: Use fleets of Spot instances for the worker nodes that process data, keeping On-Demand instances only for orchestration (e.g., the master node of a Kubernetes or EMR cluster).

- Implementation: Configure the application to handle "checkpointing". If a Spot instance is terminated, the job must resume from the last checkpoint saved to a database or S3/GCS, without data loss.

- Diversification: Do not rely on a single instance type. Use Spot Fleets that request different instance families (e.g., m5.large, c5.large, r5.large) to minimize the risk of simultaneous unavailability.
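
The checkpointing idea above can be sketched as follows. This is an illustration under stated assumptions, not a production design: a local JSON file stands in for the database or S3/GCS object, amounts are integers (e.g., cents), and the `interrupted` callback is a hypothetical hook that simulates a Spot termination.

```python
import json
import os
import tempfile
from typing import Callable, List, Optional


def load_checkpoint(path: str) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path, "r", encoding="utf-8") as fh:
            return json.load(fh)
    return {"next_index": 0, "total": 0}


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then rename, so a kill never leaves a partial file."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as fh:
        json.dump(state, fh)
    os.replace(tmp, path)


def run_batch(items: List[int], path: str, every: int = 100,
              interrupted: Optional[Callable[[int], bool]] = None) -> dict:
    """Sum items, persisting progress every `every` items and at completion."""
    state = load_checkpoint(path)
    for i in range(state["next_index"], len(items)):
        if interrupted is not None and interrupted(i):
            break  # simulated termination: work since the last checkpoint is lost
        state["total"] += items[i]
        state["next_index"] = i + 1
        if state["next_index"] % every == 0:
            save_checkpoint(path, state)
    else:
        save_checkpoint(path, state)  # loop finished without interruption
    return state


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "interest_job.json")
    amounts = list(range(1, 251))
    # First attempt is "terminated" at item 230, after checkpoints at 100 and 200.
    run_batch(amounts, ckpt, every=100, interrupted=lambda i: i == 230)
    after_interrupt = load_checkpoint(ckpt)
    # A replacement worker resumes from item 200 and finishes the job.
    final_state = run_batch(amounts, ckpt, every=100)
```

The replacement worker redoes only the items processed after the last checkpoint, so the checkpoint interval is a trade-off between write overhead and repeated work.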

Auto-scaling Based on Custom Metrics

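Auto-scaling based on CPU alone is often inadequate for modern applications: 40% CPU usage can hide unacceptable latency for the end user, for example when requests are waiting on I/O or queued behind a downstream dependency.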

For a scalable Fintech platform, scaling policies must be aggressive and predictive:

- Queue Metrics (Queue Depth): If using Kafka or SQS/Pub/Sub, scale based on the number of messages in the queue or consumer lag. Adding servers is pointless if the queue is empty, but it is vital to add them immediately if the queue exceeds a threshold (e.g., 1,000 messages), regardless of CPU.

- Requests Per Second (RPS): For API Gateways, scale based on request throughput.

- Warm Pools: Maintain a pool of pre-initialized instances (in a stopped or hibernated state) to reduce startup times during market traffic peaks (e.g., stock market opening).
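
A queue-depth scaling rule like the one described above can be reduced to a small calculation. The sketch below is one possible formulation, assuming a hypothetical per-worker target (`msgs_per_worker`) and fleet bounds; it is not tied to any specific autoscaler API.

```python
import math


def desired_workers(queue_depth: int, msgs_per_worker: int,
                    min_workers: int, max_workers: int) -> int:
    """Workers needed so each one handles at most `msgs_per_worker` queued messages."""
    if msgs_per_worker <= 0:
        raise ValueError("msgs_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    needed = math.ceil(queue_depth / msgs_per_worker)
    # Clamp to the fleet bounds: never below the baseline, never above the cap.
    return max(min_workers, min(max_workers, needed))


# Hypothetical policy: 250 messages per worker, between 2 and 20 workers.
idle = desired_workers(0, 250, 2, 20)
busy = desired_workers(1_001, 250, 2, 20)
```

With these assumed numbers an empty queue keeps the fleet at its minimum of 2 workers, while 1,001 queued messages call for 5, independent of CPU utilization.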

3. Data Transfer and Networking: The Invisible Costs

Many companies focus on compute and ignore the cost of data movement, which can represent 20-30% of the bill in microservices architectures.

Inter-AZ and Inter-Region Traffic Optimization

In a high-availability architecture, data travels between different Availability Zones (AZ). AWS and GCP charge for this traffic.

- Service Locality: Try to keep traffic within the same AZ when possible. For example, ensure that microservice A in AZ-1 preferably calls the instance of microservice B in AZ-1.

- VPC Endpoints: Avoid going through the NAT Gateway to reach services like S3 or DynamoDB. On AWS, gateway endpoints serve S3 and DynamoDB, while interface endpoints (PrivateLink) cover other services. Both keep traffic on the provider’s private network, reducing NAT Gateway data processing costs (which are significant for high volumes) and improving security.
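
To make the NAT Gateway point concrete, the following back-of-the-envelope sketch estimates the data processing charge for traffic routed through a NAT Gateway. The per-GB rate and the monthly volume are assumptions for illustration only; actual rates vary by region and should be checked against current pricing.

```python
# Assumed illustrative rate in USD per GB processed; verify against current pricing.
ASSUMED_NAT_RATE_PER_GB = 0.045


def monthly_nat_processing_cost(gb_per_month: float,
                                rate_per_gb: float = ASSUMED_NAT_RATE_PER_GB) -> float:
    """Estimated monthly NAT Gateway data processing charge, rounded to cents."""
    if gb_per_month < 0 or rate_per_gb < 0:
        raise ValueError("volume and rate must be non-negative")
    return round(gb_per_month * rate_per_gb, 2)


# Hypothetical volume: 20 TB of backups to S3 per month.
backup_gb = 20_000.0
via_nat = monthly_nat_processing_cost(backup_gb)
# S3 gateway endpoints carry no per-GB processing charge.
via_gateway_endpoint = 0.0
avoided = via_nat - via_gateway_endpoint
```

Under these assumptions, moving the backup traffic to a gateway endpoint avoids roughly 900 USD per month in processing charges alone.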

4. Storage Tiering: Managing the Financial Data Lifecycle

Financial regulations and standards (e.g., MiFID II, PCI-DSS) require certain records to be retained for years, while GDPR limits how long personal data may be kept. Keeping everything on high-performance storage (e.g., S3 Standard) is a huge waste.

Intelligent Tiering and Lifecycle Policies

Cloud cost optimization involves automating the data lifecycle:

- Hot Data (0-30 days): Recent transactional logs, active data. Standard Storage.

- Warm Data (30-90 days): Recent history for customer service. Infrequent Access (IA).

- Cold Data (90 days – 10 years): Historical backups, audit logs. Use classes like S3 Glacier Deep Archive or GCP Archive. The cost is a fraction of standard, but retrieval time is in hours (acceptable for an audit).

- Automation: Use S3 Intelligent-Tiering to automatically move data between tiers based on real access patterns, without having to write complex scripts.
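
The hot/warm/cold schedule above could be expressed as an S3 lifecycle configuration. The sketch below only builds the configuration as a Python dictionary; the helper name, the `audit-logs/` prefix, and the 3,650-day expiration (approximating 10 years) are assumptions. A dictionary of this shape is what boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument, but the call itself is left out here.

```python
from typing import Any, Dict


def build_lifecycle_rules(prefix: str, warm_after_days: int = 30,
                          cold_after_days: int = 90,
                          expire_after_days: int = 3650) -> Dict[str, Any]:
    """Build a lifecycle configuration: Standard -> Infrequent Access -> Deep Archive."""
    if not (0 < warm_after_days < cold_after_days < expire_after_days):
        raise ValueError("expected 0 < warm < cold < expire (in days)")
    return {
        "Rules": [
            {
                "ID": f"tiering-{prefix.strip('/') or 'all'}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    # Warm tier: infrequent access after the hot period.
                    {"Days": warm_after_days, "StorageClass": "STANDARD_IA"},
                    # Cold tier: deep archive for long-term retention.
                    {"Days": cold_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
                # Delete once the assumed retention period has elapsed.
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


# Hypothetical prefix for audit logs.
config = build_lifecycle_rules("audit-logs/")
```

Explicit rules like these suit data with a predictable age profile; S3 Intelligent-Tiering, mentioned above, is the alternative when access patterns are unknown.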

5. Case Study: "FinTechSecure" Infrastructure Optimization

Let’s analyze a theoretical case based on real migration and optimization scenarios.

Initial Situation:

The startup "FinTechSecure" manages P2P payments. The infrastructure is on AWS, entirely based on oversized On-Demand EC2 instances to handle Black Friday peaks, with Multi-AZ RDS databases. Monthly cost: $45,000.

FinOps Analysis:

1. EC2 instances have an average CPU utilization of 15%.

2. Access logs are kept on S3 Standard indefinitely.

3. Data traffic through the NAT Gateway is very high due to backups to S3.

Interventions Executed:

- Compute: Migration of stateless workloads to containers (EKS) using Spot Instances for 70% of nodes and Savings Plans for the remaining 30% (base nodes). Implementation of auto-scaling based on custom metrics (number of transactions in queue).

- Storage: Activation of Lifecycle Policy to move logs to Glacier after 30 days and delete them after 7 years (compliance).

- Networking: Implementation of S3 Gateway Endpoint to eliminate NAT Gateway costs for traffic towards storage.

Final Result:

The monthly cost dropped to $18,500 (-59%), maintaining the same availability SLA (99.99%) and improving scaling speed during unforeseen peaks.
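
The headline figure can be checked with a short calculation using the two monthly costs stated in the case study. The annualized value is a simple extrapolation that assumes the monthly saving stays constant.

```python
def savings(before: int, after: int) -> tuple:
    """Return the absolute saving and the percentage reduction."""
    if before <= 0:
        raise ValueError("before must be positive")
    saved = before - after
    return saved, saved / before * 100


# Monthly costs from the case study, in USD.
monthly_saved, pct_reduction = savings(before=45_000, after=18_500)
# Extrapolation, assuming the saving holds for 12 months.
annual_saved = monthly_saved * 12
```

The reduction works out to just under 59%, consistent with the rounded figure above.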

Conclusions

Cloud cost optimization in the Fintech sector is not a one-off activity, but a continuous process. It requires balancing three variables: cost, speed, and quality (redundancy/security). Tools like Spot Instances, Lifecycle policies, and granular monitoring allow for building resilient infrastructures that scale economically along with the business. For the technical entrepreneur, mastering FinOps means freeing up financial resources to reinvest in innovation and product development.

Frequently Asked Questions

FinOps is a cultural operating model that combines engineering, finance, and business to optimize cloud spending. In Fintech companies, this approach is crucial because it transforms infrastructure from a simple cost center to a strategic asset, allowing the calculation of the economic impact of every technical choice (Unit Economics) and ensuring that technological scalability is financially sustainable even during high-load market peaks.

Although Spot Instances can be terminated with little notice, they can be used safely in the financial sector for fault-tolerant workloads, such as nightly interest calculation or reporting. The correct strategy involves implementing checkpointing to save progress, using mixed instance fleets to diversify risk, and maintaining On-Demand nodes for cluster orchestration.

Auto-scaling based solely on CPU percentage is often inadequate for modern Fintech platforms. It is preferable to adopt aggressive strategies based on custom metrics like the queue depth of messaging systems or the number of requests per second (RPS). Furthermore, using Warm Pools with pre-initialized instances helps minimize startup latency during market openings or sudden traffic events.

Networking costs can represent up to 30% of the cloud bill. To reduce them, it is necessary to optimize Service Locality by maintaining traffic between microservices within the same Availability Zone. Furthermore, it is fundamental to use VPC Endpoints (PrivateLink) to connect to managed services like storage, thus avoiding the expensive data processing charges associated with using public NAT Gateways.

To comply with regulations like GDPR or PCI-DSS without wasting budget, it is essential to implement intelligent storage tiering. Active data (Hot) remains on standard storage, while historical logs and backups (Cold) are automatically moved via Lifecycle Policies to deep archive classes like Glacier or Archive, which have extremely low costs but longer retrieval times, ideal for rare audits.
