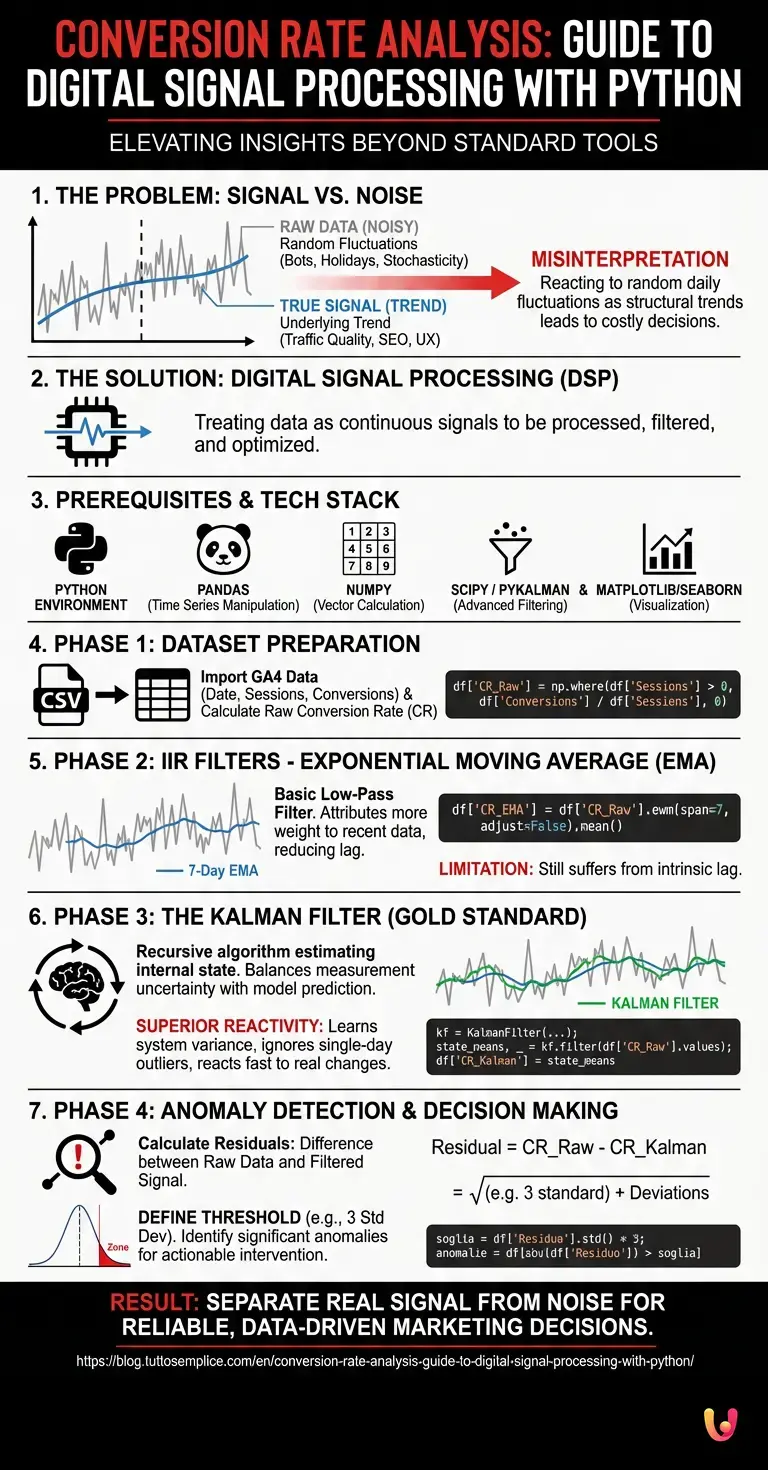

In today’s digital marketing landscape, conversion rate analysis often suffers from a fundamental problem: the misinterpretation of variance. Too often, marketers react to random daily fluctuations as if they were structural trends, which leads to hasty and costly decisions. To improve the quality of our insights, we can look beyond standard tools and borrow from electronic engineering. In this technical guide, we will explore how to treat data exported from Google Analytics 4 not as simple numbers on a spreadsheet, but as discrete-time signals to be processed, filtered, and analyzed.

The Problem: Signal vs. Noise in Marketing

When we observe a graph of conversion trends over time, what we see is the sum of two components:

- The Signal: The true underlying trend determined by traffic quality, SEO, and site UX.

- The Noise: Random variations due to bots, holidays, weather, or simple stochasticity of human behavior.
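To make the decomposition concrete, here is a minimal simulation sketch. All values are illustrative assumptions, not GA4 data: a true CR of 2% that drops to 1.5% on day 60, a constant 1,000 sessions per day, and conversions drawn from a binomial distribution, which is one common way to model the "simple stochasticity" of visitors who convert or do not. The helper `simulate_daily_cr` is hypothetical.

```python
import numpy as np

def simulate_daily_cr(n_days=120, sessions=1000, base_cr=0.02,
                      shifted_cr=0.015, shift_day=60, seed=42):
    """Hypothetical helper: observed daily CR = true signal + binomial noise."""
    rng = np.random.default_rng(seed)
    # Signal: the true conversion rate, with one structural drop at shift_day
    true_cr = np.where(np.arange(n_days) < shift_day, base_cr, shifted_cr)
    # Noise: each session converts (or not) independently with probability true_cr
    conversions = rng.binomial(sessions, true_cr)
    observed_cr = conversions / sessions
    return true_cr, observed_cr

true_cr, observed_cr = simulate_daily_cr()
noise = observed_cr - true_cr
# Binomial model: the SD of a day's observed CR is about sqrt(p * (1 - p) / n)
expected_sd = np.sqrt(0.02 * 0.98 / 1000)
print(f"expected noise SD ~ {expected_sd:.4f}, simulated SD ~ {noise[:60].std():.4f}")
```

Under these assumptions the observed CR swings by roughly 0.44 percentage points (one standard deviation) from day to day even while the true rate is perfectly flat, which is exactly the kind of fluctuation that should not trigger a reaction.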

The classic approach stops at simple averages. The advanced approach, derived from Digital Signal Processing (DSP), applies mathematical filters that attenuate the noise and reveal the underlying signal. This lets us answer the question: “Is yesterday’s drop a statistical fluctuation, or did something on the site actually break?”

Prerequisites and Tech Stack

To follow this guide, a configured Python development environment is required. We will use the following libraries, most of them de facto standards in data science:

- Pandas: For time series manipulation.

- NumPy: For vectorized numerical computation.

- SciPy / PyKalman: For implementing advanced filtering algorithms.

- Matplotlib/Seaborn: For signal visualization.

Ensure you have a CSV export of your daily data (Sessions and Conversions) from GA4.

Phase 1: Dataset Preparation

The first step is to import the data and calculate the raw conversion rate (CR). Raw data is often “dirty” and discontinuous.

```python
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

# Load data (simulation of a GA4 export)
# The CSV must have columns: 'Date', 'Sessions', 'Conversions'
df = pd.read_csv('ga4_data_export.csv', parse_dates=['Date'])
df.set_index('Date', inplace=True)
df.sort_index(inplace=True)  # the filters below assume chronological order

# Calculate Daily Conversion Rate (CR)
# Handle division by zero in case of days with no traffic (CR set to 0)
df['CR_Raw'] = np.where(df['Sessions'] > 0, df['Conversions'] / df['Sessions'], 0)

print(df.head())
```
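The loading code above assumes one row per day. An export may skip dates with no recorded activity, and the code above maps zero-traffic days to a CR of 0, which any filter will read as a real crash. A minimal sketch of one way to handle both, assuming the same 'Date', 'Sessions' and 'Conversions' columns: reindex to a continuous daily calendar and leave the CR undefined (NaN) wherever there was no traffic. The helper `to_daily_cr` is hypothetical.

```python
import numpy as np
import pandas as pd

def to_daily_cr(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper: one row per calendar day, NaN CR on days without sessions."""
    df = df.sort_index()
    full_range = pd.date_range(df.index.min(), df.index.max(), freq="D")
    # Dates missing from the export become rows with zero sessions and conversions
    df = df.reindex(full_range, fill_value=0)
    df.index.name = "Date"
    # A day without sessions says nothing about the CR: mark it NaN rather than 0
    sessions = df["Sessions"].replace(0, np.nan)
    df["CR_Raw"] = df["Conversions"] / sessions
    return df

raw = pd.DataFrame(
    {"Sessions": [1000, 0, 950], "Conversions": [20, 0, 19]},
    index=pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-04"]),
)
print(to_daily_cr(raw))
```

Downstream, pandas' `ewm(...).mean()` leaves NaN observations out of the average (its `ignore_na` parameter controls how they affect the weights), and pykalman documents that masked entries of a NumPy masked array, for example `np.ma.masked_invalid(df['CR_Raw'].values)`, are treated as missing observations, so neither filter has to be fed an invented value.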

Phase 2: Infinite Impulse Response (IIR) Filters – The Exponential Moving Average

The first level of cleaning is the application of an Exponential Moving Average (EMA). Unlike the simple moving average (SMA), which weights every day in its window equally, the EMA gives more weight to recent data, so it starts reacting sooner to a fresh change in trend. In DSP terms, it acts as a basic low-pass filter.
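Under the hood, with `adjust=False` pandas computes the EMA as a first-order recursive (IIR) filter: each output blends today's input with yesterday's output, using the smoothing factor α = 2 / (span + 1), so α = 0.25 for span = 7, and the first output equals the first input. The NumPy sketch below writes that recursion out so the filter structure is visible; `ema_recursive` is a hypothetical name.

```python
import numpy as np

def ema_recursive(x, span=7):
    """First-order IIR low-pass filter: y[t] = alpha * x[t] + (1 - alpha) * y[t-1]."""
    x = np.asarray(x, dtype=float)
    alpha = 2.0 / (span + 1.0)  # span=7 -> alpha=0.25
    y = np.empty_like(x)
    y[0] = x[0]  # same initialisation as pandas with adjust=False
    for t in range(1, len(x)):
        # Feedback from the previous output is what makes the impulse response infinite
        y[t] = alpha * x[t] + (1.0 - alpha) * y[t - 1]
    return y

print(ema_recursive([0.02, 0.02, 0.04, 0.02, 0.02]))
```

For a series without missing values this should match `ewm(span=7, adjust=False).mean()` up to floating-point rounding. Note how the one-day spike to 0.04 is cut to 0.025 and then decays geometrically rather than vanishing: that lingering tail is the "infinite" impulse response.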

```python
# Applying an EMA with a 7-day span (weekly cycle)
df['CR_EMA'] = df['CR_Raw'].ewm(span=7, adjust=False).mean()

# Visualization
plt.figure(figsize=(12, 6))
plt.plot(df.index, df['CR_Raw'], label='Raw CR (Noisy)', alpha=0.3, color='gray')
plt.plot(df.index, df['CR_EMA'], label='7-Day EMA (Clean Signal)', color='blue')
plt.title('Conversion Rate Analysis: Raw vs EMA')
plt.legend()
plt.show()
```

The EMA is useful for quick visualizations, but it still suffers from intrinsic lag. If the conversion rate crashes today, the EMA will take a few days to fully reflect the change.
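The lag can be quantified. After a permanent step in the input, an EMA with `adjust=False` has covered a fraction 1 − (1 − α)^n of the jump on the n-th day, counting the day of the step as day 1. The sketch below solves for the first day on which an illustrative 90% of the step is visible; `days_to_reflect` is a hypothetical helper.

```python
import math

def days_to_reflect(span=7, fraction=0.9):
    """Days (the day of the step counts as day 1) until an EMA with adjust=False
    has moved `fraction` of the way to a new constant level."""
    alpha = 2.0 / (span + 1.0)
    # Smallest integer n with 1 - (1 - alpha)**n >= fraction
    return math.ceil(math.log(1.0 - fraction) / math.log(1.0 - alpha))

for span in (3, 7, 14):
    print(f"span={span:>2}: {days_to_reflect(span)} days to reflect 90% of a step")
```

With span=7, a crash that happens today is only 25% visible in today's EMA value and takes about nine days to show at 90%; shorter spans react faster but let more noise through.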

Phase 3: The Kalman Filter (The Gold Standard Approach)

Here we enter the territory of advanced engineering. The Kalman Filter is a recursive algorithm that estimates the internal state of a linear dynamic system starting from a series of noisy measurements. It is the same algorithm used for missile navigation and GPS tracking.

In conversion rate analysis, the Kalman filter treats the CR not as a fixed number but as a probabilistic estimate that is updated every day, balancing the uncertainty of the measurement (today’s data) against the model’s prediction (the estimate carried forward from previous days).
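With `transition_matrices=[1]` and `observation_matrices=[1]`, the pykalman model configured below is the simplest possible one, often called a local level or random-walk model: tomorrow's true CR is today's true CR plus a small random change (variance q, the transition covariance), and each daily measurement is the true CR plus noise (variance r, the observation covariance). The sketch below writes out the resulting predict and update steps in plain NumPy, using the same initial values as that configuration. It is an illustrative re-implementation, not pykalman's own code, and `kalman_local_level` is a hypothetical name.

```python
import numpy as np

def kalman_local_level(z, q=0.001, r=0.01, x0=None, p0=1.0):
    """Scalar Kalman filter for the random-walk model:
    state:       x[t] = x[t-1] + w,  w ~ N(0, q)
    observation: z[t] = x[t] + v,    v ~ N(0, r)
    Returns filtered means, filtered variances and each day's Kalman gain."""
    z = np.asarray(z, dtype=float)
    x = z.mean() if x0 is None else x0  # prior mean for day 0 (like initial_state_mean)
    p = p0                              # prior variance for day 0 (like initial_state_covariance)
    means, variances, gains = [], [], []
    for t, obs in enumerate(z):
        if t > 0:
            # Predict: the random walk keeps the mean and adds uncertainty q
            p = p + q
        # Update: weigh today's measurement against the prediction
        k = p / (p + r)        # Kalman gain, between 0 and 1
        x = x + k * (obs - x)  # move toward the observation by a fraction k
        p = (1.0 - k) * p      # uncertainty shrinks after seeing the data
        means.append(x)
        variances.append(p)
        gains.append(k)
    return np.array(means), np.array(variances), np.array(gains)

means, variances, gains = kalman_local_level([0.020, 0.021, 0.019, 0.050, 0.020, 0.020])
print(np.round(means, 4))
print(np.round(gains, 3))
```

The gain starts close to 1 because the initial state covariance (1) dwarfs r, then falls toward a constant as the filter grows confident; the 0.050 spike on the fourth day therefore moves the estimate by only a fraction of the surprise.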

Python Implementation of the Kalman Filter

We will use the pykalman library (or a simplified custom implementation) to apply this concept.

```python
from pykalman import KalmanFilter

# Filter Configuration (random-walk model for the true CR)
# Transition Covariance: how fast we expect the true CR to change
# Observation Covariance: how much noise is in the daily data
kf = KalmanFilter(transition_matrices=[1],
                  observation_matrices=[1],
                  initial_state_mean=df['CR_Raw'].mean(),
                  initial_state_covariance=1,
                  observation_covariance=0.01,
                  transition_covariance=0.001)

# State calculation (the filtered signal)
state_means, state_covariances = kf.filter(df['CR_Raw'].values)

# pykalman returns an (n_days, 1) array: flatten it before storing it as a column
df['CR_Kalman'] = state_means.ravel()
```
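The covariances in the configuration above are placeholders: an observation variance of 0.01 corresponds to a daily standard deviation of 10 percentage points, far larger than most conversion rates. One reasonable starting point, assuming each session converts independently, is the binomial approximation: the variance of a day's observed CR is about p(1 − p)/n for a true rate p and n sessions. The hypothetical helper below derives the observation variance that way and sets the transition variance as an assumed fraction of it; that ratio is a tuning choice, not something the data dictates.

```python
import numpy as np

def suggest_covariances(sessions, conversions, q_to_r_ratio=0.1):
    """Hypothetical helper: observation variance from the binomial approximation,
    transition variance as an assumed fraction of it."""
    sessions = np.asarray(sessions, dtype=float)
    conversions = np.asarray(conversions, dtype=float)
    valid = sessions > 0  # days without traffic carry no information
    p = conversions[valid].sum() / sessions[valid].sum()  # pooled CR over the period
    # Var(conversions / n) = p * (1 - p) / n, averaged over the observed days
    r = np.mean(p * (1.0 - p) / sessions[valid])
    q = q_to_r_ratio * r  # assumption: how much the true CR may drift per day
    return q, r

q, r = suggest_covariances([1000, 1200, 900], [20, 25, 17])
print(f"transition_covariance={q:.2e}, observation_covariance={r:.2e}")
```

The two values can be passed to `KalmanFilter` as `transition_covariance` and `observation_covariance`. Because the binomial variance shrinks as traffic grows, sites with very uneven daily traffic may want a per-day observation variance instead of a single average.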

Why prefer Kalman over the Moving Average?

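Observing the results, you should see the Kalman line (CR_Kalman) follow genuine level shifts while damping single-day outliers (e.g., a bot attack that inflates sessions and lowers the CR). How strongly it does each is set explicitly by the two covariances: the larger the transition covariance relative to the observation covariance, the more reactive the filter. Note that with fixed covariances the gain soon settles to a constant, so in the long run the filter behaves much like a well-tuned EMA. Its practical advantages are the explicit noise model, the per-day uncertainty it reports (state_covariances), and the option to estimate the covariances from the data itself with pykalman’s kf.em() method; only in that last case does the filter genuinely “learn” the system’s variance.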
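The link between the two filters can be checked numerically. With fixed covariances q and r, the gain of the filter configured above (a random walk observed with noise) converges to a constant K, obtained by iterating its variance equations; from then on each update x ← x + K(z − x) is exactly an EMA step with α = K. The sketch below computes that limit; `steady_state_gain` is a hypothetical helper.

```python
def steady_state_gain(q, r, p0=1.0, tol=1e-12, max_iter=10_000):
    """Iterate the scalar predict/update variance recursion until the gain stops changing."""
    p_post = p0
    k_prev = None
    for _ in range(max_iter):
        p_prior = p_post + q          # predict: add the transition variance
        k = p_prior / (p_prior + r)   # Kalman gain for this step
        p_post = (1.0 - k) * p_prior  # update: variance after the measurement
        if k_prev is not None and abs(k - k_prev) < tol:
            break
        k_prev = k
    return k

k = steady_state_gain(q=0.001, r=0.01)
equivalent_span = 2.0 / k - 1.0  # invert alpha = 2 / (span + 1)
print(f"steady-state gain ~ {k:.3f}, i.e. an EMA with span ~ {equivalent_span:.1f}")
```

With the article's settings (transition covariance 0.001, observation covariance 0.01) the gain settles around 0.27, the equivalent of an EMA with a span of about 6.4, close to the 7-day EMA of Phase 2. The limit depends only on the ratio q/r: raising q makes the filter more reactive, raising r makes it smoother.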

Phase 4: Anomaly Detection and Decision Making

Now that we have a clean signal, we can calculate the residuals, i.e. the difference between the raw data and the filtered signal. Residuals are the foundation of automatic alerting.

```python
# Calculate residuals (Residuo = residual, soglia = threshold, anomalie = anomalies)
df['Residuo'] = df['CR_Raw'] - df['CR_Kalman']

# Define anomaly threshold (e.g., 3 standard deviations)
soglia = df['Residuo'].std() * 3

# Identify anomalous days
anomalie = df[df['Residuo'].abs() > soglia]

print(f"Days with significant anomalies: {len(anomalie)}")
```

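If a residual exceeds the threshold, the day is unlikely to be explained by normal statistical noise: for roughly normal residuals, a 3-standard-deviation band is crossed by chance on about 0.3% of days, i.e. about once a year. Only in this case should the SEO Specialist or Marketing Manager intervene.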
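One caveat with the threshold above: the standard deviation is computed on residuals that include the anomalies themselves, so large outliers inflate `soglia` and can mask each other. A common robust alternative, swapped in here rather than taken from the method above, is the modified z-score based on the median absolute deviation (MAD), which a single extreme day barely moves. The helper name is hypothetical, and the cut-off of 3.5 is a commonly used value for this score, to be tuned on your own data.

```python
import numpy as np

def robust_anomalies(residuals, threshold=3.5):
    """Flag residuals whose modified z-score (based on the MAD) exceeds `threshold`."""
    r = np.asarray(residuals, dtype=float)
    median = np.median(r)
    mad = np.median(np.abs(r - median))
    if mad == 0:
        # Degenerate case: at least half of the residuals are identical
        return np.zeros(r.shape, dtype=bool)
    # 0.6745 rescales the MAD so the score is comparable to a z-score on normal data
    modified_z = 0.6745 * (r - median) / mad
    return np.abs(modified_z) > threshold

residuals = np.array([0.001, -0.002, 0.0015, -0.001, 0.0005, -0.03, 0.002, -0.0015])
print(robust_anomalies(residuals))
```

On this toy series the -0.03 day gets a modified z-score of about -13 and is flagged, whereas the standard-deviation rule above would miss it: three sample standard deviations of these eight values come to about 0.032.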

Conclusions and SEO Applications

Applying signal theory to conversion rate analysis transforms the way we interpret web data. Instead of chasing ghosts or reacting to the panic of a single negative day, we obtain:

- Clarity: Visualization of the true growth trend stripped of day-to-day noise.

- Automation: Alert systems based on measured standard deviations, not feelings.

- Attribution: Ability to correlate On-Page changes (e.g., Core Web Vitals update) with structural signal variations.

Integrating Python scripts of this type into corporate dashboards or Looker Studio reports (via BigQuery) represents the future of Web Analytics: fewer opinions, more math.

Frequently Asked Questions

The classic approach to data analysis often suffers from a misinterpretation of variance, confusing random daily fluctuations with structural trends. By applying mathematical filters derived from electronic engineering to GA4 data, it is possible to separate the real signal, determined by SEO and UX, from background noise caused by bots or stochasticity, obtaining more reliable insights.

The Kalman Filter is a recursive algorithm that estimates the system state by balancing the uncertainty of today’s measurement against the prediction carried forward from previous days. Compared with a moving average, it makes the trade-off between reactivity to real trend changes and robustness to single-day outliers explicit through its noise parameters, and it reports how uncertain each daily estimate is.

To identify a significant anomaly, residuals are calculated: the difference between the raw data and the signal filtered by the algorithm. If this value exceeds a predefined statistical threshold, usually three standard deviations, the day is likely an anomalous event requiring technical or strategic intervention, while ordinary fluctuations only rarely trigger a false alarm.

To replicate the described tech stack, Pandas is essential for time series manipulation and NumPy for vector numerical calculation. For the advanced algorithmic part, SciPy or PyKalman are used for filters, while Matplotlib and Seaborn are fundamental for graphically visualizing the distinction between the clean signal and noisy raw data.

The Exponential Moving Average, or EMA, differs from the simple average because it assigns greater weight to more recent data. This mechanism acts as a low-pass filter that starts responding sooner to a change in trend, allowing marketers to react faster to structural changes without being misled by daily volatility.


Did you find this article helpful? Is there another topic you’d like to see me cover?

Write it in the comments below! I take inspiration directly from your suggestions.