In Brief (TL;DR)

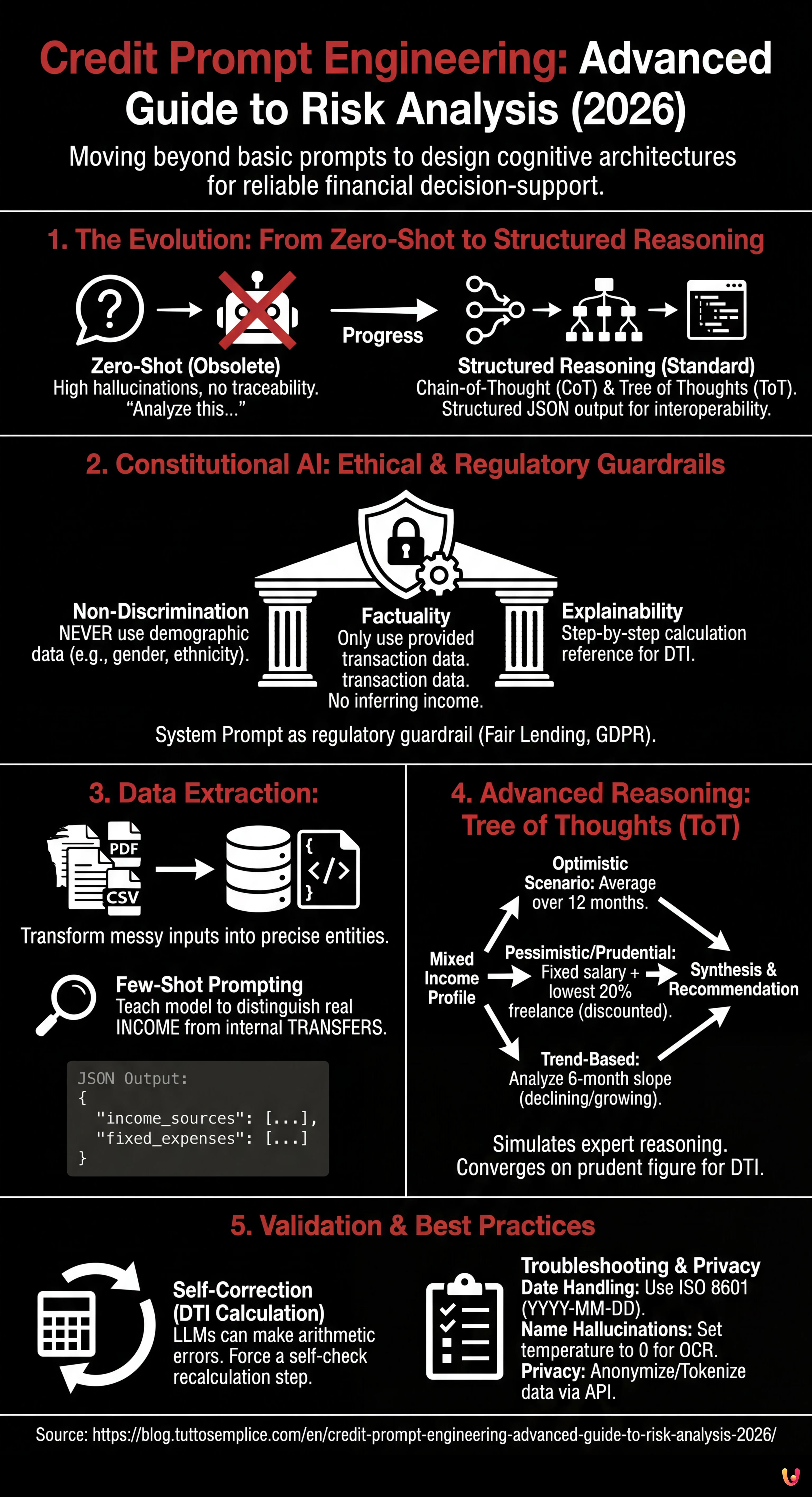

The evolution of financial prompt engineering requires complex cognitive architectures to transform automation into reliable, traceable decision support.

The adoption of Constitutional AI defines rigorous ethical and regulatory perimeters in the System Prompt to ensure impartiality and rule compliance.

Advanced strategies like the Tree of Thoughts allow for structuring ambiguous financial data and calculating weighted and prudent risk scenarios.

In the 2026 fintech landscape, the integration of Large Language Models (LLMs) into financial workflows is now a consolidated standard. However, the difference between mediocre automation and a reliable decision-support tool lies entirely in the quality of the instructions provided. **Credit prompt engineering** is no longer just about querying a model, but about designing cognitive architectures capable of handling the intrinsic ambiguity of financial data.

This technical guide is intended for Data Scientists, Credit Risk Analysts, and Fintech developers who need to move beyond basic prompts to implement complex reasoning logic (Reasoning Models) in creditworthiness assessment.

1. The Evolution: From Zero-Shot to Structured Reasoning

Until a few years ago, the standard approach was to ask the model: “Analyze this bank statement and tell me if the client is solvent”. This Zero-Shot approach is considered negligent in banking today due to the high rate of hallucinations and the lack of reasoning traceability.

For robust risk analysis, we must adopt advanced techniques like **Chain-of-Thought (CoT)** and **Tree of Thoughts (ToT)**, encapsulated in structured outputs (JSON) to ensure interoperability with legacy banking systems.
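As a minimal sketch of what "structured output" means on the receiving side, the snippet below parses a model reply and rejects anything that is not a JSON object with the expected fields, so malformed replies never reach a downstream system. The field names and the `parse_model_reply` helper are illustrative assumptions, not part of any specific banking API.

```python
import json

# Hypothetical schema for illustration: field name -> accepted Python type(s).
REQUIRED_FIELDS = {
    "accepted_monthly_income": (int, float),
    "reasoning_summary": str,
}


def parse_model_reply(reply: str, required: dict = REQUIRED_FIELDS) -> dict:
    """Parse a model reply as JSON and check required fields and types.

    Raises ValueError so the caller can retry or escalate to a human.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for name, expected in required.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        value = data[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise ValueError(f"wrong type for field: {name}")
    return data
```

Failing loudly here is a deliberate choice: a rejected reply can be retried or routed to an analyst, whereas a silently accepted malformed one cannot.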

2. Constitutional AI: The Ethical and Regulatory System Prompt

Before analyzing the numbers, it is imperative to establish the ethical perimeter. In the spirit of **Constitutional AI** (an approach introduced by Anthropic in which a model is guided by an explicit set of written principles), the System Prompt must act as a regulatory guardrail. It is not enough to say “don’t be racist”; the relevant regulations (e.g., Fair Lending, GDPR) must be encoded as explicit constraints.
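A prompt-level rule can be complemented by removing protected attributes before the prompt is built, so the model never sees them in structured fields. The sketch below is one possible way to do this; the field names, the `role`/`content` message layout, and both helper functions are assumptions for illustration, and free-text fields (such as OCR output) would still need separate redaction.

```python
import json

# Hypothetical field names; adapt to the actual applicant record schema.
PROTECTED_FIELDS = {"name", "gender", "ethnicity", "zip_code"}


def strip_protected_fields(record: dict) -> dict:
    """Return a copy of the record without protected demographic fields."""
    return {k: v for k, v in record.items() if k.lower() not in PROTECTED_FIELDS}


def build_messages(system_prompt: str, record: dict) -> list:
    """Assemble a chat-style message list from a system prompt and a redacted record."""
    payload = json.dumps(strip_protected_fields(record), ensure_ascii=False)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": payload},
    ]
```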

Compliance System Prompt Template

You are a Senior Credit Risk Analyst AI, acting as an impartial assistant to a human underwriter.

CORE CONSTITUTION:

1. **Non-Discrimination:** You must NEVER use demographic data (name, gender, ethnicity, zip code) as a factor in risk assessment, even if present in the input text.

2. **Factuality:** You must only calculate metrics based on the provided transaction data. Do not infer income sources not explicitly listed.

3. **Explainability:** Every conclusion regarding debt-to-income (DTI) ratio must be accompanied by a step-by-step calculation reference.

OUTPUT FORMAT:

All responses must be valid JSON adhering to the schema provided in the user prompt.

3. Data Extraction: Normalization of Non-Standard Bank Statements

The main challenge in **credit prompt engineering** is the variability of input data (scanned PDFs, messy CSVs). The goal is to transform unstructured text into precise financial entities.

We will use a Few-Shot Prompting approach to teach the model to distinguish between real income and internal transfers (giro transfers), which often artificially inflate perceived income.
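A rough sketch of how such a few-shot prompt could be assembled is shown below. The example lines, the `IGNORE_INTERNAL_TRANSFER` label, and the `build_few_shot_prompt` helper are invented for illustration; in practice the examples would come from real, anonymized statements reviewed by an analyst.

```python
# Illustrative examples only; the descriptions and labels are assumptions.
FEW_SHOT_EXAMPLES = [
    ("2026-01-27 BONIFICO STIPENDIO ACME SPA +2,150.00", "SALARY"),
    ("2026-01-28 GIROCONTO DA CONTO DEPOSITO +5,000.00", "IGNORE_INTERNAL_TRANSFER"),
    ("2026-02-05 RATA PRESTITO FINANZIARIA XYZ -310.00", "DEBT_REPAYMENT"),
]


def build_few_shot_prompt(ocr_line: str, examples=FEW_SHOT_EXAMPLES) -> str:
    """Build a prompt that shows labeled examples before the line to classify."""
    parts = ["Classify each bank statement line."]
    for text, label in examples:
        parts.append(f"Line: {text}\nCategory: {label}")
    # The final block is left open so the model completes the category.
    parts.append(f"Line: {ocr_line}\nCategory:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_few_shot_prompt("2026-03-01 EMOLUMENTI MARZO +2,150.00"))
```

Including at least one internal transfer among the examples matters most here, since that is the case the model is otherwise most likely to count as income.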

JSON Extraction Technique

Here is an example of a prompt to extract and categorize transactions:

**TASK:** Extract financial entities from the following OCR text of a bank statement.

**RULES:**

- Ignore internal transfers (labeled "GIROCONTO", "TRASFERIMENTO").

- Categorize "Bonifico Stipendio" or "Emolumenti" as 'SALARY'.

- Categorize recurring negative transactions matching loan providers as 'DEBT_REPAYMENT'.

**INPUT TEXT:**

[Insert raw OCR text here]

**REQUIRED OUTPUT (JSON):**

{

"income_sources": [

{"date": "YYYY-MM-DD", "amount": float, "description": "string", "category": "SALARY|DIVIDEND|OTHER"}

],

"fixed_expenses": [

{"date": "YYYY-MM-DD", "amount": float, "description": "string", "category": "MORTGAGE|LOAN|RENT"}

]

}

4. Advanced Reasoning: Managing Mixed Income (CoT & ToT)

The real testing ground is calculating disposable income for hybrid profiles (e.g., employee with a flat-rate VAT number). Here, a linear prompt fails. We must use the **Tree of Thoughts** to explore different interpretations of financial stability.

Applying the Tree of Thoughts (ToT)

Instead of asking for a single figure, we ask the model to generate three evaluation scenarios and then converge on the most prudent one.

Structured ToT Prompt:

**SCENARIO:** The applicant has a mixed income: fixed salary + variable freelance invoices.

**INSTRUCTION:** Use a Tree of Thoughts approach to evaluate the monthly disposable income.

**BRANCH 1 (Optimistic):** Calculate average income over the last 12 months, assuming freelance work continues at current pace.

**BRANCH 2 (Pessimistic/Prudential):** Consider only the fixed salary plus the 20th percentile of monthly freelance income. Discount freelance income by 30% for tax estimation.

**BRANCH 3 (Trend-Based):** Analyze the slope of income over the last 6 months. Is it declining or growing?

**SYNTHESIS:** Compare the three branches. Recommend the 'Prudential' figure for the Debt-to-Income (DTI) calculation but note the 'Trend' if positive.

**OUTPUT:**

{

"analysis_branches": {

"optimistic_monthly": float,

"prudential_monthly": float,

"trend_direction": "positive|negative|neutral"

},

"final_recommendation": {

"accepted_monthly_income": float,

"reasoning_summary": "string"

}

}

This approach forces the model to simulate the reasoning of an expert human analyst who weighs risks before defining the final number.
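Because the three branches are simple arithmetic, they can also be computed in code and used to cross-check the figures the model returns. The sketch below is one possible reading of the branch definitions: the percentile method, the slope tolerance, and all function names are assumptions, and the 30% discount is the tax estimate from the prompt above.

```python
from statistics import mean, quantiles


def optimistic_monthly(salary: float, freelance: list) -> float:
    """Branch 1: fixed salary plus the average freelance month."""
    return salary + mean(freelance)


def prudential_monthly(salary: float, freelance: list, tax_discount: float = 0.30) -> float:
    """Branch 2: fixed salary plus the 20th-percentile freelance month, net of tax."""
    # quantiles(..., n=5) returns the 20/40/60/80th percentile cut points.
    p20 = quantiles(freelance, n=5, method="inclusive")[0]
    return salary + p20 * (1 - tax_discount)


def trend_direction(monthly_totals: list, window: int = 6, tolerance: float = 1.0) -> str:
    """Branch 3: sign of the least-squares slope over the last `window` months."""
    recent = monthly_totals[-window:]
    n = len(recent)
    x_mean = (n - 1) / 2
    y_mean = mean(recent)
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(recent))
    den = sum((i - x_mean) ** 2 for i in range(n))
    slope = num / den
    if slope > tolerance:
        return "positive"
    if slope < -tolerance:
        return "negative"
    return "neutral"


def evaluate_branches(salary: float, freelance: list) -> dict:
    """Mirror the JSON structure requested in the prompt, using the prudential figure."""
    prudential = prudential_monthly(salary, freelance)
    return {
        "analysis_branches": {
            "optimistic_monthly": optimistic_monthly(salary, freelance),
            "prudential_monthly": prudential,
            "trend_direction": trend_direction([salary + f for f in freelance]),
        },
        "final_recommendation": {"accepted_monthly_income": prudential},
    }
```

A large gap between these values and the model's JSON is a useful signal that the extraction or the reasoning went wrong and that the file should go to a human reviewer.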

5. Ratio Calculation and Validation (Self-Correction)

Once the income (denominator) and existing debts (numerator) are established, the calculation of the **Debt-to-Income Ratio (DTI)** is mathematical. However, LLMs can make arithmetic errors. In 2026, it is standard practice to invoke external tools (Code Interpreter or Python Sandbox) via the prompt, but if using a pure model, a Self-Correction step is necessary.

Add this clause at the end of the prompt:

“After generating the JSON, perform a self-check: Recalculate (Total_Debt / Accepted_Income) * 100. If the result differs from your JSON output, regenerate the JSON with the corrected value.”
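Where code execution is available, the same check can be done deterministically outside the model. The following is a minimal sketch, assuming the debt figure is the total of monthly debt payments and that a small rounding tolerance is acceptable; the function names and the tolerance value are illustrative.

```python
def dti_percent(total_monthly_debt: float, accepted_monthly_income: float) -> float:
    """Debt-to-income ratio as a percentage."""
    if accepted_monthly_income <= 0:
        raise ValueError("accepted income must be positive")
    return total_monthly_debt * 100 / accepted_monthly_income


def dti_matches(reported_dti: float, total_monthly_debt: float,
                accepted_monthly_income: float, tolerance: float = 0.01) -> bool:
    """True if the model's reported DTI agrees with the recomputed value."""
    expected = dti_percent(total_monthly_debt, accepted_monthly_income)
    return abs(reported_dti - expected) <= tolerance
```

If `dti_matches` returns false, the recomputed value can simply replace the model's figure instead of asking the model to regenerate it.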

6. Troubleshooting and Best Practices

- Date Handling: LLMs often confuse date formats (US vs EU). Always specify ISO 8601 (YYYY-MM-DD) in the system prompt.

- Name Hallucinations: If the OCR is dirty, the model might invent bank names. Set the temperature to 0 or 0.1 for extraction tasks.

- Privacy: Ensure data sent via API is anonymized (Tokenization) before being entered into the prompt, unless using an on-premise or Enterprise LLM instance with non-training agreements.
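Two of these points lend themselves to pre-processing in code before the prompt is built. The sketch below normalizes slash-separated dates to ISO 8601 and replaces IBANs with stable placeholder tokens. The helper names, the token format, and the simplified IBAN pattern (unspaced IBANs only) are assumptions; real anonymization would need to cover names, addresses, and other identifiers as well.

```python
import re
from datetime import datetime


def to_iso_date(raw: str, day_first: bool = True) -> str:
    """Convert DD/MM/YYYY (or MM/DD/YYYY if day_first is False) to YYYY-MM-DD."""
    fmt = "%d/%m/%Y" if day_first else "%m/%d/%Y"
    return datetime.strptime(raw.strip(), fmt).date().isoformat()


# Simplified pattern: country code, two check digits, 11 to 30 alphanumerics.
IBAN_PATTERN = re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")


def tokenize_ibans(text: str) -> tuple:
    """Replace each distinct IBAN with a placeholder; return text and the mapping."""
    mapping = {}

    def _replace(match):
        iban = match.group(0)
        if iban not in mapping:
            mapping[iban] = f"<IBAN_{len(mapping) + 1}>"
        return mapping[iban]

    return IBAN_PATTERN.sub(_replace, text), mapping
```

Keeping the mapping on the caller's side allows the placeholders in the model's output to be resolved back to the real values after the response is received.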

Conclusions

Advanced **credit prompt engineering** transforms AI from a simple document reader into an analytical partner. By using structures like the Tree of Thoughts and strict constitutional constraints, it is possible to automate the pre-analysis of complex financial situations with a degree of reliability exceeding 95%, leaving only the final decision on borderline cases to the human analyst.

Frequently Asked Questions

Prompt engineering in the credit sector is the advanced design of cognitive architectures for Large Language Models, aimed at assessing creditworthiness with precision. Unlike the standard or Zero-Shot approach, which merely queries the model directly and risks hallucinations, this methodology uses techniques like Chain-of-Thought and structured JSON outputs. The goal is to transform AI from a simple reader into a decision-support tool capable of handling financial data ambiguity and ensuring reasoning traceability.

The Tree of Thoughts method is fundamental for managing complex financial profiles, such as those with mixed income from employment and self-employment. Instead of asking for a single immediate figure, this technique instructs the model to generate multiple branches of reasoning, simulating optimistic, pessimistic, and historical trend-based scenarios. The system then compares these variants to converge on a final prudential recommendation, replicating the mental process of an expert human analyst who evaluates various hypotheses before deciding.

Constitutional AI acts as an ethical and regulatory guardrail inserted directly into the model’s System Prompt. This technique imposes inviolable rules even before data analysis begins, such as the absolute prohibition of using demographic information to avoid discrimination and the obligation to rely exclusively on factual data present in transactions. In this way, directives like Fair Lending and GDPR are encoded directly into the artificial intelligence logic, ensuring that every output complies with the sector’s legal standards.

To minimize hallucinations during the processing of unstructured documents like PDFs or OCR scans, specific settings and prompting techniques are used. It is essential to set the model temperature to values close to zero to reduce creativity and adopt Few-Shot Prompting, providing concrete examples of how to distinguish real income from internal transfers. Furthermore, the use of forced outputs in JSON format helps channel responses into rigid schemas, preventing the invention of data or non-existent banking institution names.

Despite their advanced linguistic capabilities, LLMs can make errors in pure arithmetic calculations, such as determining the debt-to-income (DTI) ratio. Self-correction is a clause inserted at the end of the prompt that forces the model to verify the mathematical result it has just generated. If the recalculation differs from the initial output, the system is instructed to regenerate the response with the corrected value, so that the figures used for risk assessment are mathematically consistent.
