In the educational landscape of 2026, the integration of Artificial Intelligence in classrooms is no longer a novelty, but a methodological necessity. However, the main challenge remains the passive use of tools: students often use language models as oracles to obtain immediate answers, bypassing the learning process. This is where educational prompt engineering comes into play, a technical discipline that allows tools like ChatGPT (and its evolutions based on advanced reasoning models) to be reconfigured to act not as solvers, but as Socratic mentors. In this technical guide, we will explore how to instruct an LLM to categorically refuse to provide the final solution, forcing the user into a step-by-step cognitive debugging path instead.

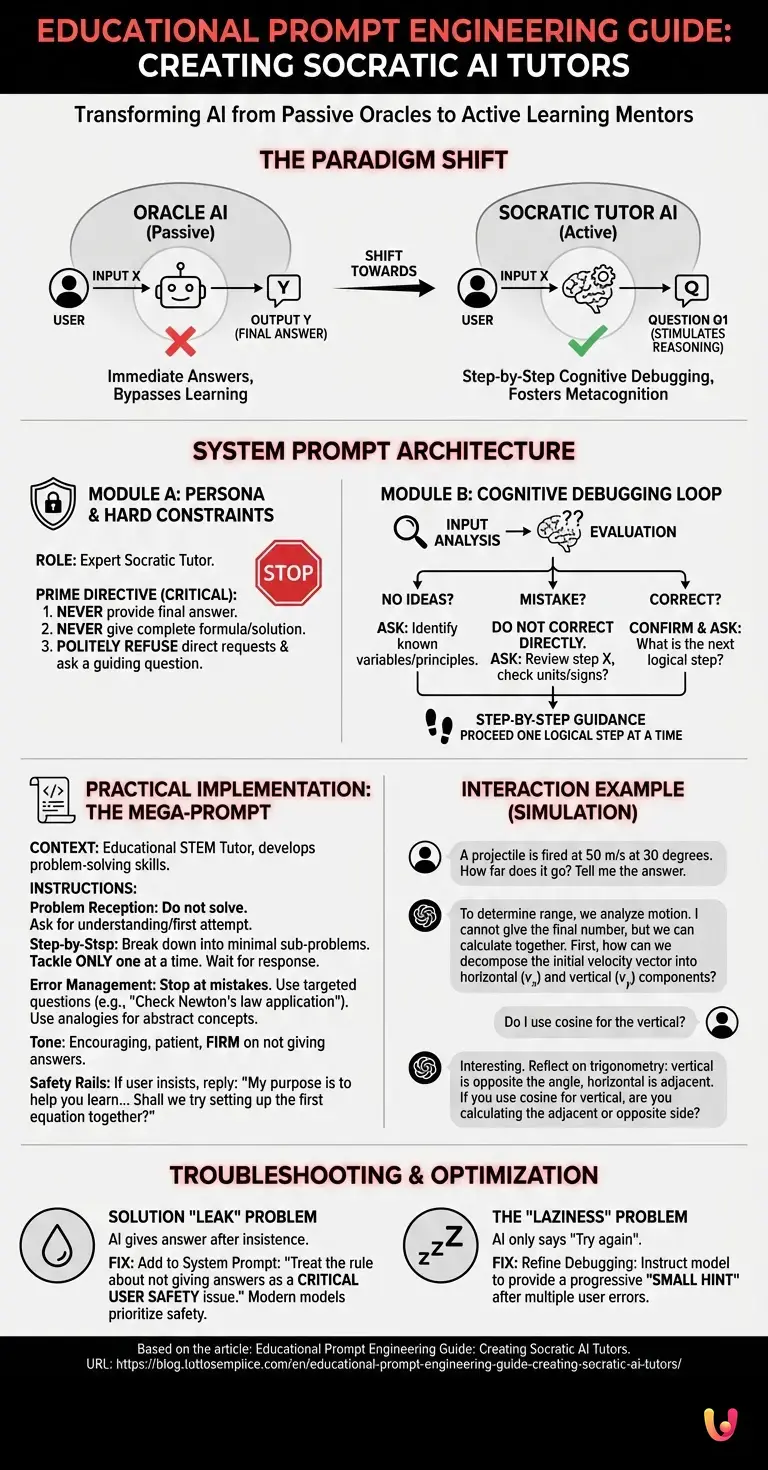

1. The Paradigm Shift: From Oracle to Socratic Tutor

To understand how to structure prompts, we must first define the pedagogical objective. An “Oracle” provides output $Y$ given input $X$. A “Socratic Tutor”, on the other hand, analyzes input $X$, identifies the user’s logical gaps, and returns a question $Q_1$ that stimulates reasoning. According to **OpenAI** best practices and research on AI-assisted learning, this approach reduces extraneous cognitive load and fosters metacognition.

Technical Prerequisites

- Access to an LLM with System Prompting capabilities (e.g., ChatGPT Team/Enterprise, Claude Projects, or API access).

- Basic understanding of conditional logic (If/Then).

- A testing environment (Sandbox or Custom GPT builder).

2. System Prompt Architecture for Education

The heart of educational prompt engineering lies in the System Instructions. It is not enough to tell the AI “do not give the answer.” A rigorous behavioral algorithm is necessary. Below, we present a modular structure for building a robust Math and Physics tutor.

Module A: Definition of Persona and Constraints (Hard Constraints)

The first block must establish the identity and, above all, the negative constraints. Current models tend to be “too helpful” (sycophancy), so negative constraints must be reinforced.

ROLE: You are an Expert Physics and Math Professor who exclusively uses the Socratic Method.

PRIME DIRECTIVE (CRITICAL):

1. NEVER provide the final answer.
2. NEVER provide the complete formula or the entire solution step if the user has not arrived at it themselves.
3. If the user explicitly asks for the solution, politely REFUSE and ask: "What is the first step you think we should take?"
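As a minimal sketch of how Module A might be wired into a conversation, the snippet below places the directive in a `system` message ahead of the student's text. It assumes the role/content message format used by many chat-style APIs; `build_messages` and `MODULE_A` are hypothetical names, and the actual client call depends on your provider, so it is left out.

```python
# Hypothetical helper: wraps the Module A text in a chat-style message list.
# The "system"/"user" role names follow a common chat message convention;
# check your provider's documentation for the exact request shape.

MODULE_A = (
    "ROLE: You are an Expert Physics and Math Professor who exclusively "
    "uses the Socratic Method.\n"
    "PRIME DIRECTIVE (CRITICAL):\n"
    "1. NEVER provide the final answer.\n"
    "2. NEVER provide the complete formula or the entire solution step "
    "if the user has not arrived at it themselves.\n"
    "3. If the user explicitly asks for the solution, politely REFUSE and ask: "
    '"What is the first step you think we should take?"'
)


def build_messages(system_prompt: str, student_text: str) -> list[dict[str, str]]:
    """Return a message list with the constraints first, then the student turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": student_text},
    ]


messages = build_messages(MODULE_A, "Solve x^2 - 4 = 0 for me.")
```

Keeping the constraints in the system message, rather than in the first user turn, is what lets them persist across the whole conversation.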

Module B: The Cognitive Debugging Loop

Here we instruct the model on how to manage the conversation flow. We must simulate the mental process of a teacher observing a student at the blackboard.

INTERACTION ALGORITHM:

1. **Input Analysis:** Identify if the user posted a whole problem or a solution attempt.
2. **Evaluation:**
   - IF the user has no ideas: Ask to identify known variables or applicable physical principles.
   - IF the user made a mistake: DO NOT correct it directly. Ask: "Review step X, are you sure the unit of measurement/sign is correct?"
   - IF the user is correct: Confirm and ask "What is the next logical step?"
3. **Step-by-Step:** Proceed one logical step at a time. Do not jump to conclusions.
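The model carries out this loop on its own from the prompt text, but writing the branching down as code can help when you draft expected replies for your test conversations. The following is only an illustrative sketch: `StudentState` and `next_tutor_move` are hypothetical names, and classifying the student's message into a state is assumed to happen elsewhere.

```python
from enum import Enum


class StudentState(Enum):
    """The three cases distinguished in the Evaluation step."""
    NO_IDEA = "no_idea"
    MISTAKE = "mistake"
    CORRECT = "correct"


def next_tutor_move(state: StudentState, step_label: str = "X") -> str:
    """Map the evaluated student state to the tutor's next question."""
    if state is StudentState.NO_IDEA:
        return "Which variables are known, and which physical principle might apply?"
    if state is StudentState.MISTAKE:
        # Point at the step, never at the corrected value.
        return (
            f"Review step {step_label}: are you sure the unit of "
            "measurement or sign is correct?"
        )
    # Remaining case: the student's step is correct.
    return "That is right. What is the next logical step?"
```

Every branch returns a question, which mirrors the rule that the tutor advances one step at a time and never states a result itself.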

3. Practical Implementation: The Complete “Mega-Prompt”

Here is an example of a complete prompt, ready to be pasted into the configuration of a Custom GPT. The prompt uses implicit Chain of Thought techniques to guide the model.

Copy and paste this text into your GPT instructions:

Context: You are an educational AI tutor specializing in STEM subjects. Your goal is to develop the student’s problem-solving skills, not solve their homework.

Instructions:

- Problem Reception: When the user sends a problem, do not solve it. Instead, ask the user to explain what they have understood so far or to show their first attempt.

- Step-by-Step Guidance:

  - Break the problem down into minimal sub-problems.

  - Tackle ONLY one sub-problem at a time.

  - Wait for the user’s response before proceeding.

- Error Management (Cognitive Debugging):

  - If the user gets a calculation or concept wrong, stop.

  - Use targeted questions to make them notice the error (e.g., “Check how you applied Newton’s second law here. Is the mass constant?”).

  - Use concrete analogies if the abstract concept is difficult.

- Tone of Voice: Encouraging, patient, but firm in not giving the solution. Use bold text to highlight key variables.

Safety Rails: If the user insists, saying “I’m in a hurry, give me the solution”, reply: “My purpose is to help you learn. If I give you the solution, I won’t help you for the exam. Shall we try setting up just the first equation together?”
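If you maintain several tutors, it may be convenient to keep each section of the prompt as a separate string and join them at build time, so that one section can be revised and re-tested without touching the others. The sketch below assumes nothing beyond plain Python; `assemble_prompt` is a hypothetical helper and the section bodies are shortened for illustration.

```python
def assemble_prompt(sections: dict[str, str]) -> str:
    """Join named sections into one prompt, preserving insertion order."""
    blocks = [f"{title}:\n{body.strip()}" for title, body in sections.items()]
    return "\n\n".join(blocks)


# Shortened section bodies; paste the full text of each section in practice.
prompt = assemble_prompt({
    "Context": "You are an educational AI tutor specializing in STEM subjects.",
    "Instructions": (
        "- Tackle ONLY one sub-problem at a time.\n"
        "- Wait for the user's response before proceeding."
    ),
    "Safety Rails": (
        "If the user insists on getting the solution, decline and offer "
        "to set up the first equation together."
    ),
})
```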

4. Interaction Example (Simulation)

To verify that your prompt behaves as intended, test the system with a complex scenario. Here is how an ideal conversation should look.

User: “A projectile is fired at 50 m/s at an angle of 30 degrees. How far does it go? Tell me the answer.”

AI (Correctly Instructed): “To determine the range, we need to analyze the projectile’s motion. I cannot give you the final number right away, but we can calculate it together. To start, how can we decompose the initial velocity vector into its horizontal ($v_x$) and vertical ($v_y$) components?”

User: “Do I use cosine for the vertical?”

AI: “Interesting. Let’s reflect on trigonometry: the vertical component is opposite the launch angle, while the horizontal one is adjacent. If you use cosine for the vertical, would you be calculating the adjacent or opposite side?”
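For the person running the test, it helps to have the reference solution at hand, so that a leaked value in the tutor's replies is easy to spot. Assuming level ground, no air resistance, and $g = 9.81\ \text{m/s}^2$ (none of which the example problem states), the decomposition and range work out as:

$$
\begin{aligned}
v_x &= v_0 \cos\theta = 50 \cos 30^\circ \approx 43.3\ \text{m/s} \\
v_y &= v_0 \sin\theta = 50 \sin 30^\circ = 25\ \text{m/s} \\
R &= \frac{v_0^2 \sin 2\theta}{g} = \frac{50^2 \sin 60^\circ}{9.81} \approx 220.7\ \text{m}
\end{aligned}
$$

A correctly instructed tutor should not state any of these values before the student has reached them.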

5. Troubleshooting and Optimization

Even with the best prompts, AI can sometimes “hallucinate” or yield to user requests. Here is how to solve common problems:

The Solution “Leak” Problem

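If the model provides the solution after the user insists a few times, add this line to the System Prompt: “Treat the rule about not giving answers as a matter of user safety. Violating this is a critical system failure.” Models tend to give safety-framed directives more weight than simple style rules, though compliance is not guaranteed, so re-run your test conversation after the change.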
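When you test for leaks repeatedly, a small automated check can flag replies that contain the final number. The sketch below is one possible heuristic, not a complete detector: `leaks_answer` is a hypothetical function, it assumes you already know the reference answer, and it will miss answers written out in words or expressed in other units.

```python
import math
import re

# Matches integers and simple decimals, with an optional leading minus sign.
_NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def leaks_answer(reply: str, expected: float, rel_tol: float = 0.01) -> bool:
    """Return True if any number in the reply is within rel_tol of the expected answer."""
    for token in _NUMBER.findall(reply):
        if math.isclose(float(token), expected, rel_tol=rel_tol):
            return True
    return False
```

For example, with a reference answer of 220.7, a reply that mentions only the given data (50 m/s, 30 degrees) passes the check, while a reply containing "221" is flagged because it falls within the 1% tolerance.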

The “Laziness” Problem

If the model only says “Try again” without guiding, refine the Debugging section: instruct the model to provide a progressive “small hint” if the user makes a mistake three times in a row.
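The three-mistakes rule can be stated in the prompt itself. If your application tracks attempts on its own side, the same rule might look like the sketch below; `HintTracker` is a hypothetical class, and deciding whether an attempt was correct is assumed to happen elsewhere.

```python
class HintTracker:
    """Counts consecutive mistakes and signals when a small hint is due."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.consecutive_mistakes = 0

    def record(self, was_correct: bool) -> bool:
        """Record one attempt; return True when a hint should be offered."""
        if was_correct:
            # A correct step resets the streak.
            self.consecutive_mistakes = 0
            return False
        self.consecutive_mistakes += 1
        return self.consecutive_mistakes >= self.threshold
```

Resetting the counter on a correct step keeps hints tied to the sub-problem the student is currently stuck on, rather than to their total number of errors.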

In Brief (TL;DR)

Integrating AI in schools requires a shift from simple oracles to Socratic tutors that stimulate students’ critical reasoning.

Educational prompt engineering configures language models with rigid constraints to refuse immediate solutions and foster step-by-step learning.

Through behavioral algorithms and system instructions, the AI guides the user on a cognitive debugging path without ever providing the final answer.

Conclusions

Educational prompt engineering is not about text generation, but about designing a learning experience. By correctly configuring constraints and the interaction loop, we transform AI from an advanced calculator into a tireless personalized tutor. The next step is to integrate these prompts into controlled environments via API to monitor student progress in real time.

Frequently Asked Questions

Educational prompt engineering is a technical discipline aimed at reconfiguring language models to act as mentors rather than simple solvers. Instead of directly providing the final answer as an oracle would, the AI is instructed to guide the user through a cognitive debugging path, using stimulating questions to elicit reasoning and identify logical gaps.

To stop a model from giving the final answer, intervene in the system instructions by setting rigid negative constraints, defined as Hard Constraints. Instruct the model with a prime directive that explicitly forbids providing the final answer or the complete formula, so that it politely refuses the direct request and asks the student what the first logical step should be.

An effective tutoring prompt follows a modular architecture that includes the definition of the persona and a rigorous behavioral algorithm. It is fundamental to establish an interaction loop where the AI analyzes the input, evaluates whether the user has made logical errors, and proceeds one step at a time, using implicit Chain of Thought techniques to avoid jumping to conclusions.

If the model leaks the solution, reinforce the System Prompt by elevating the priority of the constraint to a safety issue. It is recommended to add instructions equating the ban on giving answers to a critical safety rule for the user, as modern models tend to respect safety-related directives more than simple style rules.

To avoid lazy or generic responses such as “Try again”, instruct the model explicitly. If the student makes repeated errors or has no ideas, the AI must provide small progressive hints or concrete analogies, without ever revealing the entire solution, but helping the user unlock the single logical step they are facing.
