In Brief (TL;DR)

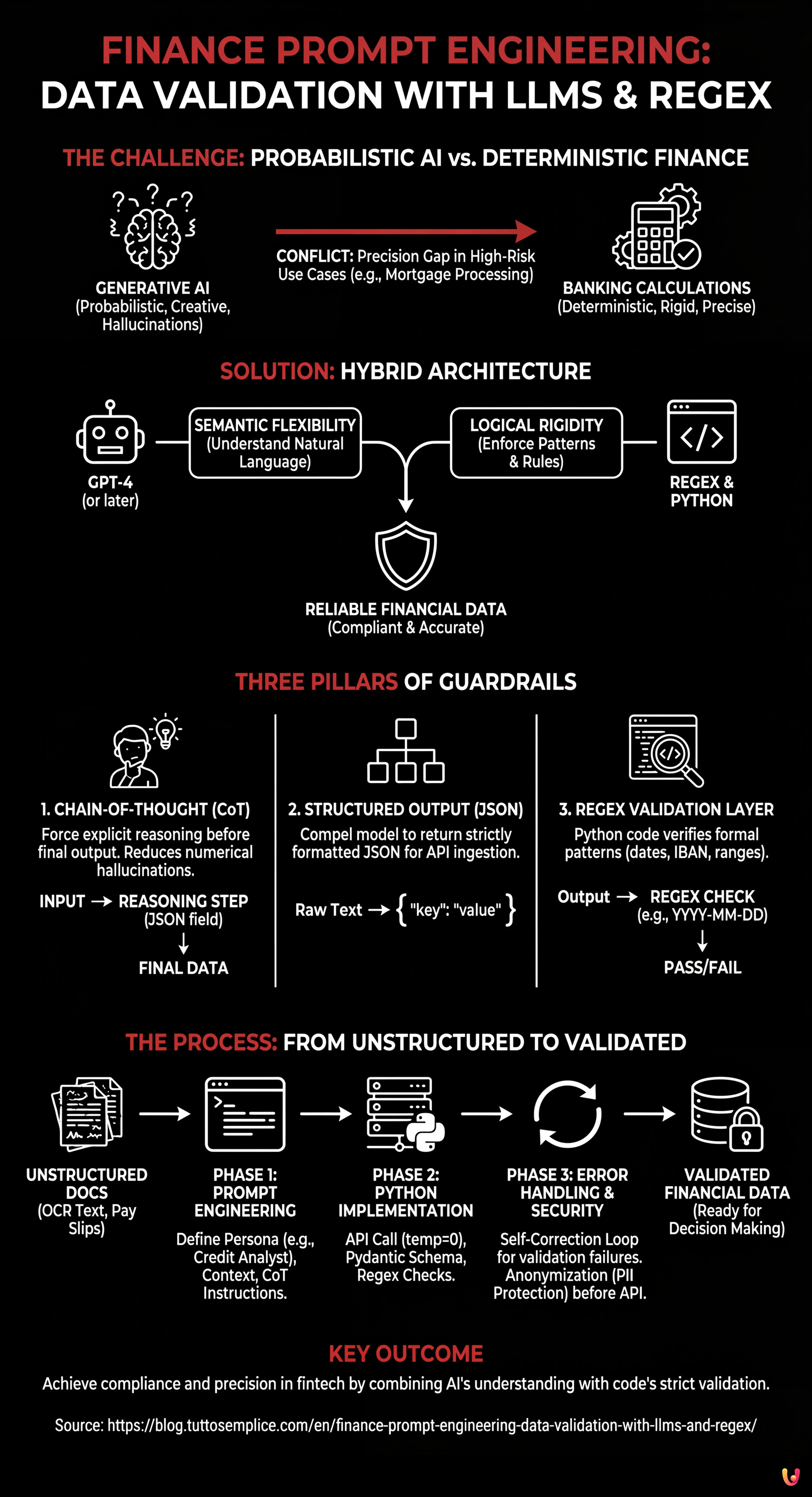

Overcoming the probabilistic nature of generative models is essential for reliably integrating artificial intelligence into critical decision-making processes.

A hybrid approach combines the semantic flexibility of LLMs with the logical rigidity of Regular Expressions to validate data.

Chain-of-Thought prompting and structured JSON outputs improve precision and support compliance in the automatic extraction of complex financial information.


In the fintech landscape of 2026, the adoption of Generative Artificial Intelligence is no longer a novelty but an operational standard. However, the real challenge lies not in deploying a chatbot but in reliably integrating LLMs (Large Language Models) into critical decision-making processes. In this technical guide, we will approach finance prompt engineering from a software-engineering perspective, focusing on a specific, high-risk use case: data extraction and validation for mortgage processing.

We will address the main problem of AI in the financial sector: the probabilistic nature of generative models versus the deterministic necessity of banking calculations. As we will see, the solution lies in a hybrid architecture that combines the semantic flexibility of models like GPT-4 (or later) with the logical rigidity of Regular Expressions (Regex) and programmatic controls.

The Paradox of Precision: Why LLMs Get the Math Wrong

Anyone who has worked with generative AI knows that models are excellent at understanding natural language but mediocre at complex arithmetic or strictly adhering to non-standard output formats. In a YMYL (Your Money Your Life) context, an error in calculating the debt-to-income ratio is not an acceptable hallucination; it is a compliance risk and a potential economic loss.
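To make the point concrete, the ratio itself is simple arithmetic that belongs in code rather than in the model. The sketch below assumes the ratio is total recurring monthly obligations (existing loans plus the new mortgage installment) divided by net monthly income, expressed as a percentage, in line with the extraction objective used later in this guide; the function name, the rounding rule, and the sample figures are illustrative assumptions, and lenders define the ratio and its acceptable cap in different ways.

```python
from decimal import Decimal, ROUND_HALF_UP


def debt_to_income_ratio(net_monthly_income: Decimal, monthly_obligations: list[Decimal]) -> Decimal:
    """Return total obligations / net income as a percentage, rounded to two decimals."""
    if net_monthly_income <= 0:
        raise ValueError("Net monthly income must be positive")
    total = sum(monthly_obligations, Decimal("0"))
    ratio = total / net_monthly_income * 100  # Decimal arithmetic: no binary floating-point drift
    return ratio.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Illustrative figures: 2,450.00 net income, a 650.00 mortgage installment and a 120.00 car loan
print(debt_to_income_ratio(Decimal("2450.00"), [Decimal("650.00"), Decimal("120.00")]))  # prints 31.43
```

Using `Decimal` rather than `float` keeps the result exact to the cent, and the same inputs always yield the same ratio: exactly the determinism a generative model cannot guarantee.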

Finance prompt engineering is not just about writing elegant sentences for the model. It involves designing a system of Guardrails (safety barriers) that force the model to operate within defined boundaries. The approach we will use is based on three pillars:

- Chain-of-Thought (CoT): Forcing the model to make its reasoning explicit before providing the final data.

- Structured Output (JSON): Compelling the model to return structured data for API ingestion.

- Regex Validation Layer: A Python code layer that verifies whether the LLM output respects formal patterns (e.g., IBAN, Tax ID, date formats).
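The third pillar is ordinary code. A minimal sketch of such a layer, assuming the IBAN is checked with the ISO 13616 mod-97 rule and taking the Italian codice fiscale as the example of a Tax ID (format only; its final check character is not verified). The function names are illustrative and do not come from any specific library.

```python
import re
from datetime import datetime

# Structural patterns (assumptions for this sketch)
IBAN_RE = re.compile(r"[A-Z]{2}\d{2}[A-Z0-9]{11,30}")  # country, check digits, 11-30 chars (15-34 total)
TAX_ID_RE = re.compile(r"[A-Z]{6}\d{2}[A-Z]\d{2}[A-Z]\d{3}[A-Z]")  # Italian codice fiscale layout
DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


def is_valid_iban(value: str) -> bool:
    iban = value.replace(" ", "").upper()
    if not IBAN_RE.fullmatch(iban):
        return False
    # ISO 13616 check: move the first four characters to the end, map letters
    # to numbers (A=10 ... Z=35) and require a remainder of 1 modulo 97
    rearranged = iban[4:] + iban[:4]
    numeric = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(numeric) % 97 == 1


def is_valid_tax_id(value: str) -> bool:
    # Format check only: the final check character is not recomputed here
    return TAX_ID_RE.fullmatch(value.strip().upper()) is not None


def is_valid_date(value: str) -> bool:
    # The regex enforces the layout; strptime also rejects impossible dates such as 2026-02-30
    if not DATE_RE.fullmatch(value):
        return False
    try:
        datetime.strptime(value, "%Y-%m-%d")
    except ValueError:
        return False
    return True
```

`re.fullmatch` is used instead of `re.match` with `^...$` because in Python `$` also matches just before a trailing newline, so a value such as `"2026-01-31\n"` would otherwise slip through.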

Phase 1: Engineering the Prompt for Unstructured Documents

Let’s imagine we need to extract data from a pay slip or from the OCR output of a scanned real estate appraisal. The text is dirty, messy, and full of abbreviations, and a generic prompt is likely to fail on it. We need to build a structured prompt.
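A typical source of that dirt is number formatting: the same amount can appear as 2.450,00 (Italian style), 2,450.00 (English style), or with a currency symbol attached. A minimal sketch of a deterministic normalizer for an amount string that has already been isolated, assuming that a separator followed by exactly two final digits is the decimal separator. That heuristic and the function name are assumptions; anything ambiguous returns `None` instead of a guess.

```python
import re
from decimal import Decimal
from typing import Optional

# Integer part: grouped thousands with ONE consistent separator, or plain digits.
# Optional decimal part: a separator followed by exactly two digits (heuristic assumption).
AMOUNT_RE = re.compile(
    r"(?P<int>\d{1,3}(?:(?P<sep>[.,])\d{3})(?:(?P=sep)\d{3})*|\d+)"
    r"(?:(?P<dec>[.,])(?P<frac>\d{2}))?"
)


def normalize_amount(raw: str) -> Optional[Decimal]:
    """Turn strings like '2.450,00', '2,450.00' or 'EUR 2450' into a Decimal.

    Returns None when the string is not a recognisable amount, so the caller can
    route the document to manual review instead of guessing.
    """
    text = re.sub(r"[^\d.,]", "", raw)  # drop currency symbols, letters and spaces
    match = AMOUNT_RE.fullmatch(text)
    if match is None:
        return None
    if match.group("sep") and match.group("sep") == match.group("dec"):
        return None  # e.g. '2.450.00': the same character used for thousands and decimals
    integer = re.sub(r"[.,]", "", match.group("int"))
    fraction = match.group("frac") or "00"
    return Decimal(f"{integer}.{fraction}")
```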

The “Persona” and “Context Setting” Technique

The prompt must clearly define the model’s role. We are not asking for a summary; we are asking for data extraction in the ETL (Extract, Transform, Load) sense.

```text
SYSTEM ROLE:
You are a Senior Credit Analyst specializing in mortgage processing. Your task is to extract critical financial data from unstructured text originating from OCR documentation.

OBJECTIVE:
Identify and normalize the Net Monthly Income and recurring expenses for the debt-to-income ratio calculation.

CONSTRAINTS:
1. Do not invent data. If a data point is missing, return null (the JSON literal, not the string "null").
2. Ignore one-off bonuses; focus on ordinary compensation.
3. The output MUST be exclusively in valid JSON format.
```

Implementing Chain-of-Thought (CoT)

To increase accuracy, we use the Chain-of-Thought technique: we ask the model to “reason” in a separate JSON field before extracting the value. In practice, this tends to reduce hallucinations involving numbers.
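Because the model generates its answer token by token, the reasoning can only influence the value if it is written first. A minimal sketch of a post-check, assuming the response uses the field names of the output format shown in this section (the function name is illustrative): it rejects outputs where `reasoning` is not the first key and separates it from the data, so the explanation can go to an audit log while the values move on to validation.

```python
import json


def split_reasoning(raw_json: str) -> tuple[str, dict]:
    """Return (reasoning, data); reject outputs where the value precedes the reasoning."""
    payload = json.loads(raw_json)  # json.loads keeps the key order of the JSON text
    if not isinstance(payload, dict):
        raise ValueError("Expected a JSON object")
    if next(iter(payload), None) != "reasoning":
        raise ValueError("'reasoning' must be the first field, so it is generated before the value")
    reasoning = payload.pop("reasoning")
    return reasoning, payload  # reasoning -> audit log, payload -> downstream validation
```

An output that puts the value first is not necessarily wrong, but its reasoning was written after the fact, so rejecting it (or sending it back for correction) is the conservative choice.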

Example of user prompt structure:

```text
INPUT TEXT:
[Insert pay slip OCR text here...]

INSTRUCTIONS:
Analyze the text step by step.
1. Identify all positive items (base salary, cost-of-living allowance).
2. Identify tax and social security deductions.
3. Exclude expense reimbursements and non-recurring bonuses.
4. If the net amount is not explicitly stated, calculate it; otherwise, extract the "Net for the month".

OUTPUT FORMAT (JSON):
{
  "reasoning": "Text string where you explain the logical reasoning followed to identify the net amount.",
  "net_income_value": Float or null,
  "currency": "EUR",
  "document_date": "YYYY-MM-DD"
}
```

Phase 2: Python Implementation and Hybrid Validation

Finance prompt engineering is useless without a backend to support it. This is where the hybrid approach comes into play. We do not blindly trust the LLM’s JSON. We pass it through a validator based on Regex and Pydantic.

Python Code for API Integration

Below is an example of how to structure the API call (using the widely adopted third-party libraries openai and pydantic, the latter for type validation) and integrate the Regex check.

```python
import json
import re
from typing import Optional

from openai import OpenAI  # openai>=1.0 client (the legacy openai.ChatCompletion API was removed)
from pydantic import BaseModel, ValidationError, field_validator  # Pydantic v2


# Definition of expected data schema (Guardrail #1)
class FinancialData(BaseModel):
    reasoning: str
    net_income_value: Optional[float]  # None when the model returns null for missing data
    currency: str
    document_date: str

    # Regex validator for the date (Guardrail #2)
    @field_validator("document_date")
    @classmethod
    def date_format_check(cls, v: str) -> str:
        pattern = r"\d{4}-\d{2}-\d{2}"
        if not re.fullmatch(pattern, v):  # fullmatch: no leading/trailing characters allowed
            raise ValueError("Invalid date format. Required YYYY-MM-DD")
        return v

    # Logical validator for income (Guardrail #3)
    @field_validator("net_income_value")
    @classmethod
    def realistic_income_check(cls, v: Optional[float]) -> Optional[float]:
        # Safety thresholds for manual alert (the lower bound of 0 is illustrative)
        if v is not None and (v <= 0 or v > 50000):
            raise ValueError("Income value out of standard parameters (Anomaly Detection)")
        return v


def extract_financial_data(ocr_text: str):
    prompt = f"""
Analyze the following banking OCR text and extract the required data.

TEXT: {ocr_text}

Return ONLY a JSON object with the keys: reasoning, net_income_value, currency, document_date.
"""
    try:
        client = OpenAI()  # reads OPENAI_API_KEY from the environment
        response = client.chat.completions.create(
            model="gpt-4-turbo",  # Or equivalent 2026 model
            messages=[
                {"role": "system", "content": "You are a rigorous financial data extractor."},
                {"role": "user", "content": prompt},
            ],
            temperature=0.0,  # Temperature 0 minimizes (but does not eliminate) output variability
            response_format={"type": "json_object"},  # JSON mode: valid JSON syntax, schema not guaranteed
        )
        raw_content = response.choices[0].message.content

        # Parsing and Validation
        data_dict = json.loads(raw_content)
        validated_data = FinancialData(**data_dict)
        return validated_data

    except json.JSONDecodeError:
        return "Error: The LLM did not produce valid JSON."
    except ValidationError as e:
        return f"Data Validation Error: {e}"
    except Exception as e:
        return f"Generic Error: {e}"


# Usage example
# result = extract_financial_data("Pay slip for January... Net to pay: 2,450.00 euros...")
```

Phase 3: Managing Hallucinations and Correction Loops

What happens if validation fails? In an advanced production system, we implement a Self-Correction Loop. If Pydantic raises an exception (e.g., incorrect date format), the system can automatically send a new request to the LLM including the error received.

Example of Automatic Correction Prompt:

“You generated a JSON with an error. The field ‘document_date’ did not respect the YYYY-MM-DD format. Correct the value and return the JSON again.”
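A minimal sketch of the loop, assuming the LLM call is wrapped in a hypothetical `call_llm(messages) -> str` function (in production, the chat-completion call) and using a small plain-Python validator in place of the Pydantic model so the example has no dependencies. The retry limit of 3 is an arbitrary choice.

```python
import json
import re
from typing import Callable, Optional

DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")


def find_error(payload: object) -> Optional[str]:
    """Return a message for the model describing the problem, or None if the payload is acceptable."""
    if not isinstance(payload, dict):
        return "The output must be a JSON object."
    date = payload.get("document_date")
    if not isinstance(date, str) or not DATE_RE.fullmatch(date):
        return "The field 'document_date' did not respect the YYYY-MM-DD format."
    value = payload.get("net_income_value")
    if value is not None and (isinstance(value, bool) or not isinstance(value, (int, float))):
        return "The field 'net_income_value' must be a number or null."
    return None


def extract_with_self_correction(
    call_llm: Callable[[list], str], prompt: str, max_attempts: int = 3
) -> dict:
    messages = [{"role": "user", "content": prompt}]
    error: Optional[str] = None
    for _ in range(max_attempts):
        raw = call_llm(messages)
        try:
            payload = json.loads(raw)
            error = find_error(payload)
        except json.JSONDecodeError as exc:
            error = f"The output was not valid JSON ({exc.msg})."
        if error is None:
            return payload
        # Feed the faulty answer and the validator's message back to the model
        messages.append({"role": "assistant", "content": raw})
        messages.append({
            "role": "user",
            "content": f"You generated a JSON with an error. {error} "
                       "Correct the value and return the JSON again.",
        })
    raise ValueError(f"Still invalid after {max_attempts} attempts: {error}")
```

Capping the attempts matters: a document that still fails after a few rounds should go to a human analyst rather than loop indefinitely.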

Privacy and Security Considerations (YMYL)

When applying prompt engineering to finance, managing PII (Personally Identifiable Information) is critical. Before sending any OCR text to a public API (even under an enterprise plan), it is best practice to apply an Anonymization Pre-Processing technique.

Using local Regex (deterministic code, not AI), names, tax codes, and addresses can be masked by replacing them with tokens (e.g., [CUSTOMER_NAME_1]). The LLM can then analyze the financial structure without exposing the mortgage applicant’s real identity, which supports GDPR compliance.
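A minimal sketch of this pre-processing step, assuming Italian tax codes, IBANs written without spaces, and a list of the applicant's names that the bank already holds (names have no reliable pattern, so they are matched as known values). Addresses are left out for brevity, and the function name and token scheme are illustrative. The token-to-value map never leaves the local system, so results can be re-identified after the LLM call.

```python
import re

# Illustrative patterns (assumptions): Italian codice fiscale and IBANs written without spaces
TAX_ID_RE = re.compile(r"\b[A-Z]{6}\d{2}[A-Z]\d{2}[A-Z]\d{3}[A-Z]\b")
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b")


def anonymize(text: str, known_names: list[str]) -> tuple[str, dict[str, str]]:
    """Replace PII with tokens; return the masked text and the token -> value map (kept locally)."""
    mapping: dict[str, str] = {}
    counters: dict[str, int] = {}

    def token_for(kind: str, value: str) -> str:
        for token, original in mapping.items():  # reuse the token for repeated values
            if original == value:
                return token
        counters[kind] = counters.get(kind, 0) + 1
        token = f"[{kind}_{counters[kind]}]"
        mapping[token] = value
        return token

    # Names are matched case-insensitively against data the bank already holds
    for name in known_names:
        pattern = re.compile(re.escape(name), re.IGNORECASE)
        if pattern.search(text):
            token = token_for("CUSTOMER_NAME", name)
            text = pattern.sub(lambda _m: token, text)
    text = TAX_ID_RE.sub(lambda m: token_for("TAX_ID", m.group(0)), text)
    text = IBAN_RE.sub(lambda m: token_for("IBAN", m.group(0)), text)
    return text, mapping
```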

Conclusions: The Future of Mortgage Processing

The integration of finance prompt engineering, traditional programming logic, and Regex validation represents the only viable path to bringing AI into core banking processes. It is not about replacing the human analyst, but providing them with pre-validated and normalized data, reducing data entry time by 80% and allowing them to focus on credit risk assessment.

The key to success is not a smarter model, but more robust prompt engineering and a stricter control system.

Frequently Asked Questions

Prompt engineering is essential for transforming the probabilistic nature of generative models into the deterministic outputs required by banks. Through the use of guardrails and structured instructions, risks of hallucinations are mitigated, ensuring that data extraction for critical processes, such as mortgage processing, meets rigorous compliance and precision standards.

The solution lies in a hybrid approach that combines the semantic capability of AI with the logical rigidity of Regular Expressions (Regex) and programmatic controls. Instead of asking the model to perform complex calculations, it is used to extract structured data which is subsequently validated and processed by a Python code layer, ensuring the accuracy required in the financial field.

The Chain-of-Thought technique improves data extraction accuracy by forcing the model to make its logical reasoning explicit before providing the final result. In the case of unstructured documents like pay slips, this method compels the AI to identify positive and negative items step-by-step, significantly reducing interpretation errors and false positives in numerical values.

To ensure privacy and GDPR compliance, it is necessary to apply an anonymization pre-processing technique. Before sending data to the model API, local scripts are used to mask identifiable information (PII) such as names and tax codes, allowing the AI to analyze the financial context without ever exposing the applicant’s real identity.

The Self-Correction Loop is an automated mechanism that manages model output errors. If the validator (e.g., Pydantic) detects an incorrect JSON format or an out-of-bounds value, the system resends the prompt to the LLM including the encountered error, asking the model to specifically correct that parameter. This iterative cycle drastically increases the success rate in automatic extraction.
