In Brief (TL;DR)

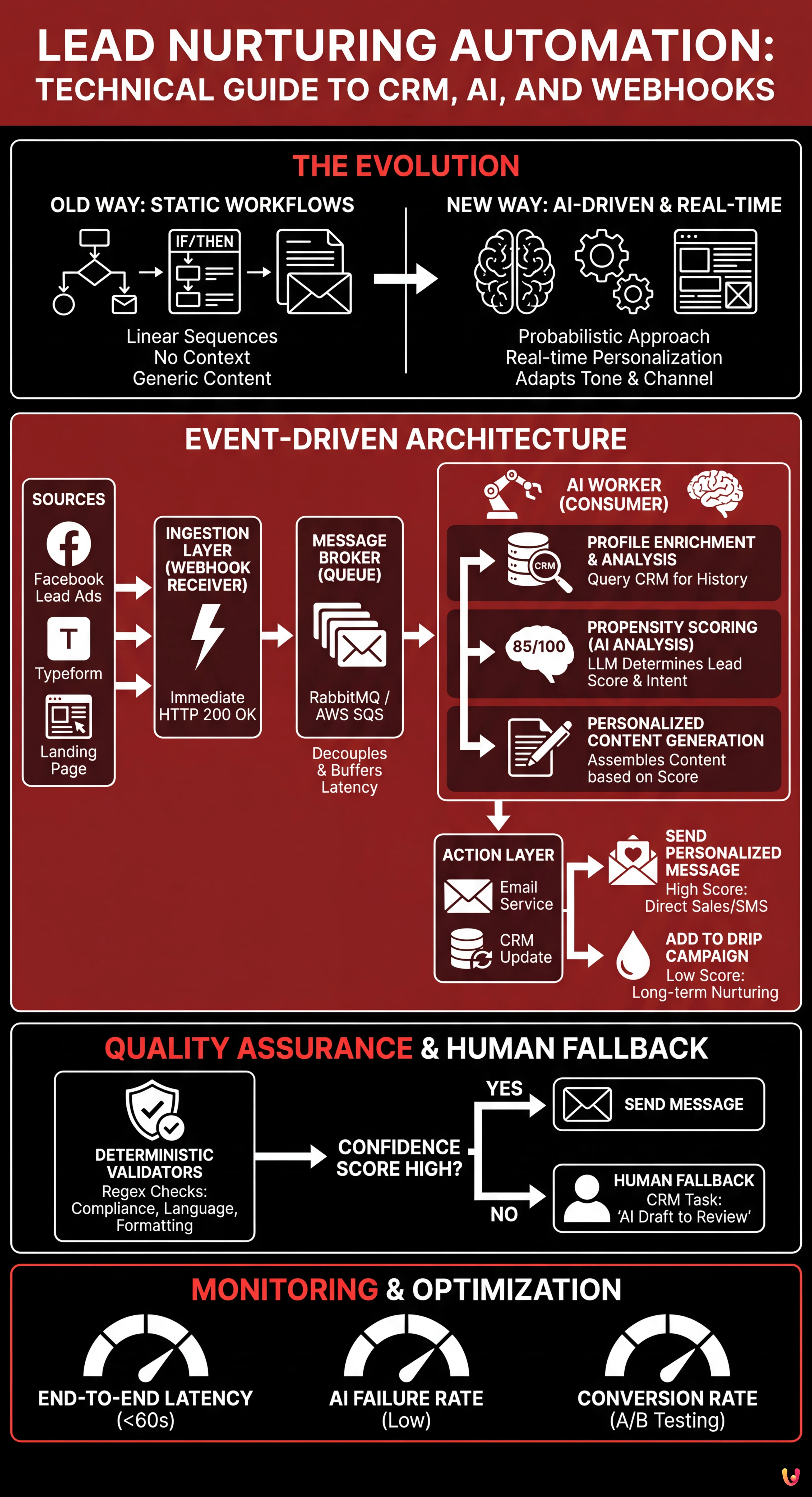

Lead nurturing automation is evolving from static workflows to dynamic systems that use generative AI to personalize communication in real time.

An event-driven architecture with webhooks and message queues is essential to manage AI latency without compromising user experience.

Implementing intelligent workers allows for data analysis, lead scoring calculation, and contextualized response generation at scale.

In the digital landscape of 2026, lead nurturing automation is no longer about simple “if/then” email sequences based on static triggers. Competition in the Fintech and B2B sectors demands a level of personalization and responsiveness that old linear workflows cannot guarantee. Today, the goal is to engineer systems capable of “reasoning” about the user profile in real time and adapting the tone, content, and communication channel accordingly.

This technical guide explores how to build a robust architecture that integrates a custom CRM, Generative Artificial Intelligence (LLM), and Webhooks. We will analyze how to manage the intrinsic latency of AI calls using Message Queues and how to implement security mechanisms to ensure automation does not compromise brand reputation.

The Evolution of Lead Nurturing Automation: Beyond Static Workflows

Traditionally, lead nurturing automation relied on predefined decision trees. If a user downloaded a whitepaper, they received email A. If they clicked a link, they received email B. This approach, while functional, lacks context. It doesn’t know who the user is, only what they did.

Integrating Generative AI makes it possible to move from a deterministic approach to a probabilistic, generative one. The system does not simply select a pre-written template; it assembles or rewrites it based on:

- Demographic and firmographic data (enriched in real time).

- Historical behavior in the CRM.

- Sentiment analysis of previous interactions.

- Purchase propensity calculated in the moment.
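
The four inputs above can be collected into a single context object before any prompt is built. The following is a minimal sketch; `LeadContext`, its field names, and `build_prompt_context` are hypothetical and do not come from any specific CRM.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class LeadContext:
    """Hypothetical container for the signals listed above."""
    email: str
    firmographics: dict = field(default_factory=dict)  # e.g. job title, company
    crm_history: list = field(default_factory=list)    # past events stored in the CRM
    sentiment: str = "unknown"                          # outcome of a prior sentiment analysis
    propensity: Optional[float] = None                  # purchase propensity, if already computed


def build_prompt_context(ctx: LeadContext) -> str:
    """Serialize the context as JSON so it can be passed to an LLM as the user message."""
    return json.dumps(asdict(ctx), sort_keys=True)


ctx = LeadContext(email="marco@example.com", firmographics={"job_title": "CFO"})
prompt_context = build_prompt_context(ctx)
```

Keeping the context in one structure makes it easier to log exactly what the model saw for a given lead.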

System Architecture: Event-Driven Design

To integrate AI into a nurturing flow without blocking the user experience or overloading the CRM, it is necessary to adopt an Event-Driven Architecture. We cannot afford to wait the 3-10 seconds required for an LLM to generate a complex response during a synchronous call.

Key Components

- Ingestion Layer (Webhook Receiver): A lightweight API endpoint that receives lead data.

- Message Broker (RabbitMQ / AWS SQS): Decouples data reception from processing.

- AI Worker (Consumer): The service that picks up the message, queries the AI, and prepares the action.

- Action Layer (CRM/Email Service): Executes the sending or record update.
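
To make the separation between the four components concrete, here is a small in-memory sketch. It assumes Python's standard `queue.Queue` as a stand-in for RabbitMQ or SQS, a placeholder `fake_ai` function instead of a real LLM call, and a plain list instead of a CRM or email service. All names are illustrative.

```python
import json
import queue

broker = queue.Queue()  # stand-in for the message broker
sent_actions = []       # stand-in for the CRM / email service


def ingest(payload: dict) -> dict:
    """Ingestion layer: enqueue and acknowledge immediately, with no AI call here."""
    broker.put(json.dumps(payload))
    return {"status": "queued"}


def fake_ai(lead: dict) -> str:
    """Placeholder for the LLM call made by the worker."""
    return f"Hello {lead.get('name', 'there')}"


def act(email: str, message: str) -> None:
    """Action layer: record what would be sent."""
    sent_actions.append((email, message))


def run_worker() -> int:
    """AI worker: drain the queue, generate a message, hand it to the action layer."""
    processed = 0
    while True:
        try:
            body = broker.get_nowait()
        except queue.Empty:
            return processed
        lead = json.loads(body)
        act(lead["email"], fake_ai(lead))
        processed += 1
```

Calling `ingest(...)` returns at once, and nothing reaches `sent_actions` until `run_worker()` runs. That is the decoupling the broker provides.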

Step 1: Lead Ingestion via Webhook

The entry point is a Webhook. Whether the lead comes from Facebook Lead Ads, Typeform, or a custom Landing Page, the system must react immediately with an HTTP 200 OK to confirm receipt, delegating heavy processing to a later time.

Here is a conceptual example in Python (Flask) of how to structure the endpoint:

    import json

    import pika  # Client for RabbitMQ
    from flask import Flask, jsonify, request

    app = Flask(__name__)


    @app.route('/webhook/lead-in', methods=['POST'])
    def receive_lead():
        # silent=True returns None instead of raising on a missing or invalid JSON body
        data = request.get_json(silent=True)

        # Basic data validation
        if not isinstance(data, dict) or not data.get('email'):
            return jsonify({'error': 'Missing email'}), 400

        # Instead of processing, we send to the queue
        connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
        try:
            channel = connection.channel()
            channel.queue_declare(queue='ai_nurturing_queue', durable=True)
            channel.basic_publish(
                exchange='',
                routing_key='ai_nurturing_queue',
                body=json.dumps(data),
                properties=pika.BasicProperties(
                    delivery_mode=2,  # Makes the message persistent
                ),
            )
        finally:
            # Close the connection even if publishing fails
            connection.close()

        return jsonify({'status': 'queued'}), 200

Step 2: Latency Management with Message Queues

The use of a queue (like RabbitMQ or Amazon SQS) is fundamental for the scalability of lead nurturing automation. If 1000 leads arrive simultaneously during a campaign, attempting to generate 1000 AI responses in parallel would lead to:

- Rate limiting by the AI provider (OpenAI, Anthropic, etc.).

- Web server timeouts.

- Data loss.

The queue acts as a buffer. The “Workers” (background processes) pick up leads one at a time or in batches, respecting API limits.
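
One simple way to respect a provider's rate limit is to process leads in fixed-size batches with a pause between them. The sketch below is broker-agnostic and illustrative only. The batch size and pause are assumptions to be tuned against the actual limits of your AI provider, and `handle` stands for whatever function processes a single lead.

```python
import time
from typing import Callable, Iterable, Iterator


def batches(items: Iterable, size: int) -> Iterator[list]:
    """Yield consecutive lists of at most `size` items."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def process_throttled(leads: Iterable, handle: Callable, batch_size: int = 10,
                      pause_seconds: float = 1.0,
                      sleep: Callable[[float], None] = time.sleep) -> int:
    """Process leads batch by batch, pausing between batches to stay under a rate limit."""
    count = 0
    for index, batch in enumerate(batches(leads, batch_size)):
        if index > 0:
            sleep(pause_seconds)  # no pause before the first batch
        for lead in batch:
            handle(lead)
            count += 1
    return count
```

The `sleep` parameter is injectable so that the pacing logic can be tested without actually waiting.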

Step 3: The AI Worker and Nurturing Logic

Here is where the magic happens. The Worker must perform three distinct operations:

A. Profile Enrichment and Analysis

Before generating content, the system queries the CRM (via API) to see if the lead already exists. If it is a returning lead, the AI needs to know. “Welcome back Marco” is much more powerful than a generic “Hello”.
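
As a rough illustration of the returning-lead check, the sketch below uses a plain dictionary keyed by email as a stand-in for the CRM lookup. In a real system this would be an API call, and `build_greeting` and the `first_name` field are hypothetical names.

```python
def build_greeting(email: str, crm_contacts: dict) -> str:
    """Return a personalized greeting for known leads and a generic one otherwise."""
    contact = crm_contacts.get(email.strip().lower())
    if contact and contact.get("first_name"):
        return f"Welcome back {contact['first_name']}"
    return "Hello"


crm_contacts = {"marco@example.com": {"first_name": "Marco"}}
returning = build_greeting(" Marco@Example.com ", crm_contacts)
first_time = build_greeting("new.lead@example.com", crm_contacts)
```

Normalizing the email before the lookup avoids treating `Marco@Example.com` and `marco@example.com` as two different leads.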

B. Propensity Scoring (AI Analysis)

We use AI not just to write, but to analyze. We pass lead data (Job Title, Company, Source, Form answers) to the LLM with a specific system prompt to determine the “Lead Score”.

Example of Analysis Prompt:

“Analyze the following lead data. You are a Fintech sales expert. Assign a score from 1 to 100 on the conversion probability for the ‘Green Mortgage’ product. Return a JSON with {score: int, reasoning: string, suggested_tone: string}.”
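
Because the model's reply is free text until proven otherwise, it is worth validating it against the shape the prompt asks for before acting on it. A minimal sketch follows, assuming the three keys named in the prompt above. `parse_analysis` is a hypothetical helper, not part of any SDK.

```python
import json


def parse_analysis(raw: str) -> dict:
    """Validate the JSON the prompt asks for; raise ValueError on anything unexpected."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    score = data.get("score")
    # bool is a subclass of int in Python, so exclude it explicitly
    if isinstance(score, bool) or not isinstance(score, int) or not 1 <= score <= 100:
        raise ValueError("score must be an integer from 1 to 100")

    for key in ("reasoning", "suggested_tone"):
        if not isinstance(data.get(key), str):
            raise ValueError(f"{key} must be a string")
    return data
```

A `ValueError` here is a natural trigger for retrying the call or routing the lead to human review.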

C. Personalized Content Generation

Based on the score, the system decides the path:

- Score < 30: Long-term educational nurturing (Generic email).

- Score 30-70: Active nurturing (Personalized email with specific Case Study for the lead’s sector).

- Score > 70: Hot Lead (SMS + Email inviting to direct demo).
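
The three tiers can be expressed as a small routing function. This is a sketch: the path names are hypothetical labels, and the boundary values 30 and 70 fall in the middle tier, as in the list above.

```python
def route_for_score(score: int) -> str:
    """Map a lead score to one of the three nurturing paths."""
    if score > 70:
        return "hot_lead"               # SMS + email inviting to a direct demo
    if score >= 30:
        return "active_nurturing"       # personalized email with a sector case study
    return "educational_nurturing"      # long-term, generic educational email
```

Keeping the thresholds in one function makes them easy to adjust once A/B test results come in.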

Here is how the Worker logic might look:

    import json

    from openai import OpenAI

    ai_client = OpenAI()  # Assumes the OpenAI SDK; reads OPENAI_API_KEY from the environment

    # generate_sales_email, send_email, notify_sales_team_slack and
    # add_to_drip_campaign are application helpers defined elsewhere.


    def process_lead(ch, method, properties, body):
        lead_data = json.loads(body)

        # 1. Propensity Analysis via AI
        analysis = ai_client.chat.completions.create(
            model="gpt-4-turbo",
            messages=[
                {"role": "system", "content": "Analyze this lead..."},
                {"role": "user", "content": json.dumps(lead_data)},
            ],
            response_format={"type": "json_object"},
        )
        result = json.loads(analysis.choices[0].message.content)

        # 2. Message Generation
        if result['score'] > 70:
            email_body = generate_sales_email(lead_data, result['reasoning'])
            send_email(lead_data['email'], email_body)
            notify_sales_team_slack(lead_data)
        else:
            add_to_drip_campaign(lead_data['email'], segment="low_intent")

        # Acknowledge only after the lead has been handled
        ch.basic_ack(delivery_tag=method.delivery_tag)

Step 4: Quality Assurance and Human Fallback

A lead nurturing automation system based on AI cannot be left unsupervised. “Hallucinations” are rare but possible. To mitigate risks in regulated sectors like Fintech:

Deterministic Validators

Before sending the generated email, the text must pass through a regex validator or a second, smaller and cheaper AI model that checks for:

- Non-compliant financial promises (e.g., “Guaranteed earnings”).

- Offensive or inappropriate language.

- Macroscopic formatting errors.
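
A regex-based validator for the checks listed above might look like the following sketch. The patterns, the word list, and the length limit are illustrative assumptions. A production list would come from your compliance team, not from this example.

```python
import re
from typing import List

# Illustrative patterns only; real rules depend on your compliance requirements.
BANNED_PATTERNS = {
    "financial_promise": re.compile(r"\bguaranteed\s+(earnings|returns?|profits?)\b", re.I),
    "offensive_language": re.compile(r"\b(stupid|idiot)\b", re.I),
    "unfilled_placeholder": re.compile(r"\{\{.*?\}\}|\[[A-Z_ ]{3,}\]"),
}
MAX_LENGTH = 3000  # characters; assumed upper bound for a nurturing email


def validate_email_body(text: str) -> List[str]:
    """Return the names of all checks the text fails; an empty list means it passed."""
    issues = [name for name, pattern in BANNED_PATTERNS.items() if pattern.search(text)]
    if not text.strip():
        issues.append("empty")
    if len(text) > MAX_LENGTH:
        issues.append("too_long")
    return issues
```

Returning a list of named issues, instead of a single boolean, gives the human reviewer a reason for each blocked draft.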

Fallback Mechanism

If the generation “Confidence Score” is low or if the validator detects an anomaly, the message is NOT sent. Instead, a task is created in the CRM (e.g., Salesforce or HubSpot) assigned to a human operator with the label: “AI draft to review”. This ensures automation supports humans, rather than blindly replacing them.
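
The send-or-review decision can be sketched as a single function. The `Draft` structure, the 0 to 1 confidence scale, and the 0.8 threshold are assumptions made for illustration. `send` and `create_review_task` are hypothetical callables standing in for your email service and CRM client.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    email: str
    body: str
    confidence: float                                 # assumed scale: 0.0 to 1.0
    issues: List[str] = field(default_factory=list)   # validator findings


def dispatch(draft: Draft, send: Callable, create_review_task: Callable,
             min_confidence: float = 0.8) -> str:
    """Send the draft only if it is clean and confident; otherwise queue it for a human."""
    if draft.issues or draft.confidence < min_confidence:
        create_review_task(draft.email, draft.body, label="AI draft to review")
        return "review"
    send(draft.email, draft.body)
    return "sent"
```

Passing the two side effects in as callables keeps the decision logic independent of any particular CRM or email provider.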

Monitoring and Optimization

Engineering does not end with deployment. It is necessary to monitor technical and business metrics:

- End-to-End Latency: How much time passes between the Webhook call and the email being sent? (Target: < 60 seconds).

- AI Failure Rate: How many generations are blocked by validators?

- Conversion Rate: Do AI-generated emails convert better than static templates? (Continuous A/B Testing).
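
The first two metrics can be computed from a simple event log. The sketch below assumes each event is a dictionary with hypothetical keys `received_at`, `sent_at` (or `None` if nothing was sent), and `blocked`. The 60-second target comes from the list above.

```python
from datetime import datetime, timedelta
from statistics import median


def summarize(events: list, target_seconds: float = 60.0) -> dict:
    """Compute median end-to-end latency, target misses, and the AI failure rate."""
    latencies = [
        (e["sent_at"] - e["received_at"]).total_seconds()
        for e in events
        if e["sent_at"] is not None
    ]
    blocked = sum(1 for e in events if e["blocked"])
    return {
        "median_latency_s": median(latencies) if latencies else None,
        "over_target": sum(1 for s in latencies if s >= target_seconds),
        "ai_failure_rate": blocked / len(events) if events else 0.0,
    }


t0 = datetime(2026, 1, 1, 12, 0, 0)
events = [
    {"received_at": t0, "sent_at": t0 + timedelta(seconds=20), "blocked": False},
    {"received_at": t0, "sent_at": t0 + timedelta(seconds=90), "blocked": False},
    {"received_at": t0, "sent_at": None, "blocked": True},
    {"received_at": t0, "sent_at": t0 + timedelta(seconds=40), "blocked": False},
]
report = summarize(events)
```

Conversion rate is left out here because it depends on downstream sales data, not on the pipeline's own events.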

Conclusions

Lead nurturing automation in 2026 is as much a software architecture exercise as it is a marketing one. Integrating CRM, Webhooks, and AI requires careful management of asynchronous data flows. However, the result is a system capable of conversing with thousands of potential customers as if each were the only one, increasing operational efficiency and acquisition campaign ROI.

Frequently Asked Questions

AI integration transforms lead nurturing from a static process based on decision trees to a probabilistic and generative approach. Instead of sending predefined templates, the system analyzes demographic and behavioral data in real time to assemble personalized content, adapting tone and message to the specific user context.

Message queues, such as RabbitMQ or AWS SQS, are fundamental for managing the intrinsic latency of LLM calls without blocking the user experience. They act as a buffer that decouples data reception from processing, preventing server timeouts and data loss during traffic peaks.

A robust architecture consists of four key elements: an Ingestion Layer receiving data via Webhook, a Message Broker managing the request queue, an AI Worker performing analysis and content generation, and an Action Layer integrated into the CRM to finalize sending or record updating.

To mitigate risks, especially in regulated sectors like Fintech, deterministic validators are used to filter prohibited terms or non-compliant promises. Additionally, a Human Fallback mechanism is implemented: if the generation confidence level is low, the message is saved as a draft in the CRM for human review instead of being sent directly.

The system sends profile data and interaction history to an LLM with a specific system prompt. The AI analyzes this information to assign a numerical conversion probability score, allowing contacts to be automatically routed towards long-term educational paths or direct sales contact.
