In Brief (TL;DR)

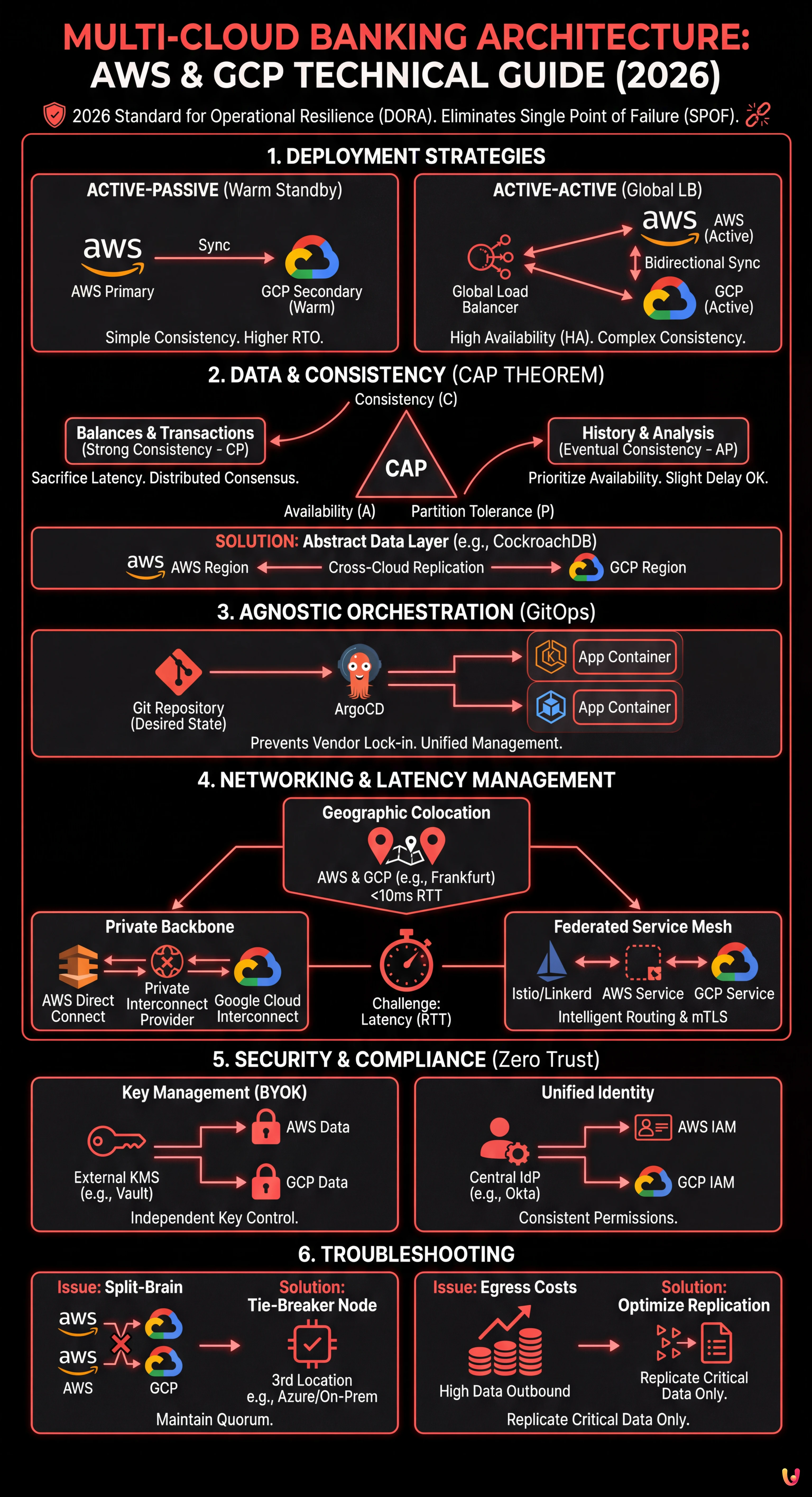

Adopting hybrid architectures on AWS and GCP ensures the operational resilience required by regulations such as DORA.

Data management requires a deliberate balance between consistency and availability, applying the CAP theorem to the choice between Active-Active and Active-Passive configurations.

Kubernetes orchestration and a GitOps approach enable provider-agnostic, efficient deployments, while regional colocation and private interconnects mitigate the latency between providers.

The devil is in the details. 👇 Keep reading to discover the critical steps and practical tips to avoid mistakes.

In the fintech landscape of 2026, multi-cloud banking architecture is no longer an exotic option but a de facto standard for ensuring the operational resilience required by regulations such as the EU's Digital Operational Resilience Act (DORA). Today, dependence on a single cloud provider is an unacceptable Single Point of Failure (SPOF) for critical services such as real-time mortgage comparators or Core Banking Systems.

This technical guide explores how to design, implement, and maintain a hybrid infrastructure distributed between Amazon Web Services (AWS) and Google Cloud Platform (GCP). We will analyze the engineering challenges related to data synchronization, orchestration via Kubernetes, and the application of fundamental distributed systems theorems to balance consistency and availability.

Prerequisites and Technology Stack

The strategies described here assume familiarity with the following components:

- Orchestration: Kubernetes (EKS on AWS, GKE on GCP).

- IaC (Infrastructure as Code): Terraform or OpenTofu for agnostic provisioning.

- CI/CD & GitOps: ArgoCD or Flux for cluster state synchronization.

- Networking: AWS Direct Connect and Google Cloud Interconnect, managed via BGP.

- Database: Distributed NewSQL solutions (e.g., CockroachDB) or custom replication strategies.

1. Deployment Strategies: Active-Active vs. Active-Passive

The choice between an Active-Active and an Active-Passive configuration shapes the entire multi-cloud banking architecture. In finance, where the RPO (Recovery Point Objective) must approach zero, the trade-offs between the two options are sharp.

Active-Passive Scenario (Warm Standby)

In this scenario, AWS might handle primary traffic while GCP maintains a synchronized replica of the infrastructure, ready to scale out on failover. It is the conservative choice: it reduces costs and avoids the complexity of managing write conflicts.

- Pros: Simplicity in managing data consistency (writing to a single master).

- Cons: A higher RTO (Recovery Time Objective), because the secondary environment must warm up and scale before it can absorb production traffic.
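A minimal sketch of the failover decision, assuming a hypothetical controller that counts consecutive failed health checks against the AWS primary before promoting the GCP standby. The class name and the threshold of 3 are illustrative assumptions, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass
class FailoverController:
    """Hypothetical Active-Passive controller: promotes the standby after
    `threshold` consecutive failed health checks on the primary."""
    primary: str = "aws"
    standby: str = "gcp"
    threshold: int = 3
    consecutive_failures: int = 0
    active: str = ""

    def __post_init__(self) -> None:
        self.active = self.primary

    def observe(self, primary_healthy: bool) -> str:
        """Record one health-check result and return the cloud serving traffic."""
        if self.active != self.primary:
            return self.active  # already failed over; failback is a deliberate, manual step
        if primary_healthy:
            self.consecutive_failures = 0  # a single success resets the counter
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.threshold:
                self.active = self.standby
        return self.active


ctrl = FailoverController()
results = [ctrl.observe(h) for h in [True, False, False, True, False, False, False]]
print(results)  # ['aws', 'aws', 'aws', 'aws', 'aws', 'aws', 'gcp']
```

Requiring several consecutive failures avoids flapping on a single lost probe, but every extra probe interval adds directly to the RTO, which is the trade-off noted in the Cons above.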

Active-Active Scenario (Global Load Balancing)

Both providers serve traffic in real-time. This is the ideal configuration for High Availability (HA), but it introduces the complex challenge of bidirectional data consistency.
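The core hazard can be shown with a toy model, assuming (hypothetically) that each cloud validates debits against its own local copy of the balance and replicates asynchronously. Both sides approve a debit of 80 against a balance of 100, so once the two histories are merged the account is overdrawn: exactly the double-spend the next section rules out for balances.

```python
class RegionalReplica:
    """Hypothetical replica that approves debits against its own local view."""

    def __init__(self, name: str, balance: int) -> None:
        self.name = name
        self.balance = balance
        self.approved: list[int] = []

    def debit(self, amount: int) -> bool:
        if amount > self.balance:
            return False
        self.balance -= amount
        self.approved.append(amount)
        return True


# Both clouds start from the same replicated balance of 100.
aws = RegionalReplica("aws", 100)
gcp = RegionalReplica("gcp", 100)

# Concurrent debits arrive before either cloud has seen the other's write.
aws_ok = aws.debit(80)
gcp_ok = gcp.debit(80)

# Merging both histories: 160 was approved against 100 of funds.
total_approved = sum(aws.approved) + sum(gcp.approved)
final_balance = 100 - total_approved
print(aws_ok, gcp_ok, final_balance)  # True True -60
```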

2. The Data Challenge: CAP Theorem and Eventual Consistency

According to the CAP Theorem (Consistency, Availability, Partition Tolerance), in the presence of a network partition (P) between AWS and GCP, a banking system must choose between Consistency (C) and Availability (A).

For a banking system, the choice is not binary but contextual:

- Balances and Transactions (Strong Consistency): We cannot allow a user to spend the same money twice on two different clouds. Here we sacrifice latency and, during a partition, availability to guarantee consistency (CP), using distributed consensus protocols such as Raft or Paxos.

- Transaction History or Mortgage Analysis (Eventual Consistency): It is acceptable for history to appear with a few milliseconds of delay on the secondary region. Here we prioritize availability (AP).
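The contextual choice can be expressed as a per-data-class write policy. This is a minimal sketch, assuming a hypothetical `handle_write` hook that already knows whether the local side can reach a consensus quorum; the data-class names and the mapping are illustrative. In a distributed SQL database the quorum check itself lives inside the consensus layer, not in application code.

```python
from enum import Enum


class Consistency(Enum):
    STRONG = "CP"    # reject writes that cannot reach a quorum
    EVENTUAL = "AP"  # accept locally, replicate once the link recovers


# Hypothetical mapping of data classes to consistency levels.
POLICY = {
    "balance": Consistency.STRONG,
    "transaction": Consistency.STRONG,
    "history": Consistency.EVENTUAL,
    "mortgage_analysis": Consistency.EVENTUAL,
}


def handle_write(data_class: str, has_quorum: bool, pending: list[str]) -> str:
    """Decide what happens to a write while a partition may be in progress."""
    level = POLICY[data_class]
    if has_quorum:
        return "committed"
    if level is Consistency.STRONG:
        return "rejected"  # give up availability to preserve consistency
    pending.append(data_class)  # queued for asynchronous replication
    return "accepted-locally"


queue: list[str] = []
print(handle_write("balance", has_quorum=False, pending=queue))  # rejected
print(handle_write("history", has_quorum=False, pending=queue))  # accepted-locally
print(handle_write("balance", has_quorum=True, pending=queue))   # committed
print(queue)  # ['history']
```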

Technical Implementation of Synchronization

To mitigate latency and split-brain risks, the modern approach relies on an abstract data layer. Rather than running RDS (AWS) and Cloud SQL (GCP) independently, you deploy a geographically distributed database cluster, such as CockroachDB or YugabyteDB, whose nodes span both cloud providers and which natively manages synchronous and asynchronous replication.

3. Agnostic Orchestration with Kubernetes

To avoid vendor lock-in, the application must be containerized and agnostic to the underlying infrastructure. Kubernetes acts as the abstraction layer.

Multi-Cluster Management with GitOps

We will not manage clusters imperatively. By using a GitOps approach with ArgoCD, we can define the desired state of the application in a Git repository. ArgoCD will take care of applying the configurations simultaneously on EKS (AWS) and GKE (GCP).
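What the ApplicationSet controller does with a list generator can be modelled in a few lines: each list element is a parameter set, and the template is rendered once per element into a separate Application targeting that cluster. This is a simplified model, not ArgoCD code; it mimics the default `{{param}}` placeholder substitution, and the template fields and the `banking` namespace are hypothetical.

```python
from typing import Any

# Parameter sets, mirroring the list generator elements in the ApplicationSet example.
elements = [
    {"cluster": "aws-eks-prod", "region": "eu-central-1"},
    {"cluster": "gcp-gke-prod", "region": "europe-west3"},
]

# Hypothetical template: one Application per cluster, named after it.
template = {
    "metadata": {"name": "banking-core-{{cluster}}"},
    "spec": {"destination": {"name": "{{cluster}}", "namespace": "banking"}},
}


def render(node: Any, params: dict[str, str]) -> Any:
    """Recursively substitute {{key}} placeholders in every string of the template."""
    if isinstance(node, dict):
        return {k: render(v, params) for k, v in node.items()}
    if isinstance(node, list):
        return [render(v, params) for v in node]
    if isinstance(node, str):
        for key, value in params.items():
            node = node.replace("{{" + key + "}}", value)
        return node
    return node


applications = [render(template, p) for p in elements]
for app in applications:
    print(app["metadata"]["name"], "->", app["spec"]["destination"]["name"])
# banking-core-aws-eks-prod -> aws-eks-prod
# banking-core-gcp-gke-prod -> gcp-gke-prod
```

Because both Applications come from one template in Git, a change merged once reaches EKS and GKE together, which is what keeps the two clouds from drifting apart.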

```yaml
# Conceptual example of ApplicationSet in ArgoCD
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: banking-core-app
spec:
  generators:
    - list:
        elements:
          - cluster: aws-eks-prod
            region: eu-central-1
          - cluster: gcp-gke-prod
            region: europe-west3
  template:
    # Deployment configuration...
```

4. Networking and Latency Management

Latency between cloud providers is the number one enemy of distributed architectures. A transaction that requires a synchronous commit on two different clouds inevitably pays at least one round-trip time (RTT) between the data centers before it can be acknowledged.
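To see why RTT dominates, here is a rough lower bound for a Raft-style commit: the leader must wait for enough follower acknowledgements to form a majority, so commit latency is roughly the RTT to the k-th fastest follower. The function name and the RTT figures are illustrative assumptions, and the model ignores disk fsync and the client-to-leader hop.

```python
def commit_latency_ms(follower_rtts_ms: list[float]) -> float:
    """Lower bound on a Raft-style commit as seen by the leader.

    A cluster of len(follower_rtts_ms) + 1 voters commits once a majority
    holds the entry; the leader counts itself, so it waits for the k-th
    fastest follower acknowledgement.
    """
    voters = len(follower_rtts_ms) + 1
    majority = voters // 2 + 1
    acks_needed = majority - 1  # the leader's own log counts toward the majority
    if acks_needed == 0:
        return 0.0
    return sorted(follower_rtts_ms)[acks_needed - 1]


# Leader in AWS Frankfurt; hypothetical RTTs to a GCP Frankfurt follower and a distant third site.
print(commit_latency_ms([2.0, 12.0]))   # 2.0
# The same three voters with both followers far away.
print(commit_latency_ms([25.0, 30.0]))  # 25.0
```

The design implication is that a majority of voters should sit within a few milliseconds of each other; a distant voter then only matters when one of the nearby ones is unavailable.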

Mitigation Strategies

- Geographic Colocation: Select AWS and GCP regions that are physically close (e.g., Frankfurt for both, eu-central-1 and europe-west3) to keep RTT below 10 ms.

- Private Backbone: Avoid the public internet for database synchronization. Use Site-to-Site VPNs or dedicated interconnection solutions via carrier-neutral partners (e.g., Equinix Fabric) that connect AWS Direct Connect and Google Cloud Interconnect.

- Service Mesh (Istio/Linkerd): Implement a federated Service Mesh to manage intelligent traffic routing, automatic API call failover, and cross-cloud mTLS (Mutual TLS) for security.

5. Security and Compliance (DORA & GDPR)

In a multi-cloud banking architecture, the attack surface increases. Security must be managed according to the Zero Trust model.

- Key Management (BYOK): Use an external key management system (HSM in colocation or services like HashiCorp Vault) to maintain control of encryption keys independently of the cloud provider.

- Unified Identity: Federate identities (IAM) through a central Identity Provider (e.g., Okta or Microsoft Entra ID, formerly Azure AD) so that permissions stay consistent across AWS and GCP.

6. Troubleshooting and Common Issue Resolution

Issue: Split-Brain in the Database

Symptom: The two clouds lose connection with each other and both accept divergent writes.

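Solution: Implement a “Tie-Breaker” or an observer node in a third location (e.g., Azure or an on-premises data center) so that the number of voting members is odd. Only one side of a partition can then hold the majority that consensus protocols require, and the minority side stops accepting writes.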
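The arithmetic behind the tie-breaker is a strict-majority check. In this sketch the vote counts per location (two voters per cloud plus one at a hypothetical third site) are illustrative assumptions. With an even split across two clouds, neither side of a partition can form a majority, so a correctly configured consensus system halts writes rather than diverging; adding the third-site voter lets exactly one side continue.

```python
def has_majority(side: set[str], votes: dict[str, int]) -> bool:
    """True if the locations in `side` together hold a strict majority of all votes."""
    total = sum(votes.values())
    return 2 * sum(votes[loc] for loc in side) > total


two_clouds = {"aws": 2, "gcp": 2}
print(has_majority({"aws"}, two_clouds), has_majority({"gcp"}, two_clouds))
# False False: neither side may write; safe, but the service halts

with_tiebreaker = {"aws": 2, "gcp": 2, "tiebreaker": 1}
# During the partition, the tie-breaker site can still reach AWS but not GCP.
print(has_majority({"aws", "tiebreaker"}, with_tiebreaker),
      has_majority({"gcp"}, with_tiebreaker))
# True False: exactly one side keeps accepting writes
```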

Issue: Egress Costs (Data Outbound)

Symptom: High invoices due to continuous data synchronization between AWS and GCP.

Solution: Optimize data replication: replicate only critical transactional data in real time, and apply compression and deduplication to what you ship. Negotiate dedicated egress rates with the providers for cross-cloud traffic.
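A back-of-the-envelope estimate helps decide what is worth replicating in real time. The sketch assumes a hypothetical workload (2,000 replicated writes per second of about 1 KB each), a 30-day month, an assumed 4:1 compression ratio, and a placeholder rate of 0.02 per GB; real inter-cloud egress prices vary by provider, region, and interconnect, so substitute the figures from your own contracts.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumed 30-day month


def monthly_egress_cost(
    writes_per_second: float,
    bytes_per_write: float,
    compression_ratio: float,
    price_per_gb: float,
) -> float:
    """Rough monthly cost of replicating writes from one cloud to the other."""
    raw_bytes = writes_per_second * bytes_per_write * SECONDS_PER_MONTH
    wire_gb = raw_bytes / compression_ratio / 1e9  # decimal GB (1e9 bytes), an assumption
    return wire_gb * price_per_gb


uncompressed = monthly_egress_cost(2_000, 1_000, 1.0, 0.02)
compressed = monthly_egress_cost(2_000, 1_000, 4.0, 0.02)
print(f"{uncompressed:.2f} vs {compressed:.2f} per month")  # 103.68 vs 25.92 per month
```

Cost scales linearly with every factor, so halving the replicated volume (for example by batching non-critical history) matters as much as halving the price per GB.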

Conclusions

Building a multi-cloud banking architecture requires a paradigm shift: moving from managing servers to managing distributed services. Although operational complexity increases, the gain in terms of resilience, data sovereignty, and bargaining power with vendors justifies the investment for modern financial institutions. The key to success lies in rigorous automation (GitOps) and a deep understanding of data consistency models.

Frequently Asked Questions

Adopting a multi-cloud architecture has become a de facto standard for financial institutions primarily to ensure operational resilience and regulatory compliance. Regulations like DORA in the European Union require mitigating risks associated with dependence on a single technology provider. By using multiple providers like AWS and GCP, banks eliminate the “Single Point of Failure”, ensuring that critical services such as Core Banking Systems remain operational even in the event of severe outages of an entire cloud provider, thus increasing data sovereignty and service continuity.

The choice between these two strategies defines the balance between costs, complexity, and recovery times. In the Active-Passive configuration, one cloud handles traffic while the other maintains a replica ready to take over, offering simpler data consistency management but higher recovery times. Conversely, the Active-Active scenario distributes traffic in real-time across both providers; this solution is ideal for high availability and eliminating downtime but requires complex management of bidirectional data synchronization to avoid write conflicts.

Data management in a distributed environment is based on the CAP Theorem, which imposes a contextual choice between consistency and availability in the event of a network partition. For critical data like balances and transactions, strong consistency must be prioritized by sacrificing latency, using distributed consensus protocols. For less sensitive data, such as transaction history, one can opt for eventual consistency. Technologically, this is often resolved by abstracting the data layer with geographically distributed databases, such as CockroachDB, which natively manage replication between different providers.

Latency is the main challenge in distributed architectures. To mitigate it, geographic colocation is fundamental: select regions of different providers that are physically close, such as Frankfurt for both, to keep the round-trip time (RTT) between them under 10 milliseconds. Furthermore, using the public internet for database synchronization is discouraged; private backbones or dedicated interconnection solutions via neutral partners are preferred. Finally, a federated Service Mesh helps manage intelligent traffic routing to optimize performance.

“Split-Brain” occurs when two clouds lose connection with each other and begin accepting divergent writes independently. The standard technical solution is an observer node or “Tie-Breaker” positioned in a neutral third location, which can be another cloud provider like Azure or an on-premises data center. This third voter gives the cluster an odd number of voting members, so only one side of a partition can form the majority that consensus protocols require; the other side stops accepting writes, which keeps the data from diverging and prevents database corruption.
