In the digital landscape of 2026, competition in the fintech sector is no longer played out solely on interest rates, but on the ability to intercept hyper-specific user demand. **Programmatic SEO** represents the only scalable lever for mortgage comparison portals that need to rank for thousands of long-tail queries like “Fixed rate mortgage Milan 200,000 euros” without the manual intervention of an army of copywriters. This technical guide explores the engineering architecture necessary to implement a secure, high-performance, data-driven programmatic SEO strategy, abandoning old monolithic CMSs in favor of Headless and Serverless solutions.

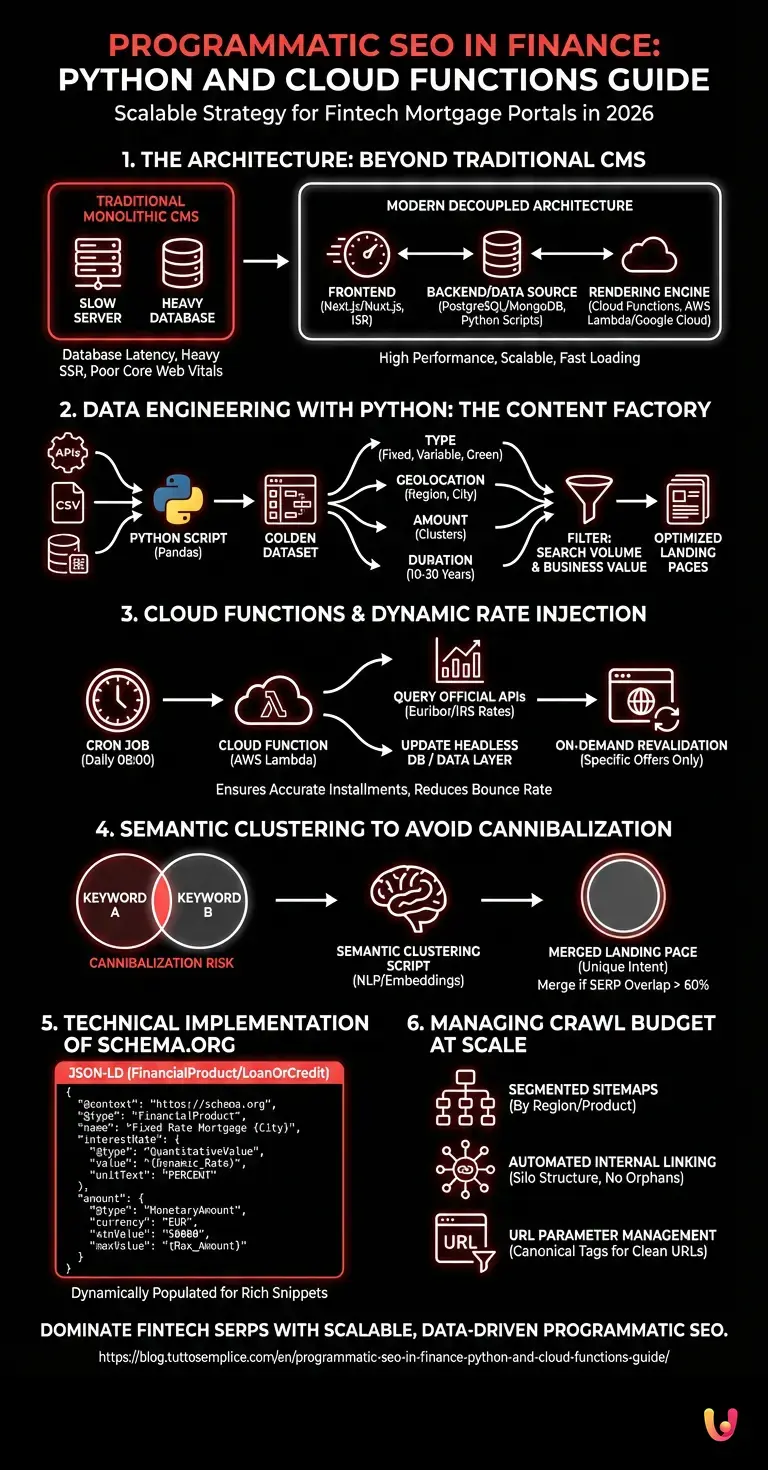

1. The Architecture: Beyond the Traditional CMS

To manage tens of thousands of dynamic landing pages, a standard WordPress installation is not sufficient. Database latency and the weight of per-request server-side rendering (SSR) would degrade **Core Web Vitals**, which Google uses as a ranking signal. The modern approach requires a decoupled architecture:

- Frontend: Frameworks like Next.js or Nuxt.js to leverage ISR (Incremental Static Regeneration).

- Backend/Data Source: A structured database (PostgreSQL or MongoDB) fed by Python scripts.

- Rendering Engine: Cloud Functions (AWS Lambda or Google Cloud Functions) for content generation and updates.

2. Data Engineering with Python: The Content Factory

The heart of **programmatic SEO** lies in the quality of the dataset. It is not about spamming Google’s index with empty pages, but about creating unique value. Using **Python** and libraries like Pandas, we can cross-reference different data sources to generate the “Golden Dataset”.

Generating Permutations

The Python script must manage combinatorial variables. For a mortgage portal, the key variables are:

- Type: Fixed Rate, Variable, Mixed, Green.

- Geolocation: Region, Province, City (focusing on capitals).

- Amount: Amount clusters (e.g., 100k, 150k, 200k).

- Duration: 10, 20, 30 years.

A simple iterative script could generate 50,000 combinations. However, software engineering requires us to filter these combinations by Search Volume and Business Value. It makes no sense to generate a page for “Mortgage 500 euros in Nowhere”.
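As a minimal sketch of this step, the script below builds every combination with `itertools.product` and then keeps only those above a search-volume threshold. The city list, the slug format, the `MIN_SEARCH_VOLUME` value and the `search_volume` mapping are illustrative assumptions, not values from a real keyword tool.

```python
from itertools import product

# Dimensions from the list above; the concrete values are illustrative.
MORTGAGE_TYPES = ["fixed", "variable", "mixed", "green"]
CITIES = ["Milan", "Rome", "Turin"]
AMOUNTS = [100_000, 150_000, 200_000]
DURATIONS = [10, 20, 30]

# Hypothetical threshold: minimum monthly searches for a page to be worth building.
MIN_SEARCH_VOLUME = 50


def generate_candidates():
    """Yield every combination of the four dimensions as a dict."""
    for mortgage_type, city, amount, years in product(
        MORTGAGE_TYPES, CITIES, AMOUNTS, DURATIONS
    ):
        yield {"type": mortgage_type, "city": city, "amount": amount, "years": years}


def slugify(candidate):
    """Build a URL slug such as 'fixed-mortgage-milan-200000-20-years'."""
    return (
        f"{candidate['type']}-mortgage-{candidate['city'].lower()}"
        f"-{candidate['amount']}-{candidate['years']}-years"
    )


def filter_candidates(candidates, search_volume, min_volume=MIN_SEARCH_VOLUME):
    """Keep only combinations whose slug has enough search volume.

    `search_volume` maps slug -> estimated monthly searches (assumed input,
    e.g. an export from a keyword tool). Unknown slugs count as zero.
    """
    return [c for c in candidates if search_volume.get(slugify(c), 0) >= min_volume]
```

A business-value score could be applied in the same way, as a second lookup in `filter_candidates`.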

3. Cloud Functions and Dynamic Rate Injection (Euribor/IRS)

The main problem with SEO in the credit sector is data obsolescence. A static article written two months ago quoting a fixed rate of 2.5% is useless today if the IRS (the Interest Rate Swap benchmark on which fixed-rate mortgages are priced) has risen since. This is where **Cloud Functions** (e.g., AWS Lambda) come into play.

Instead of regenerating the entire site every day, we can configure a serverless function that:

- Is triggered by a Cron Job every morning at 08:00.

- Queries official APIs (e.g., ECB or financial data providers) to get updated Euribor and IRS rates.

- Updates only the JSON fields in the Headless database or Data Layer.

- Triggers a selective regeneration (On-demand Revalidation) only for pages showing specific offers.

This ensures the user always sees the correctly calculated installment, increasing Time on Page and reducing Bounce Rate.
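The daily flow can be sketched roughly as follows. The installment uses the standard formula for a constant-payment (French amortization) loan. The page record shape, the `spread` field, the mapping of fixed offers to the IRS and variable offers to Euribor, and the four callables passed to `run_daily_update` are all assumptions made for illustration; mixed and green products would need their own pricing rule.

```python
def monthly_installment(principal, annual_rate_pct, years):
    """Constant monthly payment of a fully amortizing loan."""
    n = years * 12
    r = annual_rate_pct / 100 / 12
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


# Assumption: fixed-rate offers follow the IRS, variable-rate offers follow Euribor.
BENCHMARK_BY_TYPE = {"fixed": "irs", "variable": "euribor"}


def refresh_pages(pages, benchmarks):
    """Update rate and installment on each page record in place.

    Returns the slugs whose rate changed, i.e. the pages to revalidate.
    """
    changed = []
    for page in pages:
        benchmark = benchmarks[BENCHMARK_BY_TYPE[page["type"]]]
        new_rate = round(benchmark + page["spread"], 2)
        if new_rate != page.get("rate"):
            page["rate"] = new_rate
            page["installment"] = round(
                monthly_installment(page["amount"], new_rate, page["years"]), 2
            )
            changed.append(page["slug"])
    return changed


def run_daily_update(fetch_benchmarks, load_pages, save_pages, revalidate):
    """Body of the scheduled function.

    The four callables are hypothetical adapters for the rate API, the data
    layer and the frontend's on-demand revalidation hook.
    """
    pages = load_pages()
    changed = refresh_pages(pages, fetch_benchmarks())
    if changed:
        save_pages(pages)
        for slug in changed:
            revalidate(slug)
    return changed
```

Passing the adapters in as arguments keeps the pricing logic testable without network access. In a real Lambda, a thin `handler(event, context)` wrapper would supply them.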

4. Semantic Clustering to Avoid Cannibalization

One of the biggest risks of **programmatic SEO** is keyword cannibalization and the creation of “Thin Content” (low-value content). If we create a page for “Mortgage Milan” and one for “Home mortgage Milan”, Google might not understand which one to rank.

Algorithmic Solution

Before publishing, a **Semantic Clustering** script must be run. Using NLP (Natural Language Processing) APIs or local embedding models, we can group keywords that share the same search intent. If two permutations have a SERP overlap greater than 60%, they must be merged into a single landing page.
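One possible way to apply the 60% rule is sketched below. Overlap is defined here as the share of URLs two result lists have in common, relative to the shorter list; that definition is an assumption, as is the `serps` input (keyword mapped to its ranking URLs, for example from a SERP API). Keywords are merged transitively with a small union-find.

```python
def serp_overlap(urls_a, urls_b):
    """Share of common URLs, relative to the shorter result list."""
    a, b = set(urls_a), set(urls_b)
    if not a or not b:
        return 0.0
    return len(a & b) / min(len(a), len(b))


def cluster_keywords(serps, threshold=0.6):
    """Group keywords whose SERP overlap exceeds `threshold`.

    `serps` maps keyword -> list of ranking URLs (assumed input).
    Returns a sorted list of clusters, each a sorted list of keywords.
    """
    keywords = sorted(serps)
    parent = {k: k for k in keywords}

    def find(k):
        # Follow parent links to the cluster root, compressing the path.
        while parent[k] != k:
            parent[k] = parent[parent[k]]
            k = parent[k]
        return k

    for i, first in enumerate(keywords):
        for second in keywords[i + 1:]:
            if serp_overlap(serps[first], serps[second]) > threshold:
                parent[find(second)] = find(first)

    clusters = {}
    for k in keywords:
        clusters.setdefault(find(k), []).append(k)
    return sorted(clusters.values())
```

Each resulting cluster would map to a single landing page. The pairwise comparison is quadratic in the number of keywords, so a large keyword set would need batching or a pre-grouping step (for example by city).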

5. Technical Implementation of Schema.org (FinancialProduct)

To dominate SERPs in 2026, structured markup is mandatory. The classic Article schema is not enough. For credit, we must implement **FinancialProduct** and **LoanOrCredit**.

Here is how to structure the JSON-LD dynamically within templates. The object is typed as LoanOrCredit, a subtype of FinancialProduct, because schema.org defines the amount property on LoanOrCredit:

    {
      "@context": "https://schema.org",
      "@type": "LoanOrCredit",
      "name": "Fixed Rate Mortgage {City}",
      "interestRate": {
        "@type": "QuantitativeValue",
        "value": "{Dynamic_Rate}",
        "unitText": "PERCENT"
      },
      "amount": {
        "@type": "MonetaryAmount",
        "currency": "EUR",
        "minValue": "50000",
        "maxValue": "{Max_Amount}"
      }
    }

This code must be automatically injected by the backend at page rendering time, populating the variables in curly braces with fresh data retrieved from Cloud Functions.
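A minimal sketch of that injection step in Python follows. The function name and parameters are hypothetical. The sketch types the object as `LoanOrCredit`, a subtype of `FinancialProduct`, since that is the type on which schema.org defines `amount`. Building the object as a dict and serializing it with `json.dumps` avoids the quoting problems that string templating can cause.

```python
import json


def build_loan_jsonld(city, rate_pct, max_amount, min_amount=50_000):
    """Return the JSON-LD for one landing page as a <script> tag string."""
    data = {
        "@context": "https://schema.org",
        "@type": "LoanOrCredit",
        "name": f"Fixed Rate Mortgage {city}",
        "interestRate": {
            "@type": "QuantitativeValue",
            "value": rate_pct,
            "unitText": "PERCENT",
        },
        "amount": {
            "@type": "MonetaryAmount",
            "currency": "EUR",
            "minValue": min_amount,
            "maxValue": max_amount,
        },
    }
    # Escape "<" so that a value can never close the surrounding script tag.
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'
```

For example, `build_loan_jsonld("Milan", 3.15, 300_000)` returns a tag that the template can place in the page head.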

6. Managing Crawl Budget at Scale

When publishing 10,000 pages, **Crawl Budget** becomes the bottleneck. Googlebot will not crawl everything immediately. Essential strategies:

- Segmented Sitemaps: Do not use a single sitemap.xml. Create a sitemap index pointing to specific sitemaps by region or product type (e.g., sitemap-mortgages-lombardy.xml).

- Automated Internal Linking: Use scripts to create a silo structure. The “Mortgages Lombardy” page must automatically link to all Lombardy provincial capitals, and each city must link to the region and neighboring cities. No page should be an orphan.

- URL Parameter Management: Ensure search filters (e.g., ?min_price=…) do not generate duplicate indexable URLs by using the self-referencing canonical tag on clean programmatic pages.
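The sitemap and canonical points can be sketched with the Python standard library. The page record shape (`region`, `path`), the file naming and the `example.com` URLs are assumptions; the namespace is the one defined by the sitemaps.org protocol. A production version would also split any file that exceeds the protocol limit of 50,000 URLs.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
XML_HEADER = '<?xml version="1.0" encoding="UTF-8"?>\n'


def build_sitemaps(pages, base_url):
    """Split pages into one sitemap per region plus a sitemap index.

    `pages` is a list of dicts with 'region' and 'path' keys (assumed shape).
    Returns a dict mapping filename -> XML string.
    """
    by_region = defaultdict(list)
    for page in pages:
        by_region[page["region"]].append(page["path"])

    files = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for region in sorted(by_region):
        filename = f"sitemap-mortgages-{region}.xml"
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for path in sorted(by_region[region]):
            ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = base_url + path
        files[filename] = XML_HEADER + ET.tostring(urlset, encoding="unicode")
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/{filename}"
    files["sitemap.xml"] = XML_HEADER + ET.tostring(index, encoding="unicode")
    return files


def canonical_url(url):
    """Drop query string and fragment so filtered views point to the clean page."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
```

The value returned by `canonical_url` is what the template would put in the `href` of the page's `<link rel="canonical">` element.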

In Brief (TL;DR)

Decoupled and serverless architecture outperforms traditional CMSs for managing thousands of hyper-specific and high-performance financial landing pages.

Python scripts and Cloud Functions automate content generation and real-time updates of variable interest rates.

Semantic clustering and dynamic structured data optimize SERP ranking, preventing cannibalization and ensuring value for the user.

Conclusions

**Programmatic SEO** in the credit sector is not a shortcut to generate easy traffic, but a complex engineering discipline. It requires the fusion of backend development skills (Python, Cloud), data management, and advanced technical SEO. Those who manage to master automation while ensuring updated data (IRS/Euribor rates) and a fast user experience will build a competitive advantage that manually managed portals will find hard to match.

Frequently Asked Questions

A traditional monolithic CMS struggles to manage tens of thousands of dynamic pages without compromising load speed and Core Web Vitals. The Headless architecture, combined with modern frameworks like Next.js and Serverless functions, allows the frontend to be decoupled from the data. This ensures high performance thanks to incremental static regeneration and reduces database latency, which are crucial factors for ranking on Google in the competitive mortgage market and for handling high traffic volumes.

To avoid the obsolescence of financial data, such as Euribor or IRS rates, Cloud Functions triggered by scheduled processes are used. These functions query official APIs daily and update only specific fields in the database. Thanks to selective on-demand regeneration, the system updates exclusively the pages impacted by the rate change, ensuring the user always sees the correct installment without having to rebuild the entire portal every day, thus saving server resources.

Massive page creation carries the risk that Google may not know which URL to rank for similar search intents. The solution lies in preventive semantic clustering: using Python scripts and natural language processing algorithms, keywords are analyzed to group those with the same intent. If two variants show a significant SERP overlap, greater than 60 percent, it is necessary to merge them into a single comprehensive resource, also filtering out combinations with low search volume or poor commercial value.

To gain visibility in financial SERPs, generic markup for articles is not enough. It is fundamental to implement the FinancialProduct and LoanOrCredit types within the JSON-LD code. This structured data must be populated dynamically by the backend at the time of rendering, including precise information such as the variable or fixed interest rate, the currency, and minimum and maximum amount limits, thus facilitating the understanding of the specific product by search engines.

When publishing high volumes of URLs, Googlebot needs clear paths for efficient crawling. It is essential to segment XML Sitemaps by region or product type instead of using a single huge file. Furthermore, an automated silo internal linking structure must be implemented, where regional pages link to capitals and vice versa, ensuring that no orphan pages exist and using correct canonical tags to manage filter parameters in the URL and avoid duplicates.
