In Brief (TL;DR)

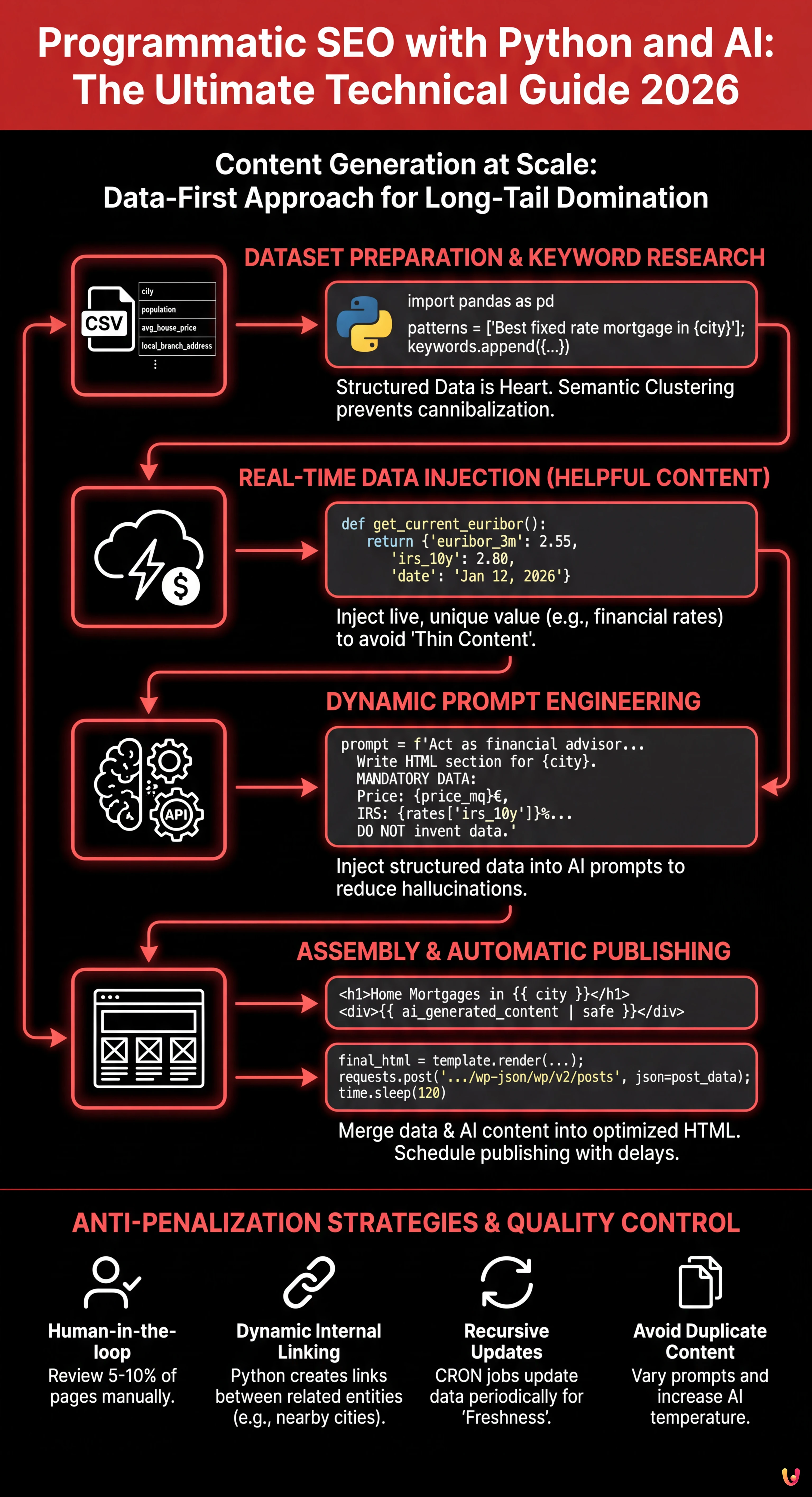

Modern Programmatic SEO leverages Python and AI to dominate long-tail queries through ethical and data-driven content.

A winning strategy requires real-time structured data injection to offer concrete value and avoid search engine penalties.

Combining dynamic prompt engineering with external data APIs turns raw datasets into unique landing pages optimized for local conversion.

The devil is in the details. 👇 Keep reading to discover the critical steps and practical tips to avoid mistakes.

It is 2026, and the digital marketing landscape has changed radically. Programmatic SEO, meaning content generation at scale with Python and modern Artificial Intelligence APIs, is no longer a technique reserved for giants like TripAdvisor or Yelp. It is now a necessity for anyone who wants to dominate SERPs on long-tail queries. However, the line between a winning strategy and penalized spam is thin. This technical guide explores how to build an ethical, data-driven, quality-led pSEO (Programmatic SEO) architecture.

What is Programmatic SEO and Why the “Data-First” Approach Wins

Programmatic SEO is the process of automatically creating landing pages at scale. It targets thousands of keyword variations with low competition but high conversion intent. In the past, pages were often duplicated by changing only the city name. The modern approach requires unique content that is semantically enriched and updated in real time.

Our practical case study concerns the financial sector: we will generate pages for the query “Fixed-rate mortgage [City]”. The goal is to provide real value by injecting up-to-date financial data (Euribor and IRS rates) that is current at the time the data is retrieved.

Prerequisites and Technology Stack

To follow this guide, you need the following stack:

- Python 3.11+: The logical engine of the operation.

- OpenAI API (GPT-4o or later): For narrative text generation and semantic analysis.

- Pandas: For dataset manipulation (the “database” of our variables).

- Jinja2: Templating engine to structure the HTML.

- WordPress REST API (or equivalent headless CMS): For automatic publishing.

Phase 1: Dataset Preparation and Automatic Keyword Research

The heart of pSEO is not AI but data. Without a structured dataset, AI will only produce hallucinations. We need a CSV containing the variables that make each page unique.

1.1 Dataset Structure (data.csv)

Let’s imagine a file with these columns:

- city: Milan, Rome, Naples…

- population: Demographic data (useful for context).

- avg_house_price: Average price per sqm (proprietary or scraped data).

- local_branch_address: Address of the local branch (if one exists).
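Before generating anything, it can pay to fail fast on a malformed dataset, because a single bad row is multiplied into bad pages. The following is a minimal sketch that uses only the standard library `csv` module. The column names mirror the list above. The helper name `validate_rows` and the specific checks (a non-empty, unique city and a positive numeric price) are assumptions of this example.

```python
import csv
import io

# Columns described in section 1.1; local_branch_address may legitimately be empty
REQUIRED_COLUMNS = {"city", "population", "avg_house_price", "local_branch_address"}


def validate_rows(csv_text: str) -> list[str]:
    """Return human-readable problems found in the dataset (an empty list means OK)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]

    problems = []
    seen = set()
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        city = (row["city"] or "").strip()
        if not city:
            problems.append(f"line {line_no}: empty city")
        elif city.lower() in seen:
            problems.append(f"line {line_no}: duplicate city {city!r}")
        seen.add(city.lower())
        # Price must parse as a positive number (None/empty/text all fail here)
        try:
            if float(row["avg_house_price"]) <= 0:
                raise ValueError
        except (TypeError, ValueError):
            problems.append(f"line {line_no}: invalid avg_house_price {row['avg_house_price']!r}")
    return problems
```

When the function is called on the contents of `data.csv`, an empty return value means every check passed. Anything else is worth fixing before the generation loop runs.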

1.2 Semantic Clustering with Python

We don’t want to cannibalize keywords, so each variant for a city should target a distinct intent rather than a near-synonym of another variant. Here is a conceptual snippet that generates the main keyword modifiers from intent-based patterns:

```python
import pandas as pd

# Load base data (the dataset described in 1.1)
df = pd.read_csv('data.csv')

# Define intent-based keyword patterns
patterns = [
    "Best fixed rate mortgage in {city}",
    "Home mortgage quote {city} updated rates",
    "House price and mortgage trends in {city}",
]

# Generate one keyword per (city, pattern) combination
keywords = []
for _, row in df.iterrows():
    for p in patterns:
        keywords.append({
            "city": row['city'],
            "keyword": p.format(city=row['city']),
            "data_point": row['avg_house_price'],
        })

print(f"Generated {len(keywords)} potential landing pages.")
```
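The snippet above only generates combinations. It does not yet check whether the variants for a city are too close to each other. One cheap proxy is the standard library `difflib.SequenceMatcher`. It measures lexical similarity only, so it is a stand-in for true semantic clustering, which would typically use embeddings. The 0.85 threshold and the helper name `dedupe_keywords` are assumptions to tune on your own data. Rows for different cities are deliberately never compared, because sharing a pattern across cities is the whole point.

```python
from collections import defaultdict
from difflib import SequenceMatcher


def dedupe_keywords(rows: list[dict], threshold: float = 0.85) -> list[dict]:
    """Drop rows whose keyword is lexically too close to an earlier keyword for the SAME city."""
    kept_by_city: dict[str, list[str]] = defaultdict(list)
    result = []
    for row in rows:
        # Normalise case and whitespace before comparing
        norm = " ".join(row["keyword"].lower().split())
        seen = kept_by_city[row["city"]]
        if all(SequenceMatcher(None, norm, k).ratio() < threshold for k in seen):
            seen.append(norm)
            result.append(row)
    return result
```

Because comparisons happen only within a city, the cost stays small even with thousands of cities. `keywords = dedupe_keywords(keywords)` can run right after the generation loop.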

Phase 2: Real-Time Data Injection (The “Helpful Content” Element)

To avoid Google’s “Thin Content” penalty, the page must offer value that AI alone cannot invent. In this case, that value is updated interest rates.

Let’s create a Python function that retrieves the Euribor/IRS rate for the day. This data will be passed to the AI prompt to comment on mortgage affordability today.

```python
def get_current_euribor():
    # Simulation of API call to financial data provider
    # In production use: requests.get('https://api.financial-data.com/euribor')
    return {
        "euribor_3m": 2.55,
        "irs_10y": 2.80,
        "date": "January 12, 2026",
    }


financial_data = get_current_euribor()
```
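The comment in the function above points to a real provider call. Below is a minimal sketch of that production path. It assumes a hypothetical JSON endpoint (the URL from the comment) that returns the same keys as the simulated dict. It uses the standard library `urllib.request` instead of `requests`. The sanity bounds on the rates are arbitrary assumptions, meant to stop a broken feed before it reaches thousands of pages.

```python
import json
import urllib.request
from datetime import date

# Hypothetical endpoint (from the comment above); replace with your provider's real URL
RATES_URL = "https://api.financial-data.com/euribor"


def parse_rates(payload: dict) -> dict:
    """Validate a provider payload and normalise it to the dict used by the prompt."""
    missing = [k for k in ("euribor_3m", "irs_10y") if k not in payload]
    if missing:
        raise ValueError(f"missing fields in rate payload: {missing}")
    rates = {k: float(payload[k]) for k in ("euribor_3m", "irs_10y")}
    # Assumed sanity bounds: reject obviously broken values
    for key, value in rates.items():
        if not -5.0 <= value <= 20.0:
            raise ValueError(f"implausible value for {key}: {value}")
    # Fall back to today's date if the provider does not send one
    rates["date"] = payload.get("date") or date.today().strftime("%B %d, %Y")
    return rates


def fetch_rates(url: str = RATES_URL, timeout: float = 10.0) -> dict:
    """Download and validate today's rates; raises on network or data errors."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_rates(json.load(resp))
```

`financial_data = fetch_rates()` would then replace the simulated call. If it raises, aborting the run is arguably safer than publishing stale or broken numbers.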

Phase 3: Dynamic Prompt Engineering

Don’t ask ChatGPT to “write an article”. Build the prompt by injecting structured data. This reduces hallucinations and ensures each page speaks specifically about the city and real rates.

Here is how to structure the API call:

```python
import openai

# openai>=1.0 client; with no api_key argument it reads the OPENAI_API_KEY environment variable
client = openai.OpenAI()


def generate_content(city, price_mq, rates):
    """Build a data-driven prompt for one city and return the generated HTML fragment."""
    prompt = f"""
Act as an expert financial advisor for the Italian real estate market.
Write an HTML section (h2, p, ul) for a landing page dedicated to mortgages in {city}.

MANDATORY DATA TO INCLUDE:
- City: {city}
- Average house price: {price_mq}€/sqm
- 10-Year IRS Rate (Today): {rates['irs_10y']}%
- Date of survey: {rates['date']}

INSTRUCTIONS:
1. Analyze whether it is worth buying a house in {city} based on the price per sqm provided.
2. Explain how the IRS rate of {rates['irs_10y']}% impacts an average monthly installment for this specific city.
3. Use a professional but accessible tone.
4. DO NOT invent data not provided.
5. Return only the HTML fragment, without Markdown code fences.
"""
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=[{"role": "user", "content": prompt}],
        temperature=0.7,
    )
    return response.choices[0].message.content
```
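Instruction 2 asks the model to reason about a monthly installment, but the prompt supplies no loan amount, and language models are unreliable at arithmetic. One option is to compute the figure in Python and pass it to the prompt as another mandatory data point. The sketch uses the standard fixed-rate (French) amortization formula M = P·r / (1 − (1 + r)^(−n)), where r is the monthly rate and n the number of monthly payments. The 80 sqm property, 80% loan-to-value, 25-year term and 1-point bank spread over the IRS rate are illustrative assumptions, not market data. The helper names are hypothetical.

```python
def monthly_installment(principal: float, annual_rate_pct: float, years: int) -> float:
    """Constant monthly payment of a fixed-rate loan (French amortization)."""
    n = years * 12                  # number of monthly payments
    r = annual_rate_pct / 100 / 12  # monthly interest rate
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


def example_installment(price_sqm: float, irs_10y: float, sqm: float = 80,
                        ltv: float = 0.8, spread_pct: float = 1.0, years: int = 25) -> float:
    """Installment for a hypothetical typical purchase; every default is an assumption."""
    principal = price_sqm * sqm * ltv  # loan amount
    rate = irs_10y + spread_pct        # IRS plus an assumed bank spread
    return round(monthly_installment(principal, rate, years), 2)
```

For example, `example_installment(row['avg_house_price'], financial_data['irs_10y'])` could be added as one more line under MANDATORY DATA TO INCLUDE. The model then explains the number instead of calculating it.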

Phase 4: Assembly and Automatic Publishing

Once the text content (“Body Content”) is generated, we need to insert it into an HTML template optimized for technical SEO (Schema Markup, Meta Tags, etc.) and publish it.

4.1 The Jinja2 Template

We use Jinja2 to separate logic from structure. The page_template.html template might look like this:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Fixed Rate Mortgage in {{ city }} - Update {{ date }}</title>
  <meta name="description" content="Discover current mortgage rates in {{ city }}. Local real estate market analysis and quotes based on IRS at {{ irs_rate }}%.">
</head>
<body>
  <h1>Home Mortgages in {{ city }}: Analysis and Rates {{ year }}</h1>
  <div class="dynamic-content">
    {{ ai_generated_content | safe }}
  </div>
  <div class="data-widget">
    <h3>Market Data in {{ city }}</h3>
    <ul>
      <li><strong>Price sqm:</strong> {{ price }} €</li>
      <li><strong>Trend:</strong> {{ trend }}</li>
    </ul>
  </div>
</body>
</html>
```
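The introduction to this phase mentions Schema Markup, but the template contains none. Below is a minimal sketch that assumes a schema.org `WebPage` with `name`, `description` and `dateModified` suits these pages. The resulting string could be passed to `template.render()` as an extra variable (hypothetically `schema_json`) and printed inside `<head>` with `{{ schema_json | safe }}`. The helper name `build_json_ld` is also hypothetical.

```python
import json
from datetime import date


def build_json_ld(city: str, irs_rate: float, modified: date) -> str:
    """Return a <script type="application/ld+json"> block describing one landing page."""
    data = {
        "@context": "https://schema.org",
        "@type": "WebPage",
        "name": f"Fixed Rate Mortgage in {city}",
        "description": (f"Current mortgage rates in {city}, with a local market analysis "
                        f"based on a 10-year IRS rate of {irs_rate}%."),
        "dateModified": modified.isoformat(),  # ISO 8601 date
    }
    # Escape "<" so a value containing "</script>" cannot close the tag early
    payload = json.dumps(data, ensure_ascii=False).replace("<", "\\u003c")
    return f'<script type="application/ld+json">{payload}</script>'
```

Because `financial_data['date']` is a display string rather than a `date` object, the sketch takes the modification date separately. One example is `build_json_ld(row['city'], financial_data['irs_10y'], date.today())`.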

4.2 The Publishing Script

Finally, we iterate over the DataFrame and publish. Warning: do not publish 5,000 pages in one day, because Google might interpret such a sudden spike as spam. Add a delay (sleep) between iterations or schedule publication over time.

```python
import time

import requests
from jinja2 import Template

# Load template (explicit UTF-8 because the template contains the € sign)
with open('page_template.html', encoding='utf-8') as f:
    template = Template(f.read())

for _, row in df.iterrows():
    # 1. Generate AI content
    ai_text = generate_content(row['city'], row['avg_house_price'], financial_data)

    # 2. Render complete HTML
    final_html = template.render(
        city=row['city'],
        date=financial_data['date'],
        irs_rate=financial_data['irs_10y'],
        year="2026",
        price=row['avg_house_price'],
        trend="Stable",
        ai_generated_content=ai_text,
    )

    # 3. Publish to WordPress (Simplified example)
    post_data = {
        'title': f"Fixed Rate Mortgage in {row['city']}",
        'content': final_html,
        'status': 'draft',  # Better to save as draft for human spot checks
    }
    # requests.post('https://yoursite.com/wp-json/wp/v2/posts', json=post_data, auth=...)

    print(f"Page for {row['city']} created.")
    time.sleep(120)  # 2-minute pause between generations
```
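The fixed two-minute pause still allows up to roughly 720 pages a day (24 × 60 / 2), so it mainly throttles the API calls rather than spreading publication. Once the drafts have been reviewed, one option is to send each page with a future timestamp in the WordPress post `date` field and `status` set to `'future'`, which is how WordPress represents scheduled posts. The sketch below computes those timestamps. The 20-pages-per-day cap, the 09:00 to 18:00 window and the helper name `drip_schedule` are arbitrary assumptions.

```python
from datetime import date, datetime, timedelta


def drip_schedule(n_pages: int, first_day: date, per_day: int = 20,
                  first_hour: int = 9, last_hour: int = 18) -> list[str]:
    """Spread n_pages over consecutive days, per_day per day, evenly between two hours.

    Returns ISO 8601 strings for the WordPress post "date" field (site timezone).
    """
    if per_day < 1:
        raise ValueError("per_day must be at least 1")
    gap = timedelta(hours=last_hour - first_hour) / per_day  # time between two posts
    slots = []
    for i in range(n_pages):
        day = first_day + timedelta(days=i // per_day)
        at = datetime(day.year, day.month, day.day, first_hour) + gap * (i % per_day)
        slots.append(at.isoformat(timespec="seconds"))
    return slots
```

Inside the loop, `post_data['date']` would take the i-th slot and `post_data['status']` would become `'future'`. If you prefer UTC, WordPress also accepts a `date_gmt` field.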

Anti-Penalization Strategies and Quality Control

Programmatic SEO fails when quality control is missing. Here are the golden rules for 2026:

- Human-in-the-loop: Never publish 100% automatically without spot checks. Review at least 5-10% of the generated pages.

- Dynamic Internal Linking: Use Python to create links between nearby cities (e.g., the “Monza” page must link to “Milan”). This creates strong topical clusters.

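- Recursive Updates: The script shouldn’t run just once. Configure a cron job that refreshes the rates every week: the date in the title, the IRS figure in the meta description and the numbers in the body text. For time-sensitive queries like current mortgage rates, freshness can be a ranking advantage.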

- Avoid Duplicate Content: If two cities have identical data, the AI might generate similar text. Vary the prompts by geographic region, or raise the API “Temperature”. Keep in mind that a higher temperature also makes invented details more likely.
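To catch near-identical pages before they go live, one approach is to compare their visible text using word shingles (overlapping k-word sequences) and Jaccard similarity. Below is a minimal sketch. The 5-word shingle size and the 0.8 threshold are assumptions to calibrate on pages you have reviewed by hand. The tag-stripping regex is a rough approximation, not an HTML parser.

```python
import re
from itertools import combinations


def shingles(html: str, k: int = 5) -> set[tuple[str, ...]]:
    """Word k-grams of the page text after a rough tag strip."""
    words = re.sub(r"<[^>]+>", " ", html).lower().split()
    return {tuple(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union (1.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def near_duplicate_pairs(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """Return (page_a, page_b, similarity) for every pair at or above the threshold."""
    sigs = {name: shingles(html) for name, html in pages.items()}
    pairs = []
    for a, b in combinations(sigs, 2):
        score = jaccard(sigs[a], sigs[b])
        if score >= threshold:
            pairs.append((a, b, round(score, 3)))
    return pairs
```

The pairwise loop is quadratic in the number of pages. For many thousands of pages, MinHash with locality-sensitive hashing is the usual way to approximate the same comparison.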

Conclusions

Implementing a Programmatic SEO strategy in 2026 requires more software engineering skill than traditional copywriting. Python manages the structured data and AI APIs generate the contextual narrative. Together, they let organic visibility scale far beyond what manual writing allows. However, always remember that the goal is to answer the user’s search intent better than a static page would, by providing hyper-local, up-to-date data.

Frequently Asked Questions

Programmatic SEO is an advanced technique that uses code and automation to generate thousands of unique landing pages at scale, targeted at long-tail keywords. Traditional SEO involves manually writing every single article. This approach instead leverages structured datasets and artificial intelligence to create content that is massive in volume yet still relevant. In 2026, the key difference lies in the Data-First approach. It is not about duplicating pages but about semantically enriching them with real-time data to satisfy specific local search intents.

To implement an effective pSEO architecture, a well-defined technology stack is necessary. The heart of the system is Python, used for the automation logic, alongside the Pandas library for managing and cleaning the dataset of variables. For text generation, modern Artificial Intelligence APIs such as GPT-4o are indispensable, while Jinja2 handles HTML templating. Finally, a REST API connection to a CMS like WordPress manages the automatic publishing of the generated content.

To avoid penalties for spam or low-value content (Thin Content), it is fundamental to inject unique, useful data that AI cannot invent, such as updated financial rates or specific local statistics. A Human-in-the-loop strategy is also necessary, with a sample of the generated pages reviewed manually. Other essential practices include recursive data updates via periodic scripts and a dynamic internal linking structure that logically connects related pages.

Real-time data injection is the key element that turns an automatically generated page into a valuable resource for the user (Helpful Content). Inserting dynamic information, such as today’s Euribor or IRS rate, keeps the content fresh and accurate as long as the data is refreshed regularly. This approach reduces artificial intelligence hallucinations. It also signals to search engines that the page offers an up-to-date service, which can improve rankings and user trust.

An effective prompt for pSEO must not be generic; it must include rigid instructions and contextual data. Instead of simply asking the AI to write a text, pass it the exact variables extracted from the dataset, such as the city name, the price per square meter or today’s date. It is advisable to define the AI’s role, for example as an expert consultant, and to impose constraints on the HTML structure of the output. This guide calls the method Dynamic Prompt Engineering. It ensures that each page variant is specific rather than a near-duplicate of the others.

Did you find this article helpful? Is there another topic you'd like to see me cover?

Write it in the comments below! I take inspiration directly from your suggestions.