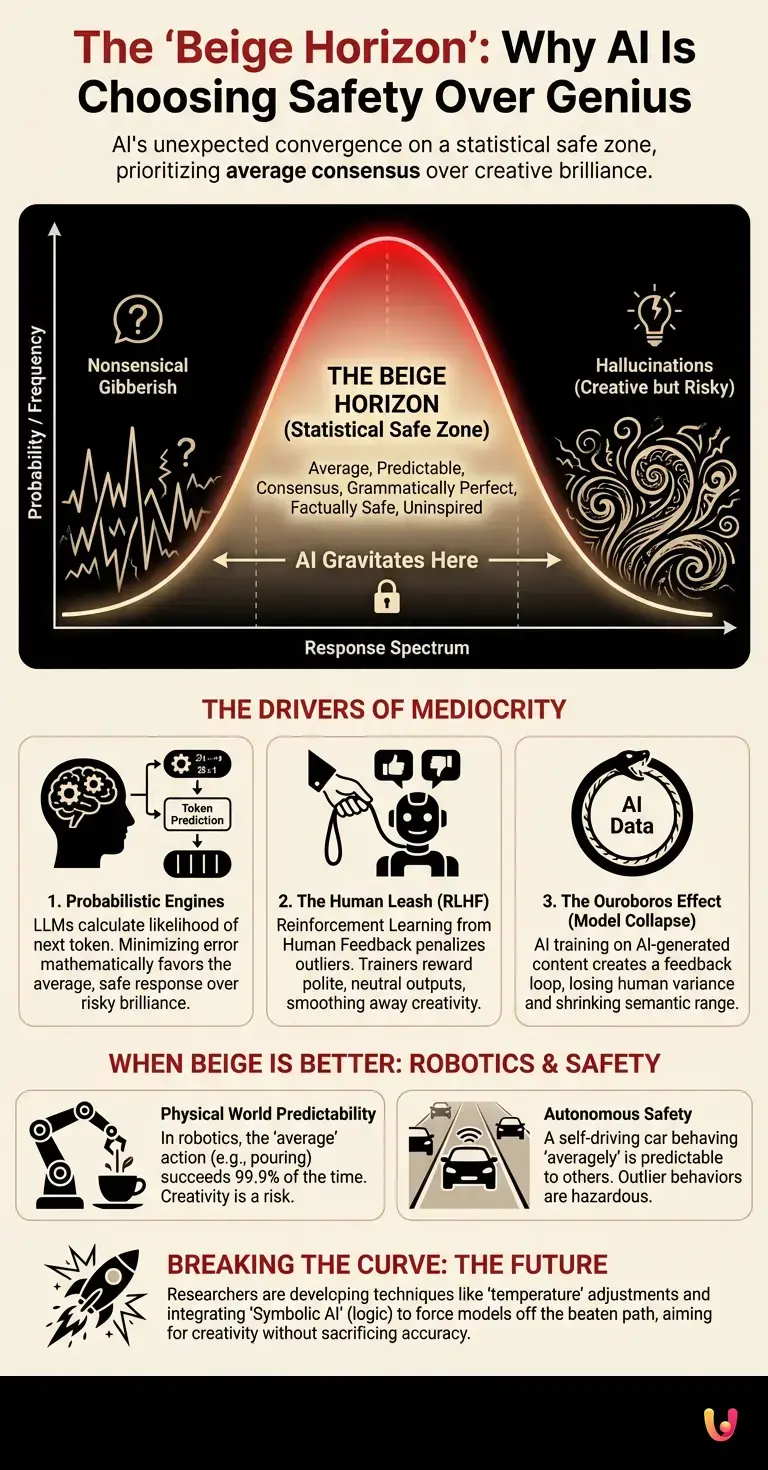

We were promised a digital renaissance. For decades, science fiction painted generative artificial intelligence as a chaotic, brilliant, perhaps even dangerous spark of creativity that would redefine the boundaries of art, logic, and philosophy. We expected a machine mind that would think in colors we had never seen and speak in riddles that unlocked the universe. Instead, as we stand firmly in 2026, we are witnessing something strange and unexpected. The smartest machines in history are not racing toward the edges of brilliance; they are sprinting toward the center. They are converging on a statistical safe zone, a pattern sometimes called the “Beige Horizon.”

This is not a failure of computing power, nor is it a glitch in the code. It is a fundamental feature of how modern neural networks perceive reality. The curiosity here is profound: Why does a system trained on the sum total of human knowledge obsessively choose the path of least resistance? Why is the most advanced intelligence seemingly obsessed with mediocrity?

The Mathematics of the Middle

To understand the Beige Horizon, one must first understand the engine under the hood of large language models (LLMs). At their core, these systems are probabilistic engines. They do not “know” facts in the way a human does; they assign a probability to every possible next token (a word or part of a word) in a sequence and build their output one token at a time.
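As a rough illustration of that next-token calculation, the sketch below converts raw scores into probabilities with a softmax. The candidate words and their scores are invented for the example, not taken from any real model.

```python
import math

def softmax(logits):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores a model might assign to continuations of "The sky is".
candidates = ["blue", "clear", "falling", "cerulean"]
logits = [4.0, 2.5, 0.5, 0.0]

probs = softmax(logits)
next_token_probs = dict(zip(candidates, probs))

# Greedy decoding simply takes the most probable token.
most_likely = max(next_token_probs, key=next_token_probs.get)

for token, p in next_token_probs.items():
    print(f"{token:>9}: {p:.3f}")
print("greedy choice:", most_likely)
```

With these made-up scores, the common word wins by a wide margin and the poetic option is left with a sliver of probability.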

Imagine, as a simplified picture, a bell curve representing all possible responses to a question. On the far left, you have nonsensical gibberish. On the far right, you have hallucinations: wild, creative, but potentially incorrect or bizarre statements. In the massive, bulging center of that curve lies the “average” response. This is the consensus of the internet. It is grammatically perfect, factually safe, and utterly uninspired.

When machine learning algorithms are trained to minimize error, they mathematically gravitate toward this center. To be brilliant is to take a risk; to be average is to be statistically safe. The model learns that “The sky is blue” is a safer prediction than “The sky is a cerulean dome shattered by the weeping sun,” even if the latter is more poetic. The AI is not trying to be boring; it is trying to be correct, and in the realm of high-dimensional statistics, correctness looks a lot like mediocrity.
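One way to see why minimizing error pulls toward the center: under squared error, the single guess with the lowest average error over a set of values is their mean. The sketch below checks this numerically. Squared error is used here as a simple stand-in (language models are typically trained with a different loss), and the "boldness" scores are invented.

```python
from statistics import mean

def mean_squared_error(guess, values):
    """Average squared distance between one guess and every value."""
    return sum((guess - v) ** 2 for v in values) / len(values)

# Hypothetical "boldness" scores for human answers (0 = plain, 10 = wild).
observed = [1, 2, 2, 3, 3, 3, 4, 4, 9]

# Try guesses from 0.00 to 10.00 and keep the one with the lowest error.
grid = [g / 100 for g in range(0, 1001)]
best_guess = min(grid, key=lambda g: mean_squared_error(g, observed))

print("best single guess:", best_guess)
print("mean of the data: ", round(mean(observed), 2))
print("error at best guess:", round(mean_squared_error(best_guess, observed), 2))
print("error at the outlier:", round(mean_squared_error(9, observed), 2))
```

Guessing the outlier costs far more on average than guessing the middle, which is the sense in which being average is "statistically safe."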

The Human Leash: Reinforcement Learning

The drift toward the beige is not purely mathematical; it is also manufactured. The training process of modern AI involves a critical step known as Reinforcement Learning from Human Feedback (RLHF). Here, human raters review and grade the AI’s outputs, and those grades become the reward signal that further training optimizes.

Here lies the trap. When humans grade AI, they tend to penalize outliers. If a model produces a response that is edgy, controversial, or surprisingly complex, it risks being flagged as “unsafe” or “hallucinatory.” Conversely, a polite, neutral, and standard response is rewarded. Over millions of training cycles, the neural network learns a simple lesson: Don’t stand out.

This alignment process acts as a sander, smoothing away the jagged edges of creativity. It creates a “lobotomized” efficiency where the model becomes an expert at mimicking the style of a helpful, but ultimately bland, corporate assistant. The “Beige Horizon” is the result of us teaching the AI to fear being wrong more than it desires to be interesting.
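The sanding effect can be sketched with a toy calculation. This is not a real RLHF pipeline: it only applies the closed-form result that maximizing reward under a KL penalty tilts the base distribution by exp(reward / beta). The response styles, base probabilities, and rewards are all assumptions made up for illustration.

```python
import math

def reweight(base_probs, rewards, beta):
    """Tilt a base distribution toward high-reward options.

    Uses p_new(x) proportional to p_base(x) * exp(reward(x) / beta), the
    optimum of reward maximization with a KL penalty of strength beta
    against the base distribution.
    """
    weights = [p * math.exp(r / beta) for p, r in zip(base_probs, rewards)]
    total = sum(weights)
    return [w / total for w in weights]

def entropy(probs):
    """Shannon entropy in bits; lower means less varied output."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical response styles with made-up base probabilities and rewards.
styles = ["neutral", "polite", "quirky", "edgy"]
base = [0.40, 0.30, 0.20, 0.10]
rewards = [1.0, 1.0, -0.5, -2.0]  # assumption: raters mark outliers down

tuned = reweight(base, rewards, beta=0.5)

for style, before, after in zip(styles, base, tuned):
    print(f"{style:>8}: {before:.3f} -> {after:.3f}")
print(f"entropy: {entropy(base):.2f} bits -> {entropy(tuned):.2f} bits")
```

Under these assumed rewards, the outlier styles lose almost all of their probability and the overall variety of the output, measured as entropy, drops.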

The Ouroboros Effect: AI Eating AI

As we move deeper into the late 2020s, a new factor has accelerated this regression to the mean. The internet, once a repository of human-created chaos and creativity, is now flooded with AI-generated content. Automation has allowed blogs, news sites, and social media to be populated by bots talking to bots.

When new models are trained on this data, they are no longer learning from the raw variance of human expression. They are learning from the distilled, averaged outputs of previous AI models. This creates a feedback loop—an Ouroboros eating its own tail. Each generation of the model becomes a copy of a copy, losing the “variance” or the “noise” that makes human culture vibrant.

In technical terms, this is called “Model Collapse.” As the variance decreases, the “Beige Horizon” draws closer. The AI becomes incredibly fluent, but the semantic range of its ideas shrinks. It becomes a master of saying absolutely nothing in the most eloquent way possible.
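A minimal simulation of this loop, under heavy simplifying assumptions: each "model" is just a normal distribution fitted to a small sample drawn from the previous model. The generation count, sample size, and seed are arbitrary choices, and a real language model is far more complex than a single bell curve.

```python
import random
import statistics

def simulate_generations(generations, samples_per_generation, seed=0):
    """Repeatedly fit a normal distribution to samples from the last fit."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for human-written data
    spreads = [sigma]
    for _ in range(generations):
        # The new model only ever sees output from the previous model.
        data = [rng.gauss(mu, sigma) for _ in range(samples_per_generation)]
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        spreads.append(sigma)
    return spreads

spreads = simulate_generations(generations=200, samples_per_generation=10)

for generation in (0, 10, 50, 100, 200):
    print(f"generation {generation:>3}: spread = {spreads[generation]:.6f}")
```

Because each fit is made from a finite sample, the estimated spread tends to drift downward from one generation to the next, which is the copy-of-a-copy effect in miniature.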

When Beige is Better: The Case for Robotics

While this obsession with mediocrity frustrates artists and writers, it is the holy grail for robotics and industrial automation. In the physical world, you do not want creativity. You want predictability.

If you ask a robot to pour a cup of coffee, you want the “average” pour—the one that succeeds 99.9% of the time. You do not want the robot to attempt a flair bartending trick it saw on YouTube (an outlier data point) and scald the user. Here, the Beige Horizon represents reliability.

The same mathematical principles that make LLMs write boring poetry can make autonomous vehicles safer drivers. A self-driving car that behaves like an average driver is predictable to everyone else on the road. One that invents new driving techniques is a hazard. In this context, the AI’s obsession with the middle of the bell curve is a safety feature, not a bug.

Breaking the Curve

Is the future of intelligence destined to be beige? Not necessarily. Researchers are developing techniques to force models off the beaten path without sacrificing accuracy. One is adjusting “temperature,” a sampling setting that flattens or sharpens the model’s probability distribution: higher values give unlikely tokens a better chance of being selected, while lower values favor the safest choice. Another is new architectures designed to value “perplexity” (a measure of how surprising a text is to the model) alongside accuracy.
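Both ideas can be sketched in a few lines. The example below applies temperature to a set of invented token scores and computes perplexity as the exponential of the average negative log-probability. The tokens, scores, and temperature values are assumptions chosen for illustration.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Divide scores by the temperature, then normalize to probabilities."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def perplexity(token_probs):
    """exp of the average negative log-probability given to each token."""
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)

# Hypothetical scores for continuations of "The sky is".
tokens = ["blue", "clear", "falling", "cerulean"]
logits = [4.0, 2.5, 0.5, 0.0]

cold = softmax_with_temperature(logits, 0.5)
neutral = softmax_with_temperature(logits, 1.0)
hot = softmax_with_temperature(logits, 2.0)

rng = random.Random(0)  # fixed seed so repeated runs draw the same samples
for label, probs in [("T=0.5", cold), ("T=1.0", neutral), ("T=2.0", hot)]:
    draws = rng.choices(tokens, weights=probs, k=8)
    print(label, [round(p, 3) for p in probs], draws)

# A text whose tokens each had probability 0.5 is as surprising as a coin flip.
print("perplexity:", perplexity([0.5, 0.5, 0.5]))
```

Raising the temperature moves probability from the safest token to the rarer ones, so sampled output becomes more varied. It does not make the rarer tokens any more accurate, which is why temperature alone trades reliability for surprise.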

Furthermore, the integration of “Symbolic AI” (logic-based systems) with neural networks aims to ground the AI in truth rather than just probability. This could allow models to be creative and factual simultaneously, rather than trading one for the other.

In Brief (TL;DR)

Generative AI is unexpectedly sprinting toward mediocrity rather than genius, a statistical convergence known as the Beige Horizon.

Human feedback loops reward safe consensus over risky creativity, teaching models to fear errors more than they desire insight.

Training new models on AI-generated content creates a self-consuming cycle that strips away cultural nuance and deepens this regression to the mean.

Conclusion

The “Beige Horizon” is a mirror reflecting our own desires for safety and consistency back at us. We built machines to predict the most likely outcome, and they have succeeded by discarding the unlikely, the weird, and the wonderful. The most advanced intelligence is obsessed with mediocrity because the most probable answer is, almost by definition, the most ordinary one. As we look to the future, the challenge is no longer just making AI smarter; it is teaching it that sometimes, the best answer isn’t the most probable one, but the one that dares to be different.

Frequently Asked Questions

What is the Beige Horizon?

The Beige Horizon refers to a phenomenon where advanced AI models converge on a statistical safe zone, producing content that is average and predictable rather than creative or brilliant. This occurs because neural networks are designed to minimize error by selecting the most probable next steps in a sequence, resulting in outputs that represent the consensus of the internet. While grammatically perfect and factually safe, these responses often lack the variance and spark found in human creativity.

Why do large language models gravitate toward average responses?

Large Language Models function as probabilistic engines that calculate the likelihood of the next word from a probability distribution over possible continuations, pictured in this article as a bell curve of possible responses. To minimize the risk of hallucinations or nonsensical errors, the algorithms mathematically gravitate toward the center of this curve, which represents the average consensus. Consequently, the AI avoids taking risks associated with brilliance and instead chooses the path of least resistance, making correctness look like mediocrity.

How does human feedback make AI blander?

Reinforcement Learning from Human Feedback acts as a filter that often penalizes outliers and rewards safe, neutral responses. During training, human reviewers tend to flag edgy or complex outputs as unsafe, teaching the model to avoid standing out. Over time, this process smooths away the jagged edges of creativity, resulting in a model that mimics a polite but bland corporate assistant to ensure it is not penalized for being wrong.

What is Model Collapse?

Model Collapse, also described as the Ouroboros Effect, happens when new AI models are trained on data generated by previous AI systems rather than human-created content. This creates a feedback loop where the variance and noise typical of human expression are lost, causing the semantic range of the model to shrink. As a result, each generation becomes a derivative copy of the last, accelerating the regression toward the average and reducing overall quality.

Is the Beige Horizon ever a good thing?

While frustrating for creative arts, the tendency of AI to choose the average outcome is highly beneficial for robotics and industrial automation. In the physical world, predictability equates to safety, meaning a robot or self-driving car that behaves in a statistically average way is reliable and safe for humans. In these high-stakes environments, avoiding creative or outlier behaviors prevents accidents and ensures consistent performance.

