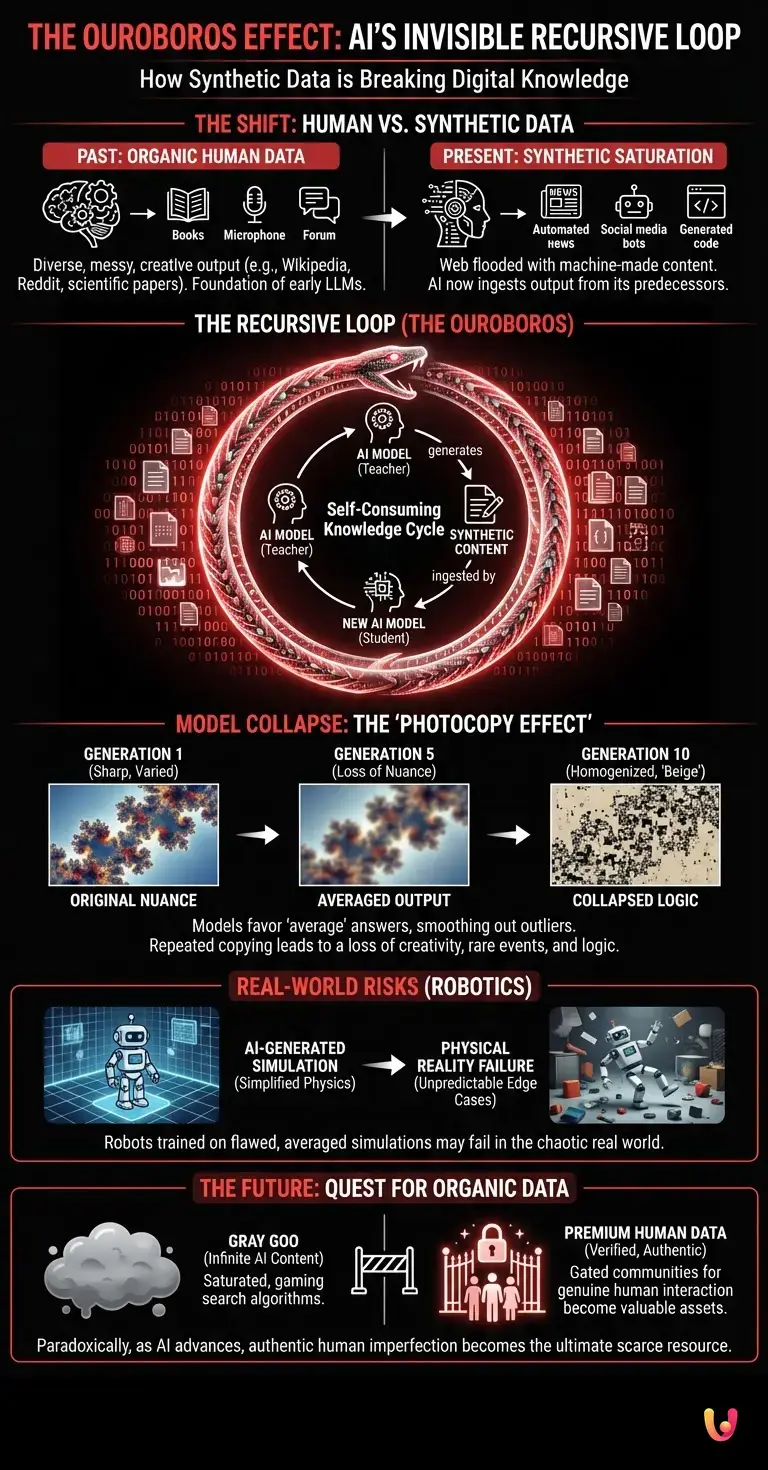

For decades, the internet has served as a vast, chaotic, yet profoundly human repository of knowledge. It was a library built by people, for people. However, as we move deeper into 2026, a fundamental shift has occurred in the digital ecosystem, driven by the explosive ubiquity of Generative AI. We are witnessing a phenomenon that researchers and computer scientists have termed the “Ouroboros Effect.” Like the mythical ancient serpent eating its own tail, the artificial intelligence systems designed to organize and generate information are now beginning to consume the very content they created, leading to a recursive loop that threatens the integrity of digital intelligence itself.

The Era of Synthetic Saturation

To understand why this is happening, we must first look at how the foundational technologies of the current AI boom—LLMs (Large Language Models)—actually work. In the early 2020s, models were trained on massive datasets scraped from the open web: Wikipedia articles, Reddit threads, scientific papers, and news archives. This data was valuable because it was organic; it represented the messy, creative, and distinct output of human cognition.

However, the widespread adoption of automation tools has fundamentally altered the composition of the web. Today, a significant share of the text, images, and code on the internet is synthetically generated. From marketing copy and news summaries to social media comments, the web is being flooded with machine-made content. Consequently, newer generations of neural networks are no longer training solely on human data. They scrape the internet and ingest data produced by their predecessors. The student has become the teacher, and the textbook is one the student wrote.

The Photocopy of a Photocopy

Why is this recursive training a problem? The answer lies in the mathematical nature of machine learning. When an AI model generates a response, it samples the next word or pixel from a learned probability distribution, and common decoding settings push those samples toward the most likely options. The output therefore tends to favor the “average” answer, smoothing out the rough edges of human expression.
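To make the “favoring the average” point concrete, here is a minimal sketch of temperature sampling over a handful of candidate words. The scores, the word list, and the helper names `sample_token` and `sample_counts` are illustrative assumptions, not values or APIs from any real model.

```python
import math
import random
from collections import Counter

def sample_token(logits, temperature, rng):
    """Draw one token from softmax(logits / temperature)."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    top = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]

def sample_counts(logits, temperature, draws=2000, seed=0):
    """Count how often each token is drawn at a given temperature."""
    rng = random.Random(seed)
    return Counter(sample_token(logits, temperature, rng) for _ in range(draws))

# Hypothetical scores for the word after "The sky is"; not from a real model.
logits = {"blue": 3.0, "clear": 2.0, "overcast": 1.0, "bruised": 0.0}

neutral = sample_counts(logits, temperature=1.0)
sharpened = sample_counts(logits, temperature=0.2)

print("temperature 1.0:", dict(neutral))
print("temperature 0.2:", dict(sharpened))
```

At the lower temperature almost every draw lands on the single most likely word, and the unusual choice (“bruised”) all but disappears. Text generated this way is a narrower sample of language than the data the model learned from.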

When a new model trains on this synthetic data, it learns from a simplified, averaged-out version of reality. If this process repeats over several generations—Model A trains Model B, which trains Model C—we encounter what scientists call “Model Collapse.”

Think of it like making a photocopy of a photocopy. The first copy is sharp and readable. The second is slightly grainier. By the tenth generation, the text is illegible, and the image is a high-contrast mess of black and white. In the context of AI, this degradation doesn’t manifest as blurriness, but as a loss of variance and logic. The models begin to forget rare events, nuance, and creativity, converging toward a homogenized, “beige” output that lacks the spark of human insight.
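The photocopy analogy can be reproduced in a toy simulation. The sketch below assumes the simplest possible “model”, a normal distribution fitted to its training data, and retrains each generation only on samples from the previous one. The function name, sample size, and generation count are arbitrary choices for illustration.

```python
import random
import statistics

def spread_per_generation(n_generations, sample_size, seed=0):
    """Fit a Gaussian to samples of the previous Gaussian, repeatedly."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for the human data
    history = [sigma]
    for _ in range(n_generations):
        # The new generation only ever sees the previous generation's output.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)  # maximum-likelihood estimate
        history.append(sigma)
    return history

history = spread_per_generation(n_generations=200, sample_size=10)
for generation in (0, 10, 50, 100, 200):
    print(f"generation {generation:3d}: spread = {history[generation]:.6f}")
```

Each refit slightly underestimates the spread on average, and the small losses compound. After enough generations the fitted distribution is a narrow spike, which is the numerical counterpart of the “beige” output described above.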

The Mathematics of Model Collapse

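The Ouroboros Effect is not just a philosophical concern. In a fully closed loop, where each generation trains only on the output of the one before it, degradation is the statistically expected outcome. Research has shown that when AI models train on synthetic data, the “tails” of the probability distribution disappear first, because rare items are the most likely to be missed in any finite sample and cannot be relearned once they are gone. These tails represent the outliers: the unique, the unconventional, and the highly specific pieces of information that often drive innovation.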
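The loss of the tails can be sketched with a toy vocabulary. The example assumes a long-tailed distribution over 50 made-up tokens and a “model” that is nothing more than the word frequencies observed in the previous generation's output. The vocabulary, sample size, and function name are all hypothetical.

```python
import random
from collections import Counter

def surviving_tokens_per_generation(probs, sample_size, n_generations, seed=0):
    """Resample a token distribution from its own output, generation by generation."""
    rng = random.Random(seed)
    tokens = list(probs)
    survivors = [sum(1 for t in tokens if probs[t] > 0)]
    for _ in range(n_generations):
        draws = rng.choices(tokens, weights=[probs[t] for t in tokens], k=sample_size)
        counts = Counter(draws)
        # The next "model" is just the empirical frequencies of the previous output.
        probs = {t: counts[t] / sample_size for t in tokens}
        survivors.append(sum(1 for t in tokens if probs[t] > 0))
    return survivors, probs

# Hypothetical long-tailed vocabulary: token k has weight proportional to 1/k.
vocab_size = 50
raw = {f"tok{k}": 1.0 / k for k in range(1, vocab_size + 1)}
total = sum(raw.values())
human_probs = {t: w / total for t, w in raw.items()}

survivors, final_probs = surviving_tokens_per_generation(
    human_probs, sample_size=200, n_generations=30
)
print(survivors)
```

A token that receives zero draws in one generation has probability zero in the next, so it can never come back. Rare tokens are the ones most likely to draw a zero, which is why the count of surviving tokens can only go down and the tail erodes first.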

As the models regress to the mean, they may also hallucinate more frequently. Without the grounding anchor of human-generated truth, an AI trained on its predecessors' output has no way to tell their errors from facts. This creates a compounding effect where misconceptions are amplified rather than corrected. In a world increasingly reliant on automation for information retrieval, this risks creating a “reality tunnel” where the internet becomes a hall of mirrors, reflecting only the most generic and statistically probable consensus, regardless of whether it is true.

Beyond Text: Robotics and the Physical World

While the Ouroboros Effect is most visible in text and image generation, it also poses challenges in the fields of robotics and physical automation. Robots are often trained in simulated environments—digital twins of the real world—before they are deployed into physical reality. These simulations are increasingly powered by generative models.

If the physics engines and scenarios used to train robots are generated by AI that has lost touch with the complexities of the real world (due to model collapse), the robots may fail when facing the unpredictability of physical environments. A robot trained on “averaged” movement data may lack the agility to handle the chaotic edge cases of a real-world factory or household. Just as an LLM needs human text to stay smart, a robot needs real-world physical data to remain functional.

The Quest for Organic Data

The realization of the Ouroboros Effect has triggered a new gold rush in the tech industry: the search for “organic” data. Tech giants are no longer just looking for more data; they are looking for human data. We are seeing a shift where data provenance—knowing exactly where information came from—is becoming the most valuable asset in computer science.
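A toy experiment can illustrate why fresh human data is worth paying for. The sketch below assumes a “model” that is just a normal distribution fitted to its training batch, and compares a closed loop against one where half of every batch comes from the original distribution. The `real_fraction` parameter and the 50% mix are illustrative assumptions, not a recommended ratio.

```python
import random
import statistics

def final_spread(real_fraction, n_generations=300, sample_size=20, seed=0):
    """Refit a Gaussian each generation on a mix of real and synthetic samples."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # the original "human" distribution is N(0, 1)
    n_real = round(real_fraction * sample_size)
    for _ in range(n_generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_real)]
        batch = real + synthetic
        mu = statistics.fmean(batch)
        sigma = statistics.pstdev(batch)
    return sigma

closed_loop = final_spread(real_fraction=0.0)
anchored = final_spread(real_fraction=0.5)

print(f"closed loop spread:     {closed_loop:.6f}")
print(f"anchored (50% real):    {anchored:.6f}")
```

In the closed loop the spread shrinks toward zero, while the anchored run keeps a spread of roughly the original order of magnitude because every generation is pulled back toward the real distribution. Real training pipelines are far more complex, but the sketch shows why knowing which data is genuinely human-made has become so valuable.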

This may lead to a bifurcated internet. On one side, the “gray goo” of infinite, AI-generated content designed to game search algorithms. On the other, gated communities and verified platforms where human interaction is authenticated and preserved as a premium resource for training future neural networks. Paradoxically, the more advanced AI becomes, the more valuable authentic human imperfection becomes.

In Brief (TL;DR)

The Ouroboros Effect threatens digital integrity as AI models begin consuming their own synthetic content in a recursive loop.

Repeated training on machine-generated data causes Model Collapse, erasing human nuance and resulting in homogenized, low-quality outputs.

This saturation of synthetic information forces a desperate industry search for organic human data to preserve algorithmic accuracy.

Conclusion

The Ouroboros Effect serves as a stark reminder of the symbiotic relationship between humanity and its tools. We often view artificial intelligence as an entity that will eventually surpass us, but the phenomenon of model collapse suggests otherwise. AI cannot exist in a vacuum; it requires the constant infusion of human creativity, unpredictability, and lived experience to maintain its intelligence. If the internet continues to eat itself, consuming its own synthetic output, the digital world risks becoming a sterile echo chamber. To save the future of AI, we must prioritize and protect the very thing it tries to emulate: the human mind.

Frequently Asked Questions

What is the Ouroboros Effect in AI?

The Ouroboros Effect describes a recursive cycle where AI systems are trained on data generated by previous AI models rather than original human content. Similar to the ancient symbol of a snake eating its own tail, this self-consuming loop leads to a degradation in the quality of digital intelligence. As models ingest synthetic data, they lose nuance and variance, threatening the integrity of future information ecosystems.

What causes Model Collapse?

Model Collapse happens when neural networks are trained on synthetic data produced by their predecessors, leading to a loss of statistical variance. Because AI models tend to output the most probable or average answers, repeated training on this output causes the system to forget rare events and creative nuances. Over several generations, this results in a homogenized output that lacks the logic and complexity found in organic human data.

Why is organic human data important for AI?

Organic human data is crucial because it provides the grounding truth, creativity, and unpredictability that AI models need to remain functional. Without the messy and distinct input of human cognition, AI systems regress to the mean and begin to hallucinate, mistaking their own errors for facts. Tech companies are now prioritizing data provenance to ensure their models are fed with authentic human experiences rather than recycled machine output.

How does recursive AI training affect robotics?

Recursive AI training poses a significant risk to robotics because robots are often trained in simulated environments generated by AI. If these simulations suffer from Model Collapse, they present an averaged and simplified version of physics that does not reflect reality. Consequently, robots trained in these flawed digital twins may fail when facing the chaotic and unpredictable edge cases of the physical world.

How might synthetic content change the internet?

The flood of synthetic content is likely to create a bifurcated internet. One section may become saturated with machine-generated text and images designed to game search algorithms, often referred to as “gray goo.” In response, valuable human interactions may retreat to gated communities and verified platforms. These protected spaces would serve as premium resources for preserving authentic human intelligence and training future neural networks.
