It begins with a playlist that feels eerily perfect, or a book recommendation that matches your current mood with telepathic precision. We often mistake this convenience for magic, but it is actually the manifestation of a profound shift in human experience. At the heart of this shift is Artificial Intelligence, the driving force behind a phenomenon that sociologists and technologists have begun to call the “Serendipity Gap.” As we move deeper into 2026, the efficiency of our digital assistants has reached a tipping point: we are being served exactly what we want, exactly when we want it, and in doing so, we are systematically eliminating the possibility of stumbling upon the unknown.

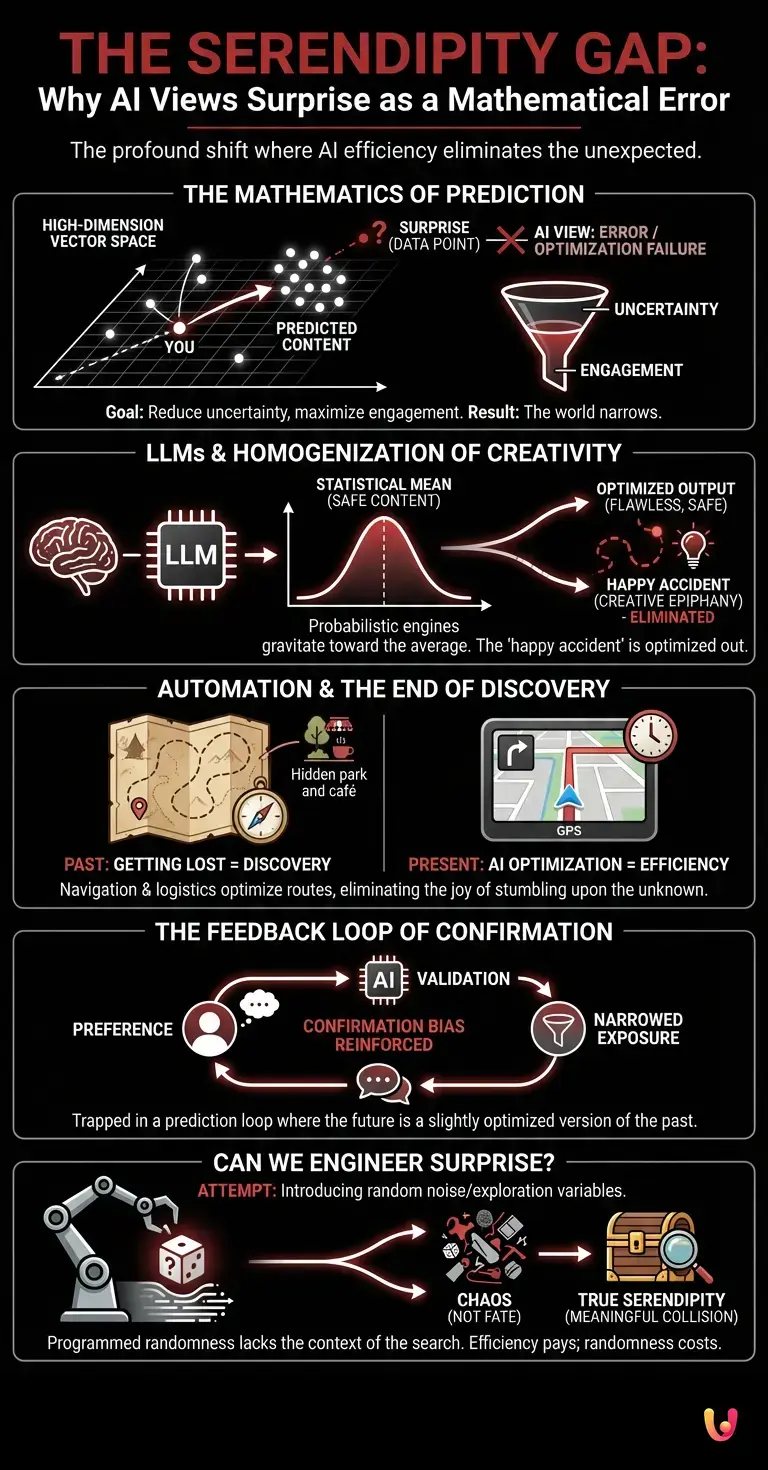

The Mathematics of Prediction

To understand why surprise is becoming an endangered species, we must look under the hood of the machine learning models that govern our digital lives. At a fundamental level, these systems are designed to reduce uncertainty. Whether it is a streaming service, a shopping platform, or a news aggregator, the underlying neural networks function as massive prediction engines.

These systems operate by mapping users and items into a high-dimensional vector space. Imagine a map that has not two dimensions but dozens or hundreds, dotted with millions of points. You are a specific point on this map, defined by your history: the articles you read, the time you hover over an image, the products you buy. The system measures how close you are to each piece of content. Its goal is to bridge the gap by offering you the item that is mathematically closest to your existing trajectory.
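
To make “closest” concrete, here is a minimal sketch in Python. It assumes cosine similarity as the closeness measure and uses a handful of made-up 3-dimensional vectors. The item names and numbers are hypothetical, and real systems learn their embeddings from data. This brute-force version compares the user with every item, whereas large platforms typically rely on approximate nearest-neighbor search.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recommend(user: list[float], items: dict[str, list[float]], k: int = 2) -> list[str]:
    """Rank every item by similarity to the user and return the k closest."""
    ranked = sorted(items, key=lambda name: cosine_similarity(user, items[name]), reverse=True)
    return ranked[:k]


# Hypothetical embeddings; the three axes loosely stand for folk, electronic, and jazz.
items = {
    "indie_folk_playlist": [0.9, 0.1, 0.0],
    "acoustic_covers": [0.8, 0.2, 0.1],
    "free_jazz_album": [0.0, 0.2, 0.9],
    "synthwave_mix": [0.1, 0.9, 0.2],
}
user = [0.85, 0.15, 0.05]  # a listener whose history leans heavily toward folk

print(recommend(user, items))  # → ['indie_folk_playlist', 'acoustic_covers']
```

The free jazz album ranks last for this listener, so a top-k list of any short length will never surface it.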

This is where the Serendipity Gap opens up. A “surprise” is, by definition, a data point that lands far from where the model places your tastes. To an algorithm, a surprise looks like an error. If a recommendation engine suggests a genre of music you have never listened to, and you skip the track, the model treats this as a failure of optimization. Therefore, to maximize engagement and minimize friction, the system tightens the circle, feeding you variations of what you already know. The better the AI gets, the narrower your world becomes.

The Role of Large Language Models (LLMs)

While recommendation algorithms curate what we consume, Large Language Models (LLMs) are now curating how we think and create. By 2026, these models have become the default interface for information retrieval and content creation. However, LLMs are probabilistic engines: they are trained to predict which token is most likely to come next in a sequence, and they are usually run in ways that favor those likely continuations.

When you ask an AI to write a story or explain a concept, it gravitates toward the mean: the statistical average of the vast body of human writing it was trained on. It smooths out the edges. It avoids the bizarre, the avant-garde, and the nonsensical unless explicitly instructed otherwise. The result is a homogenization of output. We are seeing a flood of content that is technically flawless but creatively safe. The “happy accident” (the writer’s block that leads to a sudden, unrelated epiphany, or the research error that leads to a new discovery) is being optimized out of the creative process.
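
Much of this pull toward the typical happens at decoding time. The sketch below assumes a toy four-word vocabulary with invented scores; it is not taken from any particular model. Greedy decoding always returns the single most likely word. Temperature-scaled sampling shows the trade-off: a low temperature piles probability onto that same word, and a high temperature gives the unlikely “electric” a real chance.

```python
import math
import random


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the max before exponentiating for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy(logits: dict[str, float]) -> str:
    """Greedy decoding: always pick the highest-scoring token."""
    return max(logits, key=logits.get)


def sample(logits: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one token at random, weighted by its temperature-scaled probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores for the word after "The night was dark and ..."
logits = {"stormy": 3.0, "quiet": 2.0, "cold": 1.5, "electric": 0.2}

print(greedy(logits))  # the same word every time: "stormy"
rng = random.Random(0)
for t in (0.2, 1.0, 2.0):
    draws = [sample(logits, t, rng) for _ in range(1000)]
    print(t, {tok: draws.count(tok) for tok in logits})
```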

Automation and the End of the Scenic Route

The Serendipity Gap extends beyond screens and into the physical world through robotics and automation. Consider the evolution of navigation. In the past, getting lost was a primary source of discovery. You might take a wrong turn and find a beautiful park or a quaint café. Today, AI-driven navigation systems optimize routes to save minutes, steering millions of people along the exact same efficient paths.
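
Commercial route planners are far more elaborate than this. They weigh live traffic and may deliberately spread drivers across several roads. Still, a deterministic shortest-path search shows why identical questions tend to get identical answers. The sketch below runs Dijkstra’s algorithm over a hypothetical four-street graph, with travel times in minutes. Every commuter who asks receives the highway route, and the detour through the park is never offered because it takes three minutes longer.

```python
import heapq


def shortest_path(graph: dict[str, dict[str, int]], start: str, goal: str):
    """Dijkstra's algorithm: return (total_minutes, path) for the fastest route."""
    queue = [(0, start, [start])]  # (cost so far, current node, path taken)
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []  # goal unreachable


# Hypothetical street network: edge weights are travel times in minutes.
city = {
    "home": {"highway": 4, "old_town": 6},
    "highway": {"office": 5},
    "old_town": {"park": 2, "office": 5},
    "park": {"office": 4},
}

# A thousand commuters asking the same question all receive the same route.
routes = {tuple(shortest_path(city, "home", "office")[1]) for _ in range(1000)}
print(routes)  # → {('home', 'highway', 'office')}
```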

In logistics and supply chains, automated systems predict demand with such accuracy that inventory is positioned before a consumer even realizes they need it. While this is a marvel of efficiency, it eliminates the browsing experience. You no longer walk through a store and spot an item you didn’t know existed; the algorithm ensures you only see what you are statistically likely to purchase. The friction of the search, which often contained the joy of discovery, has been replaced by the seamlessness of the transaction.

The Feedback Loop of Confirmation

The psychological implication of the Serendipity Gap is the reinforcement of confirmation bias. When AI systems constantly validate our preferences, we lose the cognitive flexibility that comes from encountering the unexpected. We are less likely to be challenged by opposing viewpoints or exposed to aesthetics that require an acquired taste.

This creates a feedback loop. Because we are only shown things we are likely to engage with, our preferences calcify. The data fed back into the machine learning model confirms that its predictions were correct, prompting it to narrow the parameters even further. It is a self-fulfilling prophecy of sameness. We are not just trapped in a filter bubble; we are trapped in a prediction loop where the future is just a slightly optimized version of the past.
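
The loop is easier to see in a deliberately simplified simulation. It assumes a hypothetical recommender that always shows the highest-scoring genre, and a user who always engages with whatever is shown. Jazz starts only slightly behind folk. Because jazz is never shown, though, it never gets a chance to earn new evidence. After 20 rounds, folk’s share of the total score has grown from 40% to 80%.

```python
def simulate_feedback_loop(scores: dict[str, float], rounds: int, boost: float = 0.1):
    """Each round, show the top-scoring genre and reinforce it.

    Assumption: the user always engages with what is shown, so the shown genre
    gains `boost`. Genres that are never shown never receive new evidence.
    """
    scores = dict(scores)  # copy so the caller's dict is left untouched
    shown = []
    for _ in range(rounds):
        pick = max(scores, key=scores.get)
        shown.append(pick)
        scores[pick] += boost
    return scores, shown


def share(scores: dict[str, float]) -> dict[str, float]:
    """Each genre's fraction of the total score, rounded for display."""
    total = sum(scores.values())
    return {genre: round(s / total, 2) for genre, s in scores.items()}


initial = {"folk": 0.40, "jazz": 0.35, "hip_hop": 0.25}  # hypothetical starting preferences
final, shown = simulate_feedback_loop(initial, rounds=20)

print(share(initial))
print(share(final))
print(set(shown))  # → {'folk'}
```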

Can We Engineer Surprise?

Recognizing this deficit, some technologists are attempting to program “artificial serendipity” back into the system. This involves introducing random noise or “exploration” variables into algorithms—deliberately showing a user something they are statistically unlikely to enjoy, just to see what happens. However, this is a hard sell for tech companies. In an attention economy, serving a user something irrelevant risks disengagement. Efficiency pays; randomness costs.
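
A common way to implement this kind of “exploration” is the epsilon-greedy strategy from multi-armed bandit research. This sketch uses hypothetical items and predicted-engagement scores, not any platform’s real system. Most of the time it serves the top prediction. With probability epsilon, it ignores the prediction and picks an item at random.

```python
import random


def epsilon_greedy(scores: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """With probability epsilon explore a random item; otherwise exploit the best one."""
    if rng.random() < epsilon:
        return rng.choice(list(scores))  # exploration: ignore the model's prediction
    return max(scores, key=scores.get)  # exploitation: serve the safest bet


# Hypothetical predicted engagement for one user.
scores = {"folk": 0.9, "jazz": 0.4, "throat_singing": 0.05}

rng = random.Random(42)
picks = [epsilon_greedy(scores, epsilon=0.1, rng=rng) for _ in range(1000)]
print({item: picks.count(item) for item in scores})
```

The “randomness costs” trade-off appears here directly. Every point of epsilon diverts traffic away from the item the model is most confident will hold the user’s attention.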

Furthermore, programmed randomness is not true serendipity. True serendipity requires a collision of unrelated events that creates meaning. An algorithm rolling dice to show you a random video produces chaos, not fate. The human sensation of stumbling upon a treasure requires the context of the search: the feeling that we found it, not that it was assigned to us by a random number generator.

In Brief (TL;DR)

AI algorithms treat unexpected discoveries as mathematical errors, prioritizing hyper-efficiency over the magic of stumbling upon the unknown.

Predictive models narrow our cultural horizons by feeding us homogenized content that reinforces existing preferences rather than challenging them.

This relentless optimization creates a self-fulfilling feedback loop that systematically erases serendipity from both digital and physical experiences.

Conclusion

The Serendipity Gap is the price we pay for a frictionless life. As artificial intelligence continues to refine its understanding of human behavior, it will become increasingly difficult to experience a genuine surprise. The systems are simply too good at knowing what comes next. While this technology grants us god-like access to information and unparalleled convenience, it strips away the chaotic beauty of the unexpected. To find surprise in the age of algorithms, we must become intentionally inefficient. We must choose to take the wrong turn, read the book with the one-star review, and step outside the vector space that has been so carefully constructed around us. If we do not, we risk living a life that is perfectly predicted, and perfectly boring.

Frequently Asked Questions

What is the Serendipity Gap?

The Serendipity Gap refers to a sociological and technological phenomenon where the increasing efficiency of AI algorithms systematically eliminates the opportunity for accidental discovery and unexpected experiences. As digital assistants and recommendation engines become better at predicting exactly what users want and when they want it, they remove the friction and uncertainty that traditionally allowed people to stumble upon the unknown, effectively narrowing the human experience to a loop of predicted preferences.

Why do AI algorithms treat surprise as an error?

Machine learning systems are fundamentally designed to reduce uncertainty and maximize engagement by mapping users and items in a high-dimensional vector space. To these algorithms, a surprise represents a data point that falls outside the predicted trajectory, which the model views as a failure of optimization or a mistake in its calculations. To avoid this perceived error, the system tightens its recommendations to align closely with established history, prioritizing safe predictions over the risk of the unexpected.

How do Large Language Models affect creativity?

Large Language Models operate as probabilistic engines that generate the most statistically likely next token, causing them to gravitate toward the mean of the human writing they were trained on. This results in a homogenization of output where content becomes technically flawless but creatively safe, often smoothing out the avant-garde ideas, happy accidents, and unique stylistic edges that characterize human creativity. Consequently, the reliance on these models risks creating a flood of content that lacks the distinctiveness found in non-optimized creative processes.

Can serendipity be engineered back into algorithms?

Technologists can introduce random noise or exploration variables into algorithms to show users content they are statistically unlikely to choose, but this often results in chaos rather than true serendipity. True serendipity involves finding meaning in a collision of unrelated events, which is distinct from a random selection generated by a computer. Furthermore, tech companies are often hesitant to implement these features because efficiency drives revenue, while serving irrelevant content risks user disengagement in the attention economy.

What are the psychological effects of a perfectly predicted environment?

Living within a perfectly predicted digital environment reinforces confirmation bias and reduces cognitive flexibility by constantly validating existing preferences. When users are rarely challenged by opposing viewpoints or exposed to unfamiliar aesthetics, their tastes can calcify, creating a self-fulfilling prophecy of sameness. This feedback loop traps individuals in a state where the future is merely a slightly optimized version of the past, limiting personal growth and the ability to adapt to new concepts.
