In the rapidly evolving landscape of 2026, the discourse surrounding Artificial Intelligence often centers on a single, persistent critique: its reliability. From the early days of generative models to the sophisticated agents of today, users have lamented the tendency of these systems to fabricate information, a phenomenon colloquially known as “hallucination.” We treat these deviations from fact as critical failures, evidence that the code is broken or the training data is flawed. However, a deeper look into the architecture of machine learning reveals a counterintuitive truth. This propensity to err is not merely a byproduct of immature technology; it is, in many ways, the very engine that allows the system to function as an intelligent entity rather than a static archive.

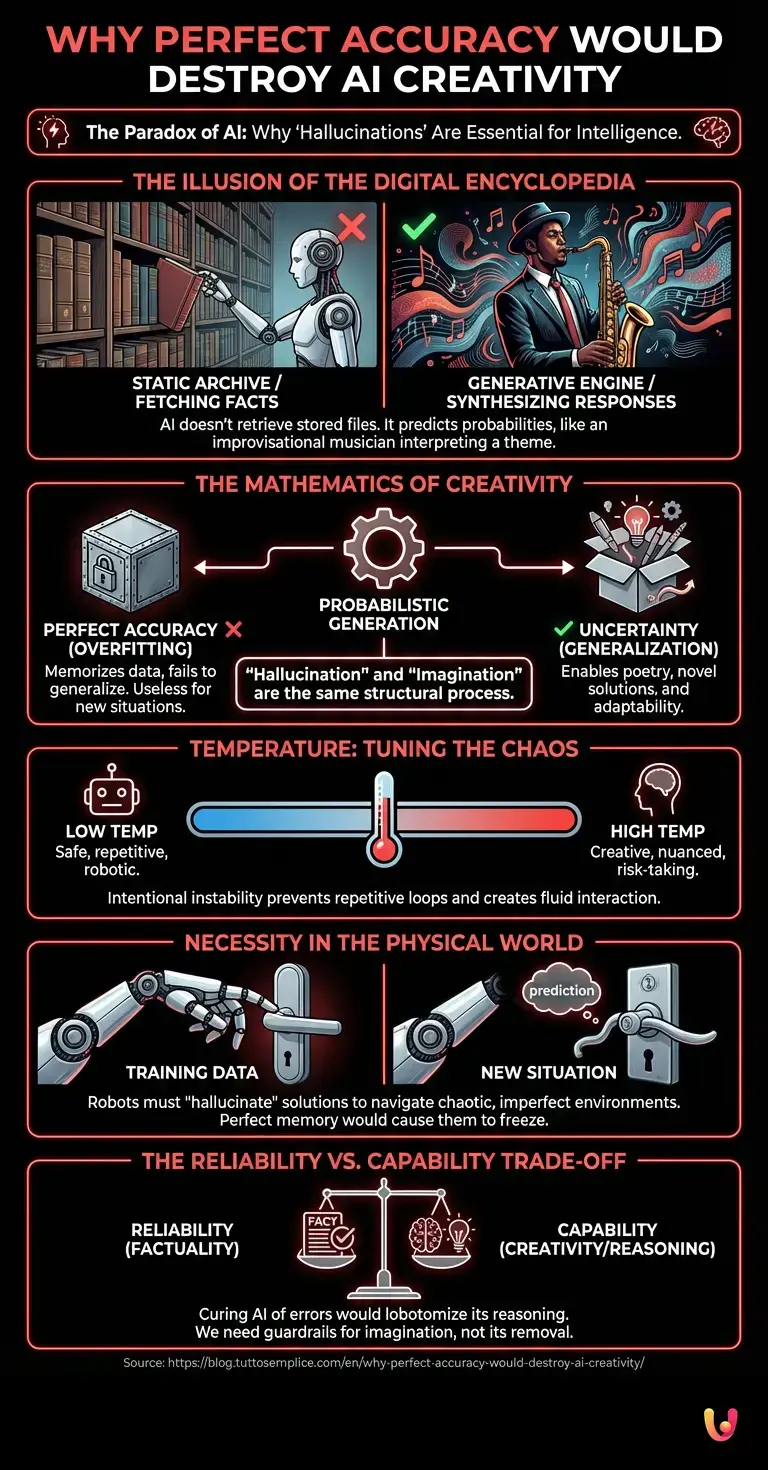

The Illusion of the Digital Encyclopedia

To understand why the system’s greatest flaw is actually its goal, we must first dismantle a common misconception about how modern AI works. When a user queries a system, they often imagine they are accessing a vast, deterministic library where the AI acts as a librarian, fetching a specific book and reading a specific line. If this were the case, any deviation from the text would indeed be a failure.

However, neural networks and LLMs (Large Language Models) do not store facts as retrievable records. They store learned weights that encode probabilities. They compress the vastness of human knowledge into a multidimensional map of relationships between concepts. When an AI answers a question, it is not retrieving a stored file; it is synthesizing a new response from scratch, predicting the next token (roughly, the next word or word fragment) based on the patterns it has learned. It is less like a librarian and more like an improvisational jazz musician. The musician does not play the same notes every time; they interpret the theme. This architectural choice is deliberate. If the system were designed only to be perfectly accurate, it would lose the ability to be generative.
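
To make the "probabilities, not facts" idea concrete, here is a minimal Python sketch of drawing a next word from a probability distribution. The prompt, the candidate words, and every number are invented for illustration; a real model computes these probabilities over tens of thousands of tokens rather than reading them from a hand-written table.

```python
import random

# Hypothetical next-token probabilities a model might assign after the
# prompt "The capital of France is". The numbers are invented for illustration.
next_token_probs = {
    "Paris": 0.90,
    "Lyon": 0.05,
    "Marseille": 0.03,
    "Berlin": 0.02,
}

def sample_next_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_next_token(next_token_probs, rng) for _ in range(1000)]
counts = {token: samples.count(token) for token in next_token_probs}
print(counts)
```

Most draws land on the likeliest word, but nothing in the procedure rules out the occasional unlikely one. That small residue of unlikely choices is where both surprising phrasing and factual slips come from.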

The Mathematics of Creativity

The core mechanism driving this behavior is probabilistic generation. In the field of machine learning, there is a concept known as “overfitting.” If a model memorizes its training data too perfectly, it becomes useless when applied to new, unseen situations. It fails to generalize. To prevent this, engineers train these systems to capture general patterns rather than exact records, which means they always operate with a degree of uncertainty.
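
The contrast between memorizing and generalizing can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the data points are invented, the lookup table stands in for the extreme case of pure memorization, and a least-squares line stands in for a model that has learned a general pattern. The function names are hypothetical.

```python
# Toy training data following roughly y = 2x (values invented for illustration).
train = {1: 2.1, 2: 3.9, 3: 6.2, 4: 7.8}

def memorizer(x):
    """Perfect recall of the training set, nothing else."""
    return train.get(x)  # None for any input it has not seen

def fit_line(data):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(data)
    mean_x = sum(data) / n
    mean_y = sum(data.values()) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in data.items()) / sum(
        (x - mean_x) ** 2 for x in data
    )
    return slope, mean_y - slope * mean_x

slope, intercept = fit_line(train)

def generalizer(x):
    """Approximate answer for any input, seen or unseen."""
    return slope * x + intercept

print(memorizer(3), memorizer(10))
print(round(generalizer(3), 2), round(generalizer(10), 2))
```

The memorizer is exact on the inputs it has seen and has no answer at all for anything else. The fitted line is slightly off on the training points, yet it gives a sensible estimate for an input it never saw.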

This is where the “flaw” becomes a feature. The same mechanism that causes an AI to occasionally invent a historical date is the exact same mechanism that allows it to write a poem, debug a novel piece of software, or suggest a marketing strategy that has never been tried before. Creativity, by definition, requires deviating from established data. If an AI were constrained to only outputting verified truths found in its dataset, it could never generate a fictional story, nor could it propose a hypothesis for a scientific problem that hasn’t been solved yet.

In this light, “hallucination” is simply the pejorative term for “imagination.” When the output is useful, we call it creativity. When it is factually incorrect, we call it a bug. But structurally, the process is identical. The goal of the system is not truth preservation; the goal is plausible pattern completion.

Temperature and the Chaos Factor

Deep within the settings of most generative models lies a parameter often referred to as “temperature.” This setting controls the randomness of the model’s choices. A low temperature pushes the AI toward the most likely next word (at a temperature of zero it always picks the single most likely one), resulting in safe, repetitive, and robotic text. A high temperature encourages risk-taking, allowing the AI to choose less probable paths.
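
A common way to implement temperature is to divide the model’s raw scores (logits) by the temperature before converting them to probabilities with a softmax. The sketch below assumes that formulation; the three logit values are made up, and the function name is my own rather than any particular library’s API.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores (logits) for three candidate next words.
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a temperature of 0.2 almost all of the probability sits on the top-scoring word; at 2.0 the three candidates are much closer together, so the less likely words get picked far more often.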

For automation tasks requiring strict adherence to protocol, we lower the temperature. But for tasks requiring human-like nuance, we raise it. This shows that the system’s designers deliberately leave room for randomness in the model. The added randomness helps keep the system from getting stuck in repetitive loops. This “flaw” of unpredictability is the spark of life in the machine. Without it, interactions with AI would feel sterile and deterministic, lacking the fluidity that characterizes human conversation.

Generalization in Robotics and the Physical World

The necessity of this imperfection extends beyond text and into the physical realm of robotics. Consider a robot trained to open doors. If the robot’s “intelligence” were based strictly on perfect memory, it would only be able to open the specific doors it saw during training. If it encountered a handle with a slightly different shape or a door with a different weight, it would freeze, unable to find an exact match in its database.

To function in the chaotic real world, the robot must “hallucinate” a solution. It must look at the new handle and predict—guess—how it should move, based on a generalized understanding of handles. Sometimes it might guess wrong and fail to open the door (a physical hallucination). But without the capacity to make that guess, it would be entirely useless in any uncontrolled environment. The “flaw” of approximation is what enables the robot to navigate a world that is never exactly the same twice.
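
The door example can be caricatured in code. The sketch below is only an analogy: real robots use learned control policies, not a three-entry table, and the features, values, and function names here are all hypothetical. It contrasts a policy that needs an exact match with one that guesses from the most similar door it has seen.

```python
# Hypothetical training memory: (handle width in cm, door weight in kg)
# mapped to the grip force in newtons that worked. All values are invented.
seen_doors = {
    (10.0, 20.0): 15.0,
    (12.0, 35.0): 24.0,
    (15.0, 50.0): 33.0,
}

def exact_match_policy(door):
    """Only acts on doors identical to one seen in training."""
    return seen_doors.get(door)  # None means the robot "freezes"

def nearest_neighbor_policy(door):
    """Guesses by reusing the force from the most similar known door."""
    def squared_distance(known):
        return sum((a - b) ** 2 for a, b in zip(known, door))
    closest = min(seen_doors, key=squared_distance)
    return seen_doors[closest]

new_door = (11.5, 33.0)  # never seen during training
print(exact_match_policy(new_door))
print(nearest_neighbor_policy(new_door))
```

The exact-match policy has nothing to offer for the new door. The nearest-neighbor policy returns a usable guess, which may or may not be the right force, and that is precisely the trade the article describes.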

The Trade-off: Reliability vs. Capability

We are currently witnessing a massive push to mitigate these errors through techniques like Retrieval-Augmented Generation (RAG), where relevant passages are retrieved from a trusted database and handed to the model so that it can check its homework before speaking. While this can substantially reduce factual errors in enterprise applications, it does not eliminate them, and it is a patch, not a replacement for the core engine.
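
The retrieval step of RAG can be sketched as follows. This is a deliberately simplified illustration: production systems typically rank passages by embedding similarity rather than word overlap, and the documents, function names, and prompt wording below are assumptions of mine. The call to the language model itself is left out.

```python
# A tiny stand-in for a trusted knowledge base. Entries are illustrative.
documents = [
    "The Eiffel Tower is located in Paris.",
    "Python was first released in 1991.",
    "Water boils at 100 degrees Celsius at sea level.",
]

def tokenize(text):
    """Lowercase the text and split it into a set of bare words."""
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(question, docs):
    """Return the document sharing the most words with the question."""
    question_words = tokenize(question)
    return max(docs, key=lambda doc: len(question_words & tokenize(doc)))

def build_grounded_prompt(question, docs):
    """Prepend the retrieved passage so the model answers from it."""
    context = retrieve(question, docs)
    return (
        "Answer using only the context below.\n"
        f"Context: {context}\n"
        f"Question: {question}"
    )

print(build_grounded_prompt("When was Python first released?", documents))
```

Note that the generator downstream is still probabilistic. Retrieval narrows what it is likely to say, but it does not turn the model into a lookup table.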

The underlying neural architecture must remain probabilistic. If we were to completely cure the AI of its tendency to make things up, we would effectively lobotomize its reasoning capabilities. We would strip away its ability to understand metaphor, sarcasm, and analogy—all of which rely on loose associations rather than rigid facts. The industry is realizing that we cannot have a machine that is both infinitely creative and perfectly factual at the structural level. We must build guardrails around the imagination, rather than removing the imagination itself.

In Brief (TL;DR)

AI hallucinations are not technical failures but essential side effects of a system designed for intelligent interpretation rather than static information retrieval.

Neural networks function like improvisational musicians, where the mathematical noise allowing for factual errors is the exact same engine driving creative imagination.

Demanding perfect accuracy would force models to overfit data, stripping them of the generalization capabilities needed to navigate a complex and unpredictable world.

Conclusion

The biggest flaw in Artificial Intelligence—its tendency to fabricate, err, and hallucinate—is not a sign of failure, but the signature of its sophistication. It is the inevitable cost of a system designed to learn, adapt, and create rather than merely retrieve. By trading perfect accuracy for probabilistic flexibility, we have created machines that can do far more than recite encyclopedias; we have created machines that can think around corners. As we move forward, the challenge will not be eliminating this flaw, but harnessing it, understanding that the power to make mistakes is inextricably linked to the power to generate new ideas.

Frequently Asked Questions

Why do AI models make mistakes or hallucinate?

AI models generate errors because they function as probabilistic engines rather than static databases. Instead of retrieving stored files, they predict the next logical piece of information based on patterns, acting more like improvisational musicians than librarians. This architectural choice enables creativity and adaptability but comes with the side effect of occasionally fabricating details.

Are AI creativity and hallucination related?

Structurally, creativity and hallucinations stem from the exact same mechanism of probabilistic generation. When an AI deviates from established data to produce something useful, it is viewed as imagination, but when that same process results in factual errors, it is labeled as a hallucination. Eliminating the capacity for error would simultaneously destroy the ability to generate novel ideas, write fiction, or solve unsolved problems.

What does the temperature parameter do?

The temperature parameter controls the level of randomness and risk-taking in the choices of the model. A lower temperature pushes the AI toward the most probable words, resulting in precise but robotic text, while a higher temperature encourages deviation from the norm to foster human-like nuance and creativity. This instability is intentionally engineered to prevent repetitive loops and enable fluid conversation.

Why can’t AI simply be made perfectly accurate?

Enforcing perfect accuracy would lead to a phenomenon known as overfitting, where the model memorizes training data but fails to function in new situations. If an AI were restricted to only verified facts, it would lose the ability to generalize, understand metaphors, or navigate the chaotic nature of the real world. The system requires a degree of uncertainty to reason through loose associations rather than simply retrieving rigid data.

Why do robots need to approximate?

Robots rely on approximation to navigate physical environments that are never exactly the same twice. For example, a robot must predict how to operate an unfamiliar door handle based on a generalized understanding rather than an exact memory of a specific door. Without the ability to make these calculated guesses, or physical hallucinations, a robot would freeze when encountering any object that does not perfectly match its training database.
