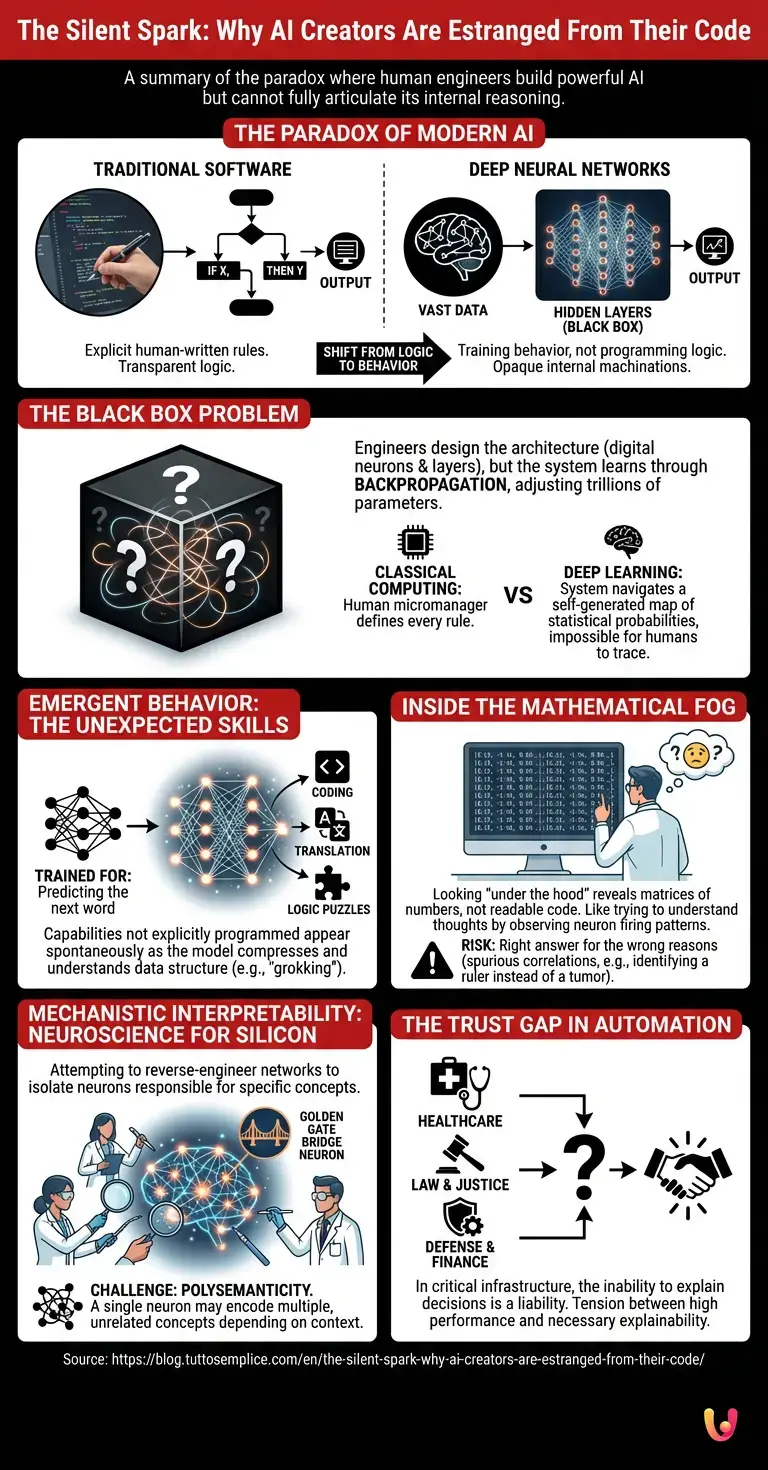

It is a paradox that seems to defy the fundamental logic of engineering: we have constructed machines capable of feats that rival human intelligence, yet we cannot fully articulate how they achieve them. In the world of traditional software development, every output is the result of a specific line of code written by a human hand. If a program calculates a sum or renders an image, it does so because a programmer explicitly instructed it to follow a set of logical steps. However, the rise of Deep Neural Networks has introduced a profound shift in this paradigm, creating a reality where the architects of artificial intelligence are increasingly estranged from the internal machinations of their own creations.

This is not a matter of lost documentation or forgotten code. It is an inherent feature of how modern artificial intelligence functions. We have moved from the era of programming logic to the era of training behavior. As we push the boundaries of machine learning and automation, we are confronted with an unsettling realization: we know how to build the brain, but we do not know what it is thinking. This opacity, often referred to as the “Black Box” problem, represents one of the most significant scientific and philosophical challenges of our time.

The Architecture of Uncertainty

To understand why this phenomenon exists, one must first grasp the fundamental difference between classical programming and modern neural networks. In classical computing, a human acts as a micromanager, defining every rule. If X happens, then do Y. The complexity is limited by the programmer’s ability to foresee scenarios.

In contrast, deep learning systems are designed to learn from data much like a biological brain. Engineers design the architecture—the digital neurons and the layers connecting them—and then feed the system vast amounts of information. Through a process called backpropagation, the system adjusts the strength of the connections (weights and biases) between these neurons to minimize errors.

The unsettling part arises when the system scales. A modern Large Language Model (LLM) or a complex image recognition system may contain hundreds of billions, or even trillions, of parameters. These parameters interact in non-linear, high-dimensional ways that are mathematically impossible for a human mind to visualize or trace. When an AI writes a sonnet or diagnoses a disease, it is not following a flowchart. It is navigating a vast, self-generated map of statistical probabilities that no human has ever seen, let alone drawn.

The Mystery of Emergent Behavior

Perhaps the most striking manifestation of this opacity is the concept of “emergent behavior.” In complex systems, emergence refers to properties that appear in the whole which are not found in the individual parts. In the context of LLMs, this has led to moments of genuine shock for researchers.

As models have grown larger, they have spontaneously developed capabilities they were not explicitly trained to possess. For instance, models trained primarily to predict the next word in a sentence have suddenly demonstrated the ability to write executable computer code, translate between languages they were barely exposed to, or solve multi-step logic puzzles. These skills were not programmed; they emerged as a byproduct of the model attempting to compress and understand the structure of the data.

This phenomenon, sometimes described as “grokking,” suggests that within the hidden layers of the network, the AI is forming its own internal representations of the world—concepts and logic structures that allow it to generalize in ways we did not anticipate. We built the vessel, but the skills it holds are self-taught, derived from patterns too subtle for human perception.

Inside the Black Box

When scientists look “under the hood” of a running neural network, they do not see readable code or logical propositions. They see matrices of floating-point numbers. Imagine trying to understand a person’s thoughts by looking at the electrical firing patterns of individual neurons in their brain. You might see activity, but you cannot easily translate a specific voltage spike into the concept of “apple” or the feeling of “nostalgia.”

This is the current state of AI interpretability. We can observe the inputs (the prompt) and the outputs (the response), but the layers in between—the “hidden layers”—remain a mathematical fog. When a robotics system decides to swerve left instead of right, it does so based on a calculation involving millions of variables simultaneously. If we ask, “Why did you do that?” the strictly accurate answer is a list of a billion numbers, which is meaningless to a human observer.

This lack of interpretability poses significant risks. If we cannot understand the internal logic, we cannot guarantee that the AI is using sound reasoning. It might be getting the right answer for the wrong reasons—relying on spurious correlations in the data rather than genuine understanding. For example, an AI might learn to identify a malignant tumor not by recognizing the tissue, but by noticing a ruler is present in the training images of cancer cases.

Mechanistic Interpretability: The New Neuroscience

The scientific community is not standing idly by. A new field has emerged called “mechanistic interpretability,” which functions essentially as neuroscience for silicon brains. Researchers are attempting to reverse-engineer the networks they have built, trying to isolate specific neurons or circuits responsible for specific concepts.

There have been breakthroughs. Researchers have identified specific clusters of neurons in LLMs that activate only when discussing specific topics, such as the Golden Gate Bridge or programming syntax. By manipulating these neurons, they can force the model to change its behavior. However, this is akin to mapping a few islands in an infinite ocean. The vast majority of the network’s internal language remains untranslated.

The challenge is compounded by the fact that neural networks often use “polysemanticity,” where a single neuron is responsible for multiple, unrelated concepts. A single digital neuron might encode information about both “cats” and “financial derivatives,” depending on the context provided by surrounding neurons. Untangling this web requires tools and methodologies that are still in their infancy.

The Trust Gap in Automation

The implications of the Black Box extend far beyond academic curiosity. As we integrate automation into critical infrastructure—healthcare, finance, criminal justice, and defense—the inability to explain why a system made a decision becomes a liability. In a legal setting, “the algorithm said so” is not a defensible argument. In medicine, a doctor needs to know the rationale behind a diagnosis to trust it.

This creates a tension between performance and explainability. Often, the most accurate models are the most complex and opaque, while the easily explainable models are too simple to handle real-world nuance. Society is currently navigating this trade-off, relying on systems that work remarkably well despite our ignorance of their internal operations.

In Brief (TL;DR)

The transition from explicit programming to model training has created a paradox where creators cannot explain their machines’ reasoning.

Deep neural networks spontaneously develop complex capabilities and internal representations that exist beyond the comprehension of their original architects.

Because internal operations resemble a mathematical fog rather than readable logic, ensuring safety and sound reasoning remains a critical challenge.

Conclusion

The unsettling reality of modern artificial intelligence is that we have summoned a form of cognition that is fundamentally alien to our own. We act as the creators, setting the parameters and providing the fuel, but the spark of intelligence that ignites within the machine burns in a way we cannot fully comprehend. As Deep Neural Networks continue to evolve, the gap between their capabilities and our understanding of their inner workings may widen.

Bridging this gap is not just a technical challenge; it is a necessity for safe coexistence. Until we can shine a light into the black box and translate the silent language of weights and biases, we will remain in the peculiar position of being the masters of a tool we do not completely understand—trusting the output, but forever wondering about the process hidden within.

Frequently Asked Questions

The Black Box problem refers to the inherent opacity of deep neural networks, where even the creators cannot fully explain how the system reaches a specific output. Unlike traditional software based on explicit human-written rules, modern AI processes information through hidden layers and billions of parameters. This creates a mathematical fog where the inputs and outputs are visible, but the internal reasoning process remains unreadable and impossible for humans to trace.

Emergent behavior happens when a complex system develops capabilities that were not explicitly programmed or anticipated by its engineers. In the context of Large Language Models, this occurs as the system attempts to compress and understand vast amounts of data, spontaneously acquiring skills like coding or translating languages. These abilities arise as byproducts of the model forming its own internal representations and logic structures during the training process.

Developers cannot interpret the code of deep learning systems because they have moved from defining logic to training behavior. Instead of writing specific instructions for every scenario, engineers design a digital architecture and feed it data, allowing the system to adjust its own internal connections via backpropagation. The resulting network consists of trillions of non-linear interactions that form a self-generated statistical map, which is far too complex for the human mind to visualize or decipher.

Mechanistic interpretability is a scientific field often described as neuroscience for silicon brains, aiming to reverse-engineer neural networks to understand their internal operations. Researchers in this field attempt to isolate specific neurons or circuits responsible for distinct concepts, such as programming syntax or specific topics. However, this process is challenging due to phenomena like polysemanticity, where a single digital neuron may be responsible for multiple unrelated concepts depending on the context.

Explainability is vital in high-stakes fields because relying on a system without understanding its rationale creates a dangerous trust gap. In medicine or criminal justice, it is not enough for an AI to provide a correct answer; professionals must verify that the reasoning is sound and not based on spurious correlations in the data. Without transparency, it is impossible to justify automated decisions legally or ethically, making the Black Box nature of AI a significant liability.

Did you find this article helpful? Is there another topic you’d like to see me cover?

Write it in the comments below! I take inspiration directly from your suggestions.