In Brief (TL;DR)

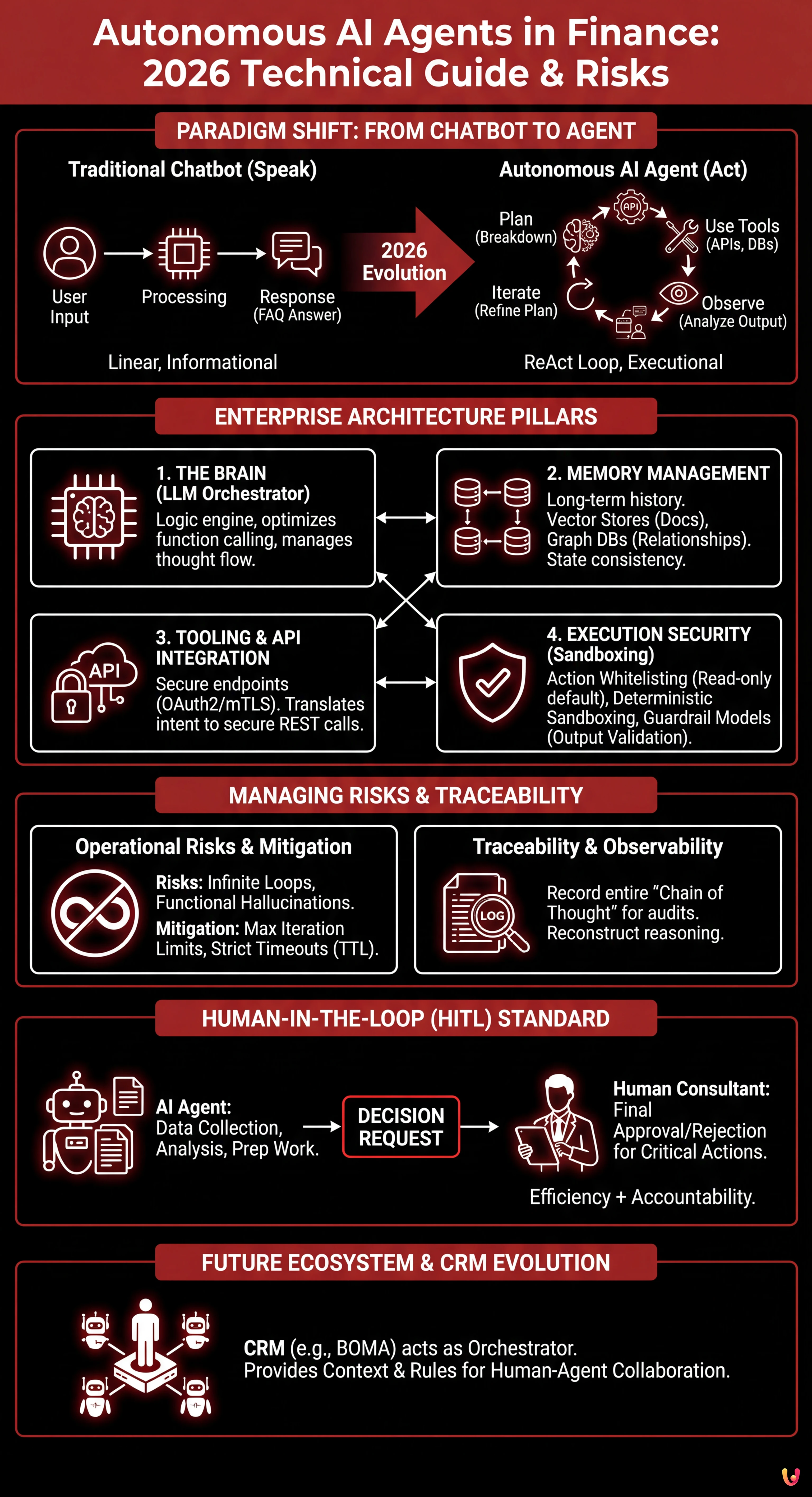

In 2026, the financial sector is moving toward autonomous AI agents that can plan and execute complex actions, not just hold a conversation.

A robust enterprise architecture must integrate logical orchestration, long-term memory, and secure API connections to manage critical banking operations.

Operational security depends on rigorous execution controls, deterministic sandboxing, and complete decision traceability to ensure compliance.


It is 2026, and the paradigm of financial Customer Service has definitively shifted. While two years ago the goal was to implement conversational chatbots capable of answering complex FAQs, today the frontier has moved towards autonomous AI agents. We are no longer talking about software that speaks, but digital entities that act. In the enterprise context, specifically in the banking and insurance sector, an AI’s ability to execute complex tasks – such as calculating a personalized mortgage installment, retrieving missing documents from heterogeneous databases, and booking a call with a human consultant – represents the new standard of operational efficiency.

This technical guide explores the architecture necessary to orchestrate these agents, analyzing critical challenges related to security, long-term memory, and decision traceability in a regulated environment.

From Static Automation to Autonomous Agent: The Paradigm Shift

The substantial difference between a traditional chatbot (even LLM-based) and autonomous AI agents lies in the execution cycle. While a chatbot follows a User Input -> Processing -> Response pattern, an autonomous agent operates according to a more complex cognitive loop, often based on the ReAct (Reason + Act) pattern or evolved architectures derived from frameworks like LangChain or AutoGPT.

In a financial scenario, the agent does not limit itself to saying “Here is the mortgage form.” The agent:

- Plans: Breaks down the request (“I want to renegotiate the mortgage”) into sub-tasks (Identity verification, Current debt situation analysis, New rate calculation, Proposal generation).

- Uses Tools: Queries Core Banking APIs, accesses the CRM, uses financial calculators.

- Observes: Analyzes tool output (e.g., “The client has an unpaid installment”).

- Iterates: Modifies the plan based on observation (e.g., “Before renegotiating, propose a repayment plan for the unpaid installment”).
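The four steps above can be sketched as a small loop. This is a minimal illustration, not a production agent: the tool functions, the `AgentState` class, and `choose_next_action` (a hard-coded stand-in for the LLM's reasoning step) are all hypothetical names introduced here.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical tools standing in for Core Banking and calculator APIs.
def get_debt_status(client_id: str) -> dict:
    return {"client_id": client_id, "unpaid_installments": 1}

def propose_repayment_plan(client_id: str) -> dict:
    return {"client_id": client_id, "plan": "3 monthly payments"}

TOOLS: dict[str, Callable[[str], dict]] = {
    "get_debt_status": get_debt_status,
    "propose_repayment_plan": propose_repayment_plan,
}

@dataclass
class AgentState:
    goal: str
    client_id: str
    observations: list = field(default_factory=list)

def choose_next_action(state: AgentState) -> Optional[str]:
    """Stub for the LLM reasoning step: returns a tool name, or None when done."""
    if not state.observations:
        return "get_debt_status"
    last = state.observations[-1]
    if last.get("unpaid_installments", 0) > 0:
        # The plan changes because of what was observed.
        return "propose_repayment_plan"
    return None

def run_agent(state: AgentState, max_steps: int = 5) -> AgentState:
    for _ in range(max_steps):
        action = choose_next_action(state)               # Plan / reason
        if action is None:
            break
        result = TOOLS[action](state.client_id)          # Use a tool
        state.observations.append({"tool": action, **result})  # Observe
    return state                                         # Loop = iterate

final = run_agent(AgentState(goal="renegotiate mortgage", client_id="C-001"))
print([obs["tool"] for obs in final.observations])
```

In a real deployment the stub would be replaced by a model call that returns a structured tool choice, while the loop itself would stay roughly the same.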

Enterprise Architecture for Financial Agents

To implement a synthetic digital workforce in a context like banking, a Python script is not enough. A robust architecture built on the following fundamental pillars is required.

1. The Brain (LLM Orchestrator)

The heart of the system is a Large Language Model (LLM) optimized for function calling. In 2026, models are not just text generators, but logic engines capable of selecting which tool to use among hundreds available. Orchestration occurs via frameworks that manage the agent’s flow of thought, ensuring it remains focused on the objective.

2. Long-Term Memory Management

A financial agent must remember. Not just the current conversation (Short-term memory), but the client’s history (Long-term memory). Here the architecture divides:

- Vector Stores (RAG): To retrieve policies, contracts, and unstructured documentation.

- Graph Databases: Fundamental for mapping relationships between entities (Client -> Account -> Joint Holder -> Guarantor).

The technical challenge is state consistency: the agent must know that the document uploaded yesterday is also valid for today’s request.
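To make the graph side of this memory concrete, here is a minimal in-memory sketch of a relationship walk along the Client -> Account -> Joint Holder -> Guarantor chain. The edge data, entity identifiers, and `related_entities` function are illustrative assumptions; a real system would query a graph database instead.

```python
from collections import deque

# Hypothetical relationship edges: (source, relation, target).
EDGES = [
    ("client:anna", "OWNS", "account:001"),
    ("account:001", "JOINT_HOLDER", "client:marco"),
    ("account:001", "GUARANTOR", "client:lucia"),
]

def related_entities(start: str, max_depth: int = 2) -> list:
    """Breadth-first walk returning (entity, relation, depth) tuples."""
    adjacency: dict = {}
    for src, rel, dst in EDGES:
        adjacency.setdefault(src, []).append((rel, dst))

    seen = {start}
    queue = deque([(start, 0)])
    found = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not expand beyond the requested depth
        for rel, dst in adjacency.get(node, []):
            if dst not in seen:
                seen.add(dst)
                found.append((dst, rel, depth + 1))
                queue.append((dst, depth + 1))
    return found

for entity, relation, depth in related_entities("client:anna"):
    print(depth, relation, entity)
```

Bounding the depth keeps the context handed to the agent small and limits how far it can wander from the client it is serving.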

3. Tooling and API Integration

Agents are useless without hands. In finance, “tools” are secure API endpoints. The architecture must provide an abstraction layer that translates the agent’s intent (“Check balance”) into a secure REST call, managing authentication (OAuth2/mTLS) and error handling without exposing sensitive data in the prompt.
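One possible shape for such an abstraction layer is a registry that maps each intent to a fixed request template and lists the fields that must never be echoed back into the prompt. The names `ToolSpec`, `REGISTRY`, `build_request`, and `redact_for_prompt`, as well as the endpoint path, are hypothetical. Authentication (OAuth2/mTLS) is assumed to be handled by the HTTP client and is left out of this sketch.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ToolSpec:
    method: str
    path_template: str
    sensitive_fields: tuple = ()

# Hypothetical registry: the agent can only name intents listed here.
REGISTRY = {
    "check_balance": ToolSpec(
        method="GET",
        path_template="/accounts/{account_id}/balance",
        sensitive_fields=("iban",),
    ),
}

def build_request(intent: str, **params: str) -> dict:
    """Translate an intent into a request description; unknown intents raise KeyError."""
    spec = REGISTRY[intent]
    return {"method": spec.method, "path": spec.path_template.format(**params)}

def redact_for_prompt(intent: str, response: dict) -> dict:
    """Mask sensitive fields before the tool output is shown to the model."""
    spec = REGISTRY[intent]
    return {
        key: "[REDACTED]" if key in spec.sensitive_fields else value
        for key, value in response.items()
    }

request = build_request("check_balance", account_id="001")
safe = redact_for_prompt("check_balance", {"iban": "IT60X0542811101", "balance": 1200})
print(request, safe)
```

Because the model only ever supplies an intent name and parameters, it never constructs URLs or sees credentials directly.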

Execution Security: The Concept of Sandboxing

Security is the main obstacle to the adoption of autonomous AI agents. If an agent has permission to execute wire transfers or modify records, the risk of errors or manipulation (Prompt Injection) is unacceptable.

Mitigation strategies in 2026 include:

- Action Whitelisting: The agent can propose any action, but can autonomously execute only read actions (GET requests). Write actions (POST/PUT/DELETE) require higher authorization levels.

- Deterministic Sandboxing: The execution of code or SQL queries generated by the AI occurs in isolated environments with read-only permissions strictly limited to the specific client context.

- Output Validation: Every response from the agent is passed through a “Guardrail Model,” a smaller and specialized model that verifies the response’s compliance with company policies before it reaches the user.
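The Action Whitelisting rule above can be expressed as a small authorization gate. This is a sketch under assumptions: the `Decision` enum, the `authorize` function, and the `elevated_authorization` flag are names invented for illustration, and a real system would derive that flag from its own entitlement service.

```python
from enum import Enum

class Decision(Enum):
    EXECUTE = "execute"
    NEEDS_AUTHORIZATION = "needs_authorization"
    REJECT = "reject"

READ_METHODS = {"GET"}
WRITE_METHODS = {"POST", "PUT", "DELETE"}

def authorize(method: str, elevated_authorization: bool = False) -> Decision:
    """Reads run autonomously; writes need elevated authorization; the rest is refused."""
    method = method.upper()
    if method in READ_METHODS:
        return Decision.EXECUTE
    if method in WRITE_METHODS:
        if elevated_authorization:
            return Decision.EXECUTE
        return Decision.NEEDS_AUTHORIZATION
    return Decision.REJECT  # anything not explicitly listed is denied

print(authorize("get"))
print(authorize("POST"))
print(authorize("POST", elevated_authorization=True))
print(authorize("PATCH"))
```

Denying unlisted methods by default means a new verb has to be added deliberately before the agent can use it.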

Traceability and Observability

In the event of an audit, the bank must be able to explain why the agent made a decision. Traditional logs are not enough. It is necessary to implement LLM Observability systems that record the entire “Chain of Thought.” This allows for the reconstruction of logical reasoning: “The agent denied the transaction because it detected an abnormal spending pattern in Tool X and applied Policy Y.”
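As a rough illustration of what such a record could look like, the sketch below stores each reasoning step as a structured entry and exports the session as JSON Lines. The `TraceStep` and `AuditTrail` classes and their field names are assumptions made for this example, not the schema of any specific observability product.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class TraceStep:
    step: int
    thought: str                  # the agent's stated reasoning for this step
    tool: str                     # which tool was called
    tool_output: dict             # what the tool returned
    policy: Optional[str] = None  # policy applied, if any
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

class AuditTrail:
    def __init__(self, session_id: str) -> None:
        self.session_id = session_id
        self.steps = []

    def record(self, thought: str, tool: str, tool_output: dict,
               policy: Optional[str] = None) -> TraceStep:
        entry = TraceStep(len(self.steps) + 1, thought, tool, tool_output, policy)
        self.steps.append(entry)
        return entry

    def to_jsonl(self) -> str:
        """One JSON object per line, ready for an append-only log store."""
        return "\n".join(
            json.dumps({"session_id": self.session_id, **asdict(step)}, sort_keys=True)
            for step in self.steps
        )

trail = AuditTrail("session-42")
trail.record("Check recent spending", "spending_monitor", {"abnormal": True})
trail.record("Deny transaction", "policy_engine", {"denied": True}, policy="Policy Y")
print(trail.to_jsonl())
```

Keeping the thought, the tool output, and the applied policy in one entry is what lets an auditor later reconstruct a sentence like the one quoted above.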

Operational Risks: Infinite Loops and Functional Hallucinations

A specific technical risk of autonomous agents is the infinite loop. An agent might enter a vicious cycle where it tries to retrieve a document, fails, retries, and fails again, consuming tokens and API resources endlessly. To mitigate this risk, it is essential to implement:

- Max Iteration Limits: A hard stop after a set number of logical steps (e.g., max 10 steps to resolve a ticket).

- Time-to-Live (TTL) Execution: Strict timeouts for every call to external tools.
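Both safeguards can be combined in one wrapper, sketched here with Python's standard `concurrent.futures`. The names `call_with_timeout`, `retrieve_with_limits`, and `StepLimitExceeded` are hypothetical. Note the stated limitation: a timed-out worker thread is abandoned, not killed, so real tools should also enforce their own network timeouts.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

class StepLimitExceeded(Exception):
    """Raised when the agent exhausts its iteration budget."""

def call_with_timeout(tool, timeout_s: float):
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(tool)
    try:
        return future.result(timeout=timeout_s)  # TTL for this single call
    finally:
        # Do not wait for a stuck worker; the thread itself keeps running.
        executor.shutdown(wait=False)

def retrieve_with_limits(tool, max_steps: int = 10, timeout_s: float = 1.0):
    for _attempt in range(max_steps):            # hard stop on iterations
        try:
            return call_with_timeout(tool, timeout_s)
        except (FutureTimeout, ConnectionError):
            continue                             # failed step, try again
    raise StepLimitExceeded(f"gave up after {max_steps} steps")

def slow_document_fetch():
    time.sleep(0.2)  # simulates a tool that never answers in time
    return "document"

try:
    retrieve_with_limits(slow_document_fetch, max_steps=2, timeout_s=0.05)
except StepLimitExceeded as exc:
    print("escalating to a human:", exc)
```

When the budget runs out, raising a dedicated exception gives the orchestrator a clear signal to stop and hand the case over instead of retrying silently.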

Human-in-the-loop: Final Approval

Despite autonomy, critical actions still require human supervision. The Human-in-the-loop (HITL) approach is the standard for high-impact operations (e.g., final approval of a loan or unblocking a suspended account).

In this scenario, the agent prepares all the grunt work: it collects data, performs preliminary analysis, fills out forms, and presents a “Decision Request” to the human consultant. The human only needs to click “Approve” or “Reject,” optionally adding notes. This reduces processing time by 90% while maintaining human accountability for final decisions.
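A "Decision Request" of this kind could be modeled as a small record that the agent fills in and only a human can resolve. The `DecisionRequest` class, its fields, and `execute_if_approved` are illustrative assumptions, not part of any named platform.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class DecisionRequest:
    client_id: str
    action: str                      # e.g. "approve_loan"
    summary: str                     # the agent's preliminary analysis
    evidence: dict = field(default_factory=dict)
    status: str = "pending"
    reviewer: Optional[str] = None
    notes: str = ""

    def resolve(self, reviewer: str, approve: bool, notes: str = "") -> None:
        """Record the human decision; a request can only be resolved once."""
        if self.status != "pending":
            raise ValueError("request already resolved")
        self.status = "approved" if approve else "rejected"
        self.reviewer = reviewer
        self.notes = notes

def execute_if_approved(request: DecisionRequest) -> str:
    """The critical action runs only after an explicit human approval."""
    if request.status != "approved":
        return "blocked"
    return f"executed:{request.action}"

request = DecisionRequest(
    client_id="C-001",
    action="approve_loan",
    summary="Income verified, debt ratio within policy.",
    evidence={"debt_ratio": 0.28},
)
print(execute_if_approved(request))  # still pending
request.resolve(reviewer="consultant-7", approve=True, notes="Looks fine.")
print(execute_if_approved(request))
```

Storing the reviewer and notes on the request itself keeps the human accountability for the final decision attached to the same record the agent prepared.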

The Role of BOMA and the Evolution of CRM

In this ecosystem, platforms like BOMA position themselves no longer as simple data repositories, but as orchestrators of the digital workforce. The CRM of the future (and, in 2026, of the present) is the interface where humans and autonomous AI agents collaborate. BOMA acts as the control layer that provides agents with the necessary context (client data) and imposes engagement rules (security policies), transforming the CRM from a passive tool into an active colleague.

Conclusions

The adoption of autonomous AI agents in financial customer service is not a simple technological upgrade, but an operational restructuring. It requires rigorous governance, an architecture designed for fallibility (graceful degradation), and security “by design.” Organizations that succeed in balancing agent autonomy with human control will define the new standards of efficiency and customer satisfaction in the coming decade.

Frequently Asked Questions

The fundamental distinction between traditional chatbots and autonomous AI agents lies in operational execution capability. While chatbots follow a linear cycle of input and conversational response, autonomous agents operate through complex cognitive loops, often based on the ReAct pattern, allowing them to plan, use external tools, and iterate actions based on results. They do not limit themselves to providing information but execute concrete tasks like calculating installments or retrieving documents from heterogeneous databases.

The security of autonomous agents in finance rests on rigorous strategies like Action Whitelisting, which limits the agent’s autonomy to read-only operations and requires higher authorization for data modifications. Furthermore, Deterministic Sandboxing is used to isolate code execution, and output validation models, known as Guardrail Models, verify the compliance of responses with company policies before they reach the end user.

Despite the high autonomy of agents, critical decisions like loan approval or account unblocking require human supervision to ensure legal accountability and compliance. In this model, the AI agent performs all preparatory work of data collection and analysis, presenting the human consultant with a structured final decision request, drastically reducing processing times without eliminating human control.

One of the main risks is the infinite loop, where the agent keeps retrying a failing action while consuming tokens and API resources. To prevent this phenomenon and to contain functional hallucinations, it is essential to implement maximum iteration limits for each task and strict timeouts for API calls. Additionally, complete traceability of the agent’s Chain of Thought allows its decision-making logic to be audited retrospectively.

An effective enterprise architecture uses both short-term memory for the current conversation and long-term memory for client history. This occurs through distinct technologies: Vector Stores to retrieve unstructured documentation like contracts and policies, and Graph Databases to map complex relationships between entities, ensuring the agent always has updated and consistent context to operate.
