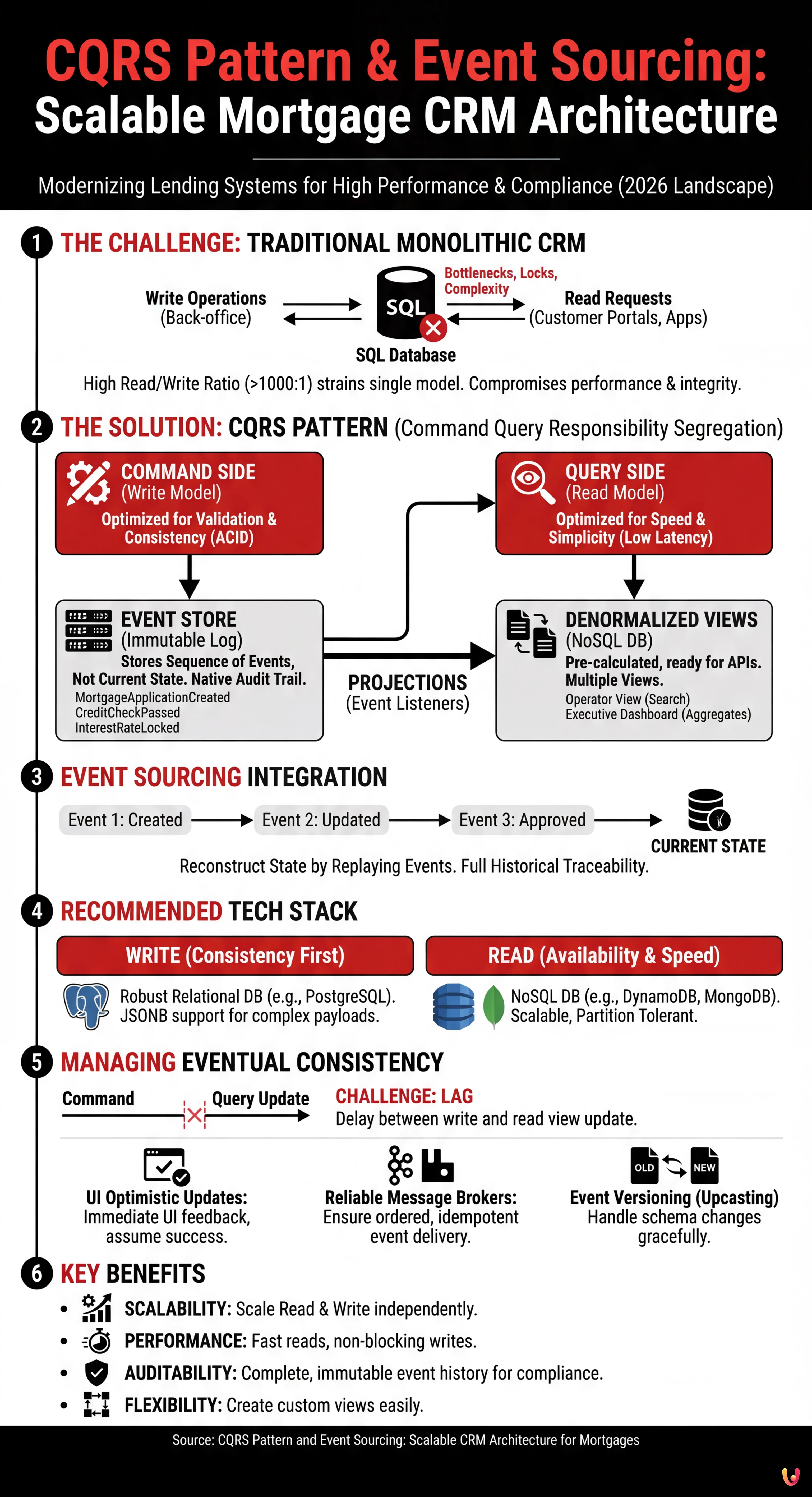

In the software engineering landscape of 2026, building CRM (Customer Relationship Management) systems for the lending sector requires a paradigm shift from traditional monolithic architectures. The main challenge is no longer just data management, but the ability to serve millions of read requests (rate consultations, simulations) without compromising the transactional integrity of write operations (application entry, processing). This is where the CQRS pattern (Command Query Responsibility Segregation) becomes not just useful, but indispensable.

In this technical article, we will explore how to decouple read operations from write operations to build a resilient, auditable, and high-performance infrastructure specifically for mortgage management.

What is the CQRS Pattern and Why is it Vital in Fintech

The CQRS pattern builds on the Command-Query Separation (CQS) principle defined by Bertrand Meyer: a method should be either a command that performs an action or a query that returns data to the caller, but never both. CQRS applies the same idea at the architectural level: the write model (Command) is separated from the read model (Query), logically and, in the architecture described here, physically.
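At the method level, the principle above can be illustrated with a minimal Python sketch. The `RateTable` class and the product name are hypothetical and used only for illustration:

```python
class RateTable:
    """Toy in-memory rate table illustrating command-query separation."""

    def __init__(self) -> None:
        self._rates: dict[str, float] = {}

    def set_rate(self, product: str, rate: float) -> None:
        # Command: changes state and returns nothing.
        self._rates[product] = rate

    def get_rate(self, product: str) -> float:
        # Query: returns data and changes nothing.
        return self._rates[product]


table = RateTable()
table.set_rate("fixed-20y", 2.5)
print(table.get_rate("fixed-20y"))
```

CQRS takes this split one step further by giving commands and queries their own models, and possibly their own data stores.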

The Single Model Problem in Mortgages

Let’s imagine a traditional banking CRM based on a single relational database (e.g., SQL Server or Oracle). The MortgageApplications table is subject to two types of stress:

- Write: Back-office operators update the application status, upload documents, and modify applied rates. These operations require strict ACID transactions.

- Read: Customer portals, mobile apps, and external comparators continuously query the system to obtain the application status or updated rates. The Read/Write ratio can easily exceed 1000:1.

Using the same data model for both purposes leads to lock contention, performance bottlenecks, and read queries that become increasingly hard to write and maintain. CQRS addresses this problem by creating two distinct stacks.

CQRS + Event Sourcing Architecture: The Heart of the System

For a mortgage management system, CQRS performs best when paired with Event Sourcing. Instead of saving only the current state of a file (e.g., «Status: Approved»), we save the sequence of events that led to that state.

The Command Side (Write)

The write model is responsible for validating business rules. It does not care how the data will be displayed, only that it is correct.

- Input: Commands (e.g., CreateMortgageApplication, ApproveIncome, LockRate).

- Persistence: Event Store. Here we do not save updatable records, but an immutable series of events.

- Recommended Technology: Robust relational databases like PostgreSQL, or purpose-built event stores like EventStoreDB.
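The append-only nature of the Event Store can be sketched with a small in-memory stand-in. Everything here is an assumption made for illustration: the `InMemoryEventStore` class, the stream ID format, and the `expected_version` check (a common optimistic-concurrency guard that the article itself does not prescribe). A real deployment would sit on PostgreSQL or EventStoreDB instead.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class Event:
    type: str
    payload: dict[str, Any]


class ConcurrencyError(Exception):
    """Raised when another writer appended to the stream first."""


@dataclass
class InMemoryEventStore:
    # Hypothetical stand-in for a real event store.
    streams: dict[str, list[Event]] = field(default_factory=dict)

    def load_stream(self, stream_id: str) -> list[Event]:
        # Return a copy so callers cannot mutate stored history.
        return list(self.streams.get(stream_id, []))

    def append(self, stream_id: str, events: list[Event], expected_version: int) -> None:
        stream = self.streams.setdefault(stream_id, [])
        if len(stream) != expected_version:
            raise ConcurrencyError(
                f"expected version {expected_version}, found {len(stream)}"
            )
        stream.extend(events)  # events are only ever added, never updated


store = InMemoryEventStore()
store.append("mortgage-42", [Event("MortgageApplicationCreated", {"amount": 200_000})], expected_version=0)
store.append("mortgage-42", [Event("CreditCheckPassed", {"score": 720})], expected_version=1)
print([e.type for e in store.load_stream("mortgage-42")])
```

The version check means that two operators working on the same application cannot silently overwrite each other: the second writer gets an error and has to reload the stream.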

Example of event flow for a single application:

- MortgageApplicationCreated (payload: personal data, requested amount)

- CreditCheckPassed (payload: credit score)

- InterestRateLocked (payload: rate 2.5%, expiration 30 days)

This approach guarantees a Native Audit Trail, a fundamental requirement for banking compliance (ECB/Bank of Italy). It is possible to reconstruct the state of the application at any past moment simply by replaying the events up to that date.
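Replaying events up to a given date can be sketched as a simple fold over the stream. The field names (`occurred_at`, `amount`, `score`, `rate`), the status labels, and the dates below are assumptions for illustration; the events are assumed to be stored in chronological order.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Any


@dataclass(frozen=True)
class Event:
    type: str
    occurred_at: datetime
    payload: dict[str, Any]


def apply(state: dict[str, Any], event: Event) -> dict[str, Any]:
    """Return the new state after one event (pure function, no mutation)."""
    if event.type == "MortgageApplicationCreated":
        return {"status": "Created", "amount": event.payload["amount"], "rate": None}
    if event.type == "CreditCheckPassed":
        return {**state, "status": "CreditChecked", "credit_score": event.payload["score"]}
    if event.type == "InterestRateLocked":
        return {**state, "status": "RateLocked", "rate": event.payload["rate"]}
    return state  # unknown events are ignored in this sketch


def state_as_of(events: list[Event], as_of: datetime) -> dict[str, Any]:
    """Rebuild the application state as it was at `as_of`."""
    state: dict[str, Any] = {}
    for event in events:  # assumed sorted by occurred_at
        if event.occurred_at > as_of:
            break
        state = apply(state, event)
    return state


history = [
    Event("MortgageApplicationCreated", datetime(2026, 1, 10), {"amount": 200_000}),
    Event("CreditCheckPassed", datetime(2026, 1, 12), {"score": 720}),
    Event("InterestRateLocked", datetime(2026, 1, 15), {"rate": 2.5}),
]
print(state_as_of(history, datetime(2026, 1, 13)))
```

Asking for the state on 13 January replays only the first two events, so the rate lock of 15 January is not yet visible.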

The Query Side (Read)

The read model is optimized for speed and simplicity of access. Data is denormalized and ready to be consumed by APIs.

- Update: Occurs via «Projections». A component listens to events emitted by the Command side and updates the read views.

- Recommended Technology: NoSQL databases like MongoDB or Amazon DynamoDB.

Thanks to this separation, if the customer portal requests the list of active applications, it queries a pre-calculated MongoDB collection without ever touching the transactional database where critical writes occur.
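A projection of this kind can be sketched as follows. The plain dictionary stands in for a MongoDB collection, and the event shapes, the `MortgageApplicationRejected` event, and the definition of "active" are assumptions made for illustration.

```python
from typing import Any

# Stand-in for a denormalized read collection, keyed by application ID.
read_model: dict[str, dict[str, Any]] = {}

INACTIVE_STATUSES = {"Rejected"}  # assumed definition of "not active"


def project(event: dict[str, Any]) -> None:
    """Update the read view in response to one event from the Command side."""
    app_id = event["application_id"]
    if event["type"] == "MortgageApplicationCreated":
        read_model[app_id] = {
            "application_id": app_id,
            "amount": event["payload"]["amount"],
            "status": "Created",
            "rate": None,
        }
    elif event["type"] == "InterestRateLocked":
        read_model[app_id]["status"] = "RateLocked"
        read_model[app_id]["rate"] = event["payload"]["rate"]
    elif event["type"] == "MortgageApplicationRejected":
        read_model[app_id]["status"] = "Rejected"


def list_active_applications() -> list[dict[str, Any]]:
    """Query side: reads only the precomputed view, never the event store."""
    return [doc for doc in read_model.values() if doc["status"] not in INACTIVE_STATUSES]


for evt in [
    {"type": "MortgageApplicationCreated", "application_id": "A1", "payload": {"amount": 200_000}},
    {"type": "InterestRateLocked", "application_id": "A1", "payload": {"rate": 2.5}},
    {"type": "MortgageApplicationCreated", "application_id": "A2", "payload": {"amount": 120_000}},
    {"type": "MortgageApplicationRejected", "application_id": "A2", "payload": {}},
]:
    project(evt)

print(list_active_applications())
```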

Tech Stack: Relational vs NoSQL in the CQRS Context

The choice of stack in 2026 is no longer «one or the other», but «the best for the specific purpose».

For the Write Model (Consistency First)

Here the priority is referential integrity and strong consistency. PostgreSQL remains an excellent choice for its reliability and native JSONB support, which allows storing complex event payloads while keeping ACID guarantees.

For the Read Model (Availability & Partition Tolerance)

Here the priority is low latency. DynamoDB (or Cassandra for on-premise installations) excels. We can create different «Views» (Materialized Views) based on the same data:

- Operator View: Optimized for search by Surname/Tax Code.

- Executive Dashboard View: Pre-calculated aggregates on disbursement volumes by region.
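Both views can be derived from the same events, each shaped for its own access pattern. In the sketch below the event fields, the placeholder tax codes, and the two dictionaries (standing in for DynamoDB or Cassandra tables) are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical disbursement events; identifiers are placeholders.
events = [
    {"application_id": "A1", "surname": "Rossi", "tax_code": "TAXCODE-001", "region": "Lazio", "amount": 150_000},
    {"application_id": "A2", "surname": "Bianchi", "tax_code": "TAXCODE-002", "region": "Lombardia", "amount": 220_000},
    {"application_id": "A3", "surname": "Rossi", "tax_code": "TAXCODE-003", "region": "Lazio", "amount": 90_000},
]

operator_view = defaultdict(list)  # surname or tax code -> application IDs
dashboard_view = defaultdict(int)  # region -> total disbursed amount

for event in events:
    # Operator View: index each application under both search keys.
    operator_view[event["surname"].lower()].append(event["application_id"])
    operator_view[event["tax_code"]].append(event["application_id"])
    # Executive Dashboard View: keep a running aggregate per region.
    dashboard_view[event["region"]] += event["amount"]

print(operator_view["rossi"])
print(dashboard_view["Lazio"])
```

Because both views are rebuilt from events, adding a third view later only requires replaying the stream through a new projection.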

Engineering Challenges: Synchronization and Eventual Consistency

Implementing the CQRS pattern introduces non-negligible complexity: Eventual Consistency. Since there is a delay (often on the order of milliseconds, but potentially seconds) between writing the event and updating the read view, users might not immediately see their own changes.

Mitigation Strategies

1. User Interface Management (UI Optimistic Updates)

Do not wait for the read view to catch up. Once the command has been accepted (for example, with a 200 OK response), the frontend should update its local state on the assumption that the projection will follow shortly, and reconcile with the read model on the next refresh.
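The idea can be sketched independently of any frontend framework. The example below uses Python for consistency with the other examples; the `ApplicationScreen` class and its method names are hypothetical, and the reconciliation rule (drop a pending change once the read model shows the same value) is one simple choice among several.

```python
class ApplicationScreen:
    """Client-side state for one application screen (illustrative sketch)."""

    def __init__(self, confirmed: dict) -> None:
        self.confirmed = dict(confirmed)  # last data fetched from the read API
        self.pending: dict = {}           # changes accepted by the command API

    def on_command_accepted(self, changes: dict) -> None:
        # Show the change right away, before the projection catches up.
        self.pending.update(changes)

    def on_read_model_refreshed(self, fresh: dict) -> None:
        self.confirmed = dict(fresh)
        # Drop pending entries once the read model reflects them.
        self.pending = {k: v for k, v in self.pending.items() if fresh.get(k) != v}

    def view(self) -> dict:
        # Pending changes overlay the confirmed data.
        return {**self.confirmed, **self.pending}


screen = ApplicationScreen({"status": "Created", "rate": None})
screen.on_command_accepted({"rate": 2.5})
screen.on_read_model_refreshed({"status": "Created", "rate": None})  # stale read
print(screen.view())
screen.on_read_model_refreshed({"status": "RateLocked", "rate": 2.5})  # caught up
print(screen.view())
```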

2. Reliable Message Brokers

To synchronize the Command and Query sides, a robust message bus is needed; Apache Kafka and RabbitMQ are common choices. The architecture must guarantee event ordering per application (to prevent an «Approval» event from being processed before «Creation») and idempotency (processing the same event twice must not corrupt data). With Kafka, ordering holds only within a partition, so events are typically keyed by application ID.
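On the consumer side, both guarantees can be enforced with a per-application sequence number. The sketch below is broker-agnostic; the `seq` field, the `OrderedIdempotentConsumer` class, and the event names are assumptions for illustration rather than features of Kafka or RabbitMQ.

```python
from typing import Any, Callable


class OrderedIdempotentConsumer:
    """Applies each event once, in sequence order, per application."""

    def __init__(self, handler: Callable[[dict[str, Any]], None]) -> None:
        self._handler = handler
        self._last_seq: dict[str, int] = {}                        # application -> last applied seq
        self._parked: dict[tuple[str, int], dict[str, Any]] = {}   # early arrivals

    def receive(self, event: dict[str, Any]) -> None:
        app_id, seq = event["application_id"], event["seq"]
        last = self._last_seq.get(app_id, 0)
        if seq <= last:
            return  # duplicate delivery: already applied, ignore
        self._parked[(app_id, seq)] = event
        # Apply every event that is next in line; later ones stay parked.
        while (app_id, last + 1) in self._parked:
            last += 1
            self._handler(self._parked.pop((app_id, last)))
            self._last_seq[app_id] = last


applied: list[str] = []
consumer = OrderedIdempotentConsumer(lambda e: applied.append(e["type"]))

created = {"application_id": "A1", "seq": 1, "type": "MortgageApplicationCreated"}
approved = {"application_id": "A1", "seq": 2, "type": "MortgageApplicationApproved"}

consumer.receive(approved)  # arrives early, parked
consumer.receive(created)   # fills the gap, both are applied in order
consumer.receive(created)   # redelivered duplicate, ignored
print(applied)
```

In a real system the last applied sequence number would be stored together with the read view, so that the check survives a consumer restart.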

3. Event Versioning

In the lifecycle of CRM software, data structure changes. What happens if we add an «Energy Class» field to the PropertyDetailsUpdated event? It is necessary to implement Upcasting strategies, where the system is able to read old versions of events and convert them on the fly into the new format before applying them to projections.
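An upcaster for the «Energy Class» case can be sketched as a chain of small conversion functions. The `version` field, the `energy_class` key, the default of `None` for old events, and the sample payload are assumptions for illustration.

```python
from typing import Any, Callable

Event = dict[str, Any]


def property_details_v1_to_v2(event: Event) -> Event:
    # Old events have no energy class; mark it as unknown rather than guessing.
    payload = {**event["payload"], "energy_class": None}
    return {**event, "version": 2, "payload": payload}


# (event type, stored version) -> function producing the next version
UPCASTERS: dict[tuple[str, int], Callable[[Event], Event]] = {
    ("PropertyDetailsUpdated", 1): property_details_v1_to_v2,
}


def upcast(event: Event) -> Event:
    """Convert a stored event to the latest known version, step by step."""
    while (event["type"], event["version"]) in UPCASTERS:
        event = UPCASTERS[(event["type"], event["version"])](event)
    return event


old_event = {"type": "PropertyDetailsUpdated", "version": 1, "payload": {"surface_sqm": 85}}
print(upcast(old_event))
```

The stored event is never modified: conversion happens on read, so the Event Store stays immutable and projections only ever see the newest format.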

Practical Implementation: Example of Command Handler

Here is logical pseudocode of how a Command Handler manages a rate change request in a CQRS architecture:

```csharp
class ChangeRateHandler {
    public void Handle(ChangeRateCommand command) {
        // 1. Load the event stream for this Mortgage ID
        var eventStream = _eventStore.LoadStream(command.MortgageId);

        // 2. Reconstruct current state (Replay)
        var mortgage = new MortgageAggregate(eventStream);

        // 3. Execute domain logic (Validation)
        // Throws exception if state does not allow rate change
        mortgage.ChangeRate(command.NewRate);

        // 4. Save generated new events
        // Captured once so the same events are stored and then published
        var newEvents = mortgage.GetUncommittedChanges();
        _eventStore.Append(command.MortgageId, newEvents);

        // 5. Publish events to Bus to update Read Models
        _messageBus.Publish(newEvents);
    }
}
```
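The same five steps can be exercised end to end with a small runnable Python sketch. The dictionary used as event store, the list used as message bus, the event names, and the business rule (a rate can only be changed while the application is in the `Created` status) are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MortgageAggregate:
    history: list
    status: str = "New"
    rate: Optional[float] = None
    uncommitted: list = field(default_factory=list)

    def __post_init__(self) -> None:
        for event in self.history:  # replay past events to rebuild state
            self._apply(event)

    def _apply(self, event: dict) -> None:
        if event["type"] == "MortgageApplicationCreated":
            self.status = "Created"
        elif event["type"] == "InterestRateChanged":
            self.rate = event["rate"]
        elif event["type"] == "MortgageDisbursed":
            self.status = "Disbursed"

    def change_rate(self, new_rate: float) -> None:
        # Assumed rule: the rate is frozen once the mortgage is disbursed.
        if self.status != "Created":
            raise ValueError(f"cannot change rate in status {self.status}")
        event = {"type": "InterestRateChanged", "rate": new_rate}
        self._apply(event)
        self.uncommitted.append(event)


def handle_change_rate(store: dict, bus: list, mortgage_id: str, new_rate: float) -> None:
    mortgage = MortgageAggregate(list(store.get(mortgage_id, [])))  # steps 1 and 2
    mortgage.change_rate(new_rate)                                  # step 3
    new_events = list(mortgage.uncommitted)
    store.setdefault(mortgage_id, []).extend(new_events)            # step 4
    bus.extend(new_events)                                          # step 5


store = {"M1": [{"type": "MortgageApplicationCreated"}]}
bus: list = []
handle_change_rate(store, bus, "M1", 2.3)
print(store["M1"], bus)
```

Storing and publishing are two separate operations here, so a crash between them would leave the read side behind; production systems usually need an additional mechanism to close that gap.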

In Brief (TL;DR)

CQRS architecture overcomes the limits of monolithic systems by logically and physically separating read flows from write operations.

The integration of Event Sourcing ensures complete traceability of mortgage files, guaranteeing regulatory compliance and historical data resilience.

The strategic use of differentiated technologies for reading and writing offers high performance and scalability indispensable for the modern Fintech sector.


Conclusions

Adopting the CQRS pattern in a mortgage CRM is not a decision to be taken lightly, given the increase in infrastructural complexity. However, for financial institutions aiming to scale beyond the limitations of monolithic relational databases and requiring unassailable audit trails via Event Sourcing, it represents the state of the art in software engineering.

The clear separation between who writes data and who reads it allows optimizing each side of the application with the most suitable technologies (PostgreSQL for security, NoSQL for speed), ensuring a system ready for the future of digital banking.

Frequently Asked Questions

How does CQRS differ from a traditional monolithic architecture?

CQRS clearly separates the write model from the read model, unlike monolithic systems that use a single database for everything. This allows managing high volumes of rate and application lookups without blocking critical data entry operations, improving banking CRM performance.

Why is Event Sourcing useful for mortgage management?

Instead of saving only the final state of a file, Event Sourcing records every single event in chronological sequence. This provides complete and immutable tracking of all operations, which is often required for regulatory compliance and for reconstructing the exact history of every mortgage.

Which databases are recommended for the write side and the read side?

A hybrid approach leveraging the best of each technology is recommended. For the write side, a robust relational database like PostgreSQL is ideal to ensure data integrity, while for the read side, NoSQL solutions like MongoDB or DynamoDB are preferable to provide low-latency responses to API queries.

How is the lag between the write model and the read model handled?

The lag, known as Eventual Consistency, is mitigated by optimistically updating the user interface and using robust message brokers like Apache Kafka. The broker keeps the read and write models synchronized, delivering events in order so that the read views converge on the correct state.

What scalability benefits does this architecture offer?

This architecture allows scaling resources dedicated to reading and writing independently based on real load. Furthermore, it facilitates the creation of custom views for different users, such as back-office operators and end customers, without complex queries slowing down the main transactional system.

