In Brief (TL;DR)

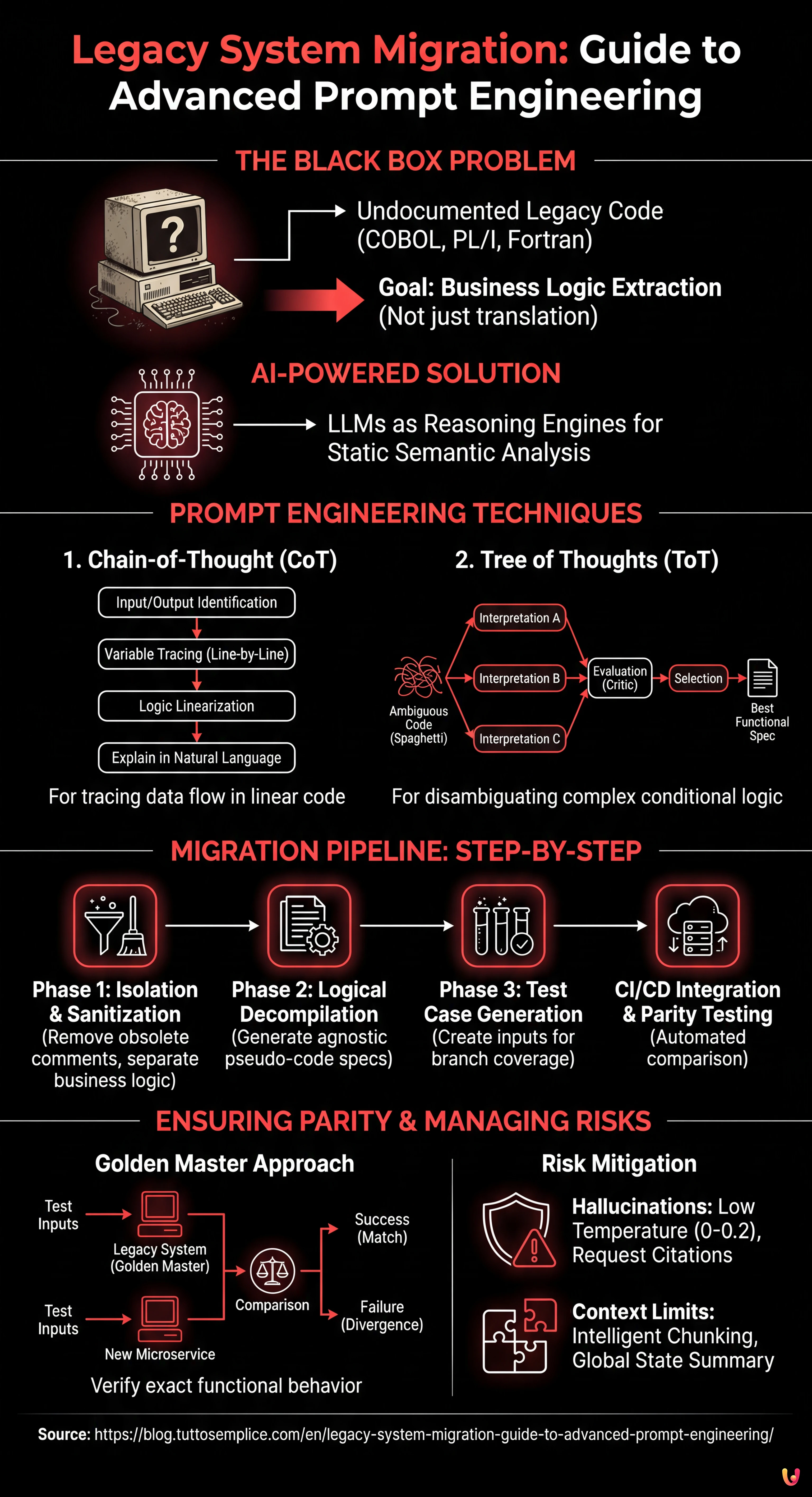

Legacy system migration in the banking sector requires advanced strategies to decode complex, often undocumented layers of logic.

Advanced Prompt Engineering with LLMs can turn reverse engineering into precise business rule extraction.

Methodologies like Chain-of-Thought support functional parity by reconstructing the semantics of critical algorithms instead of simply translating their syntax.

The devil is in the details. 👇 Keep reading to discover the critical steps and practical tips to avoid mistakes.

It is 2026, and IT infrastructure modernization is no longer optional but a survival imperative, especially in the banking and insurance sectors. **Legacy system migration** to the cloud is one of the most complex challenges for CIOs and Software Architects. It is not simply about moving code from a mainframe to a Kubernetes container; the real challenge lies in understanding decades of layered, often undocumented logic.

In this technical deep dive, we will explore how the advanced use of Large Language Models (LLMs) and Prompt Engineering can transform the reverse engineering process. We will not talk about simple code generation (like ‘translate this COBOL to Python’), but about a methodical approach to **business logic extraction** (Business Rules Extraction) and ensuring functional parity through automated testing.

The Black Box Problem in Banking Systems

Many mission-critical systems operate on codebases written in COBOL, PL/I, or Fortran in the 80s or 90s. The main problem in **legacy system migration** is not syntax, but semantics. Often, documentation is missing or misaligned with production code. The original developers have retired, and the code itself has become the single source of truth.

The traditional approach involves manual analysis, which is expensive and prone to human error. The modern approach, powered by AI, uses LLMs as reasoning engines to perform **static semantic analysis**. The goal is to decompile the algorithm, not just translate it.

Prerequisites and Tools

To follow this guide, you need:

- Access to LLMs with large context windows (e.g., GPT-4o, Claude 3.5 Sonnet, or open-weight models such as Llama 4 that handle code well).

- Read access to the legacy codebase (COBOL/JCL snippets).

- An orchestration environment (Python/LangChain) to automate prompt pipelines.

Prompt Engineering Techniques for Code Analysis

To extract complex business rules, such as calculating a French amortization schedule with specific currency exceptions, a zero-shot prompt is not enough. We must guide the model through structured cognitive processes.
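To make the target concrete, here is a minimal sketch of the kind of rule the prompts below should recover: a French (constant-installment) amortization schedule, where the installment is A = P * i / (1 - (1 + i)^-n) for principal P, periodic rate i, and n periods. The function name, monthly compounding, half-up rounding to the cent, and the "last installment absorbs the rounding residue" policy are all assumptions for illustration; real legacy code may round per currency or per line, which is exactly the kind of exception the extraction must surface.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

def french_schedule(principal: Decimal, annual_rate: Decimal, months: int) -> list[tuple]:
    """Hypothetical reference rule: rows of (period, payment, interest, principal_paid, balance)."""
    i = annual_rate / 12  # periodic (monthly) rate
    if i == 0:
        installment = (principal / months).quantize(CENT, ROUND_HALF_UP)
    else:
        installment = (principal * i / (1 - (1 + i) ** -months)).quantize(CENT, ROUND_HALF_UP)
    rows, balance = [], principal
    for period in range(1, months + 1):
        interest = (balance * i).quantize(CENT, ROUND_HALF_UP)
        if period == months:
            principal_paid = balance  # last payment absorbs the rounding residue
        else:
            principal_paid = installment - interest
        balance -= principal_paid
        rows.append((period, principal_paid + interest, interest, principal_paid, balance))
    return rows
```

Using `Decimal` rather than `float` mirrors the fixed-point arithmetic of COBOL `COMP-3` fields; a float-based rewrite is a classic source of parity failures.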

1. Chain-of-Thought (CoT) for Logic Linearization

The **Chain-of-Thought** technique pushes the model to make intermediate reasoning steps explicit. In **legacy system migration**, this is crucial for tracing data flow through obscure global variables.

Example of CoT Prompt:

SYSTEM: You are a Senior Mainframe Analyst specializing in COBOL and banking logic.
USER: Analyze the following COBOL paragraph 'CALC-RATA'. Do not translate it yet. Use a Chain-of-Thought approach to:
1. Identify all input and output variables.
2. Trace how the variable 'WS-INT-RATE' is modified line by line.
3. Explain the underlying mathematical logic in natural language.
4. Highlight any 'magic numbers' or hardcoded constants.
CODE: [Insert COBOL Snippet]
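In an orchestration script, the same prompt is easier to version and reuse as a template. A minimal sketch, assuming a chat-style API that accepts a list of `{"role": ..., "content": ...}` messages (a format several providers use); `build_cot_messages` is a hypothetical helper, not part of any library.

```python
COT_SYSTEM = "You are a Senior Mainframe Analyst specializing in COBOL and banking logic."

# Each reasoning step is kept separate so prompt versions can be diffed in source control.
COT_STEPS = [
    "Identify all input and output variables.",
    "Trace how the variable '{tracked_var}' is modified line by line.",
    "Explain the underlying mathematical logic in natural language.",
    "Highlight any 'magic numbers' or hardcoded constants.",
]

def build_cot_messages(paragraph: str, tracked_var: str, cobol_code: str) -> list[dict]:
    """Assemble system + user messages for a Chain-of-Thought analysis request."""
    steps = "\n".join(
        f"{n}. {step.format(tracked_var=tracked_var)}"
        for n, step in enumerate(COT_STEPS, start=1)
    )
    user = (
        f"Analyze the following COBOL paragraph '{paragraph}'. Do not translate it yet.\n"
        f"Use a Chain-of-Thought approach to:\n{steps}\n\n"
        f"CODE:\n{cobol_code}"
    )
    return [
        {"role": "system", "content": COT_SYSTEM},
        {"role": "user", "content": user},
    ]
```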

2. Tree of Thoughts (ToT) for Disambiguation

Legacy code is often full of GO TO statements and nested conditional logic (spaghetti code). Here, the **Tree of Thoughts** technique is better suited: it lets the model explore several possible interpretations of an ambiguous code block, evaluate them, and discard the illogical ones.

Applied ToT Strategy:

- Generation: Ask the model to propose 3 different functional interpretations of a complex `PERFORM VARYING` block.

- Evaluation: Ask the model to act as a “Critic” and evaluate which of the 3 interpretations is most consistent with the standard banking context (e.g., Basel III rules).

- Selection: Keep the winning interpretation as the basis for the functional specification.
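The generate, evaluate, and select steps above can be orchestrated in a few lines. This is a deliberately simplified, one-level version of Tree of Thoughts (no backtracking or deeper search). `llm` is a hypothetical callable that takes a prompt string and returns text, so plug in whichever client you use; the scoring convention (the critic answers with a number from 1 to 10) is also an assumption.

```python
import re
from typing import Callable

def tot_interpret(code: str, llm: Callable[[str], str], k: int = 3) -> tuple[str, list[tuple[float, str]]]:
    """One-level Tree of Thoughts: generate k interpretations, score each, keep the best."""
    # Generation: request k independent functional interpretations.
    candidates = [
        llm(f"Interpretation #{n}: describe the functional behavior of this "
            f"PERFORM VARYING block in one paragraph.\n{code}")
        for n in range(1, k + 1)
    ]
    # Evaluation: the model acts as a critic and scores each candidate.
    scored = []
    for cand in candidates:
        verdict = llm(
            "You are a critic. On a scale of 1 to 10, how consistent is this "
            "interpretation with the code and with the standard banking context? "
            f"Answer with a number first.\nCODE:\n{code}\nINTERPRETATION:\n{cand}"
        )
        match = re.search(r"\d+(?:\.\d+)?", verdict)
        scored.append((float(match.group()) if match else 0.0, cand))
    # Selection: keep the highest-scoring interpretation as the spec baseline.
    _, best = max(scored, key=lambda pair: pair[0])
    return best, scored
```

In practice the generation calls usually need some sampling diversity (a non-zero temperature) to produce genuinely different interpretations, while the critic calls can run at low temperature.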

Extraction Pipeline: Step-by-Step

Here is how to structure an operational pipeline to support **legacy system migration**:

Phase 1: Isolation and Sanitization

Before sending code to the LLM, remove obsolete comments that could cause hallucinations (e.g., “TODO: fix this in 1998”). Isolate calculation routines (Business Logic) from I/O or database management ones.
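For fixed-format COBOL, part of this sanitization can be done deterministically before any LLM call. A minimal sketch, assuming classic reference format: columns 1-6 hold sequence numbers, column 7 is the indicator area (`*` or `/` mark a comment line), columns 8-72 hold the code, and columns 73-80 are an identification area. Free-format sources, `D` debug lines, and `-` continuation lines are not handled, and `sanitize_fixed_cobol` is a hypothetical helper.

```python
def sanitize_fixed_cobol(source: str) -> str:
    """Drop comment lines and the sequence/identification areas of fixed-format COBOL."""
    kept = []
    for raw in source.splitlines():
        line = raw.ljust(7)          # guarantee the indicator column exists
        indicator = line[6]          # column 7
        if indicator in "*/":        # full-line comment (or page-eject comment)
            continue
        code = line[7:72].rstrip()   # keep only Area A + Area B (columns 8-72)
        if code:                     # skip lines that are now empty
            kept.append(code)
    return "\n".join(kept)
```

This drops every full-line comment; if some comments carry valuable domain knowledge, filter them selectively instead of wholesale.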

Phase 2: Logical Decompilation (The “Architect” Prompt)

Use a structured prompt to generate language-agnostic pseudo-code. The goal is a human-readable specification rather than executable code.

PROMPT: Analyze the provided code. Extract EXCLUSIVELY the business rules. Output required in Markdown format:
- Rule Name
- Preconditions
- Mathematical Formula (in LaTeX format)
- Postconditions
- Handled exceptions
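Because the output format is fixed, the pipeline can reject responses that skip a section before a human ever reviews them. A minimal sketch, assuming the model answers with one `Field: value` header line per section (optionally as a Markdown bullet or in bold), with any following lines treated as a continuation of that field; `parse_rule` and `REQUIRED_FIELDS` are hypothetical names.

```python
import re

REQUIRED_FIELDS = [
    "Rule Name", "Preconditions", "Mathematical Formula",
    "Postconditions", "Handled exceptions",
]

_ALTS = "|".join(re.escape(f) for f in REQUIRED_FIELDS)
# Matches e.g. "- Rule Name: ...", "**Preconditions**: ...", "- Mathematical Formula (LaTeX): ..."
FIELD_RE = re.compile(
    r"^\s*(?:[-*]\s+)?(?:\*\*)?(?P<field>" + _ALTS + r")"
    r"(?:\s*\([^)]*\))?(?:\*\*)?\s*:(?:\*\*)?\s*(?P<value>.*?)\s*$",
    re.IGNORECASE,
)

def parse_rule(response: str) -> dict[str, str]:
    """Extract the required fields; raise ValueError listing any that are missing or empty."""
    canonical = {f.lower(): f for f in REQUIRED_FIELDS}
    found, current = {}, None
    for line in response.splitlines():
        m = FIELD_RE.match(line)
        if m:
            current = canonical[m.group("field").lower()]
            found[current] = m.group("value")
        elif current and line.strip():
            # Continuation line: append to the field currently being read.
            found[current] = (found[current] + "\n" + line.strip()).strip()
    missing = [f for f in REQUIRED_FIELDS if not found.get(f)]
    if missing:
        raise ValueError(f"LLM response missing fields: {missing}")
    return found
```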

Phase 3: Test Case Generation (The “Golden Master”)

This is the critical step for safety. We use the LLM to generate test inputs that cover all conditional branches (Branch Coverage) identified in the previous phase.
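Before trusting generated inputs, it is worth checking mechanically that they really reach every branch identified in Phase 2. A minimal sketch, assuming the extracted rule's branches have been transcribed as named Python predicates; the `BRANCHES` table and its conditions are hypothetical examples, not rules from any real system.

```python
from decimal import Decimal

# Hypothetical branch conditions transcribed from a Phase 2 specification.
BRANCHES = {
    "zero_rate": lambda c: c["rate"] == 0,
    "negative_rate": lambda c: c["rate"] < 0,
    "positive_rate": lambda c: c["rate"] > 0,
    "single_installment": lambda c: c["months"] == 1,
    "multi_installment": lambda c: c["months"] > 1,
}

def uncovered_branches(cases: list[dict]) -> list[str]:
    """Return the names of branches that no test case exercises."""
    hit = {name for case in cases for name, pred in BRANCHES.items() if pred(case)}
    return [name for name in BRANCHES if name not in hit]

sample_cases = [
    {"rate": Decimal("0.06"), "months": 12},
    {"rate": Decimal("0"), "months": 1},
]
print(uncovered_branches(sample_cases))  # ['negative_rate']
```

A non-empty result can be fed back to the LLM as a request for targeted inputs. Note that this measures coverage of the specification's branches; coverage of the COBOL code itself requires instrumented tooling on the legacy side.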

CI/CD Integration and Parity Testing

A successful **legacy system migration** does not end with rewriting the code, but with proving that the new system (e.g., in Java or Go) behaves exactly like the old one.

Parity Test Automation

We can integrate LLMs into the CI/CD pipeline (e.g., Jenkins or GitLab CI) to create dynamic unit tests:

- Input Generation: The LLM analyzes the extracted logic and generates a JSON file with 100 test cases (including edge cases, such as negative rates or leap years).

- Legacy Execution: Run these inputs against the legacy system (or an emulator) and record the outputs. This becomes our “Golden Master”.

- New System Execution: Run the same inputs against the new microservice.

- Comparison: If the outputs diverge, the pipeline fails.
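The comparison step can be a small script whose result decides whether the CI job fails. A minimal sketch, assuming the Golden Master is stored as a JSON list of `{"input": {...}, "expected": ...}` records captured from the legacy run, and that the new microservice is wrapped in a Python callable; the record layout, `run_parity`, and the `new_interest` stub are all hypothetical. Amounts are compared as `Decimal` values parsed from strings, so binary floating-point noise cannot hide or fake a difference (and `"50.0"` equals `"50.00"`, which may or may not be the tolerance you want).

```python
import json
from decimal import Decimal
from typing import Callable

def run_parity(golden_master: str, new_system: Callable[[dict], str]) -> list[dict]:
    """Replay every recorded input against the new system and collect divergences."""
    failures = []
    for case in json.loads(golden_master):
        actual = new_system(case["input"])
        if Decimal(actual) != Decimal(case["expected"]):
            failures.append({"input": case["input"],
                             "expected": case["expected"], "actual": actual})
    return failures

# Hypothetical stand-in for the new microservice: monthly interest on a balance.
def new_interest(inp: dict) -> str:
    monthly = Decimal(inp["balance"]) * Decimal(inp["rate"]) / 12
    return str(monthly.quantize(Decimal("0.01")))

golden = json.dumps([
    {"input": {"balance": "10000", "rate": "0.06"}, "expected": "50.00"},
    {"input": {"balance": "0", "rate": "0.06"}, "expected": "0.00"},
])
failures = run_parity(golden, new_interest)
print(failures)  # -> []
# In CI, a wrapper script would end with: sys.exit(1 if failures else 0)
```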

AI can also be used in the debugging phase: if the test fails, you can provide the LLM with the legacy code, the new code, and the output diff, asking: “Why do these two algorithms produce different results for input X?”.
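Assembling that debugging request can be automated with the standard library's `difflib`. A minimal sketch, assuming both systems' outputs are captured as lists of text lines; `build_debug_prompt` is a hypothetical helper, and the question mirrors the one above.

```python
import difflib

def build_debug_prompt(legacy_code: str, new_code: str,
                       legacy_out: list[str], new_out: list[str], case_id: str) -> str:
    """Combine both implementations and a unified diff of their outputs into one prompt."""
    diff = "\n".join(difflib.unified_diff(
        legacy_out, new_out, fromfile="legacy", tofile="new", lineterm=""))
    return (
        f"Why do these two algorithms produce different results for input {case_id}?\n\n"
        f"LEGACY (COBOL):\n{legacy_code}\n\nNEW:\n{new_code}\n\nOUTPUT DIFF:\n{diff}"
    )
```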

Troubleshooting and Risks

Managing Hallucinations

LLMs can invent logic if the code is too cryptic. To mitigate this risk:

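- Set `temperature` to 0 or to a very low value (0.1 or 0.2) to make the output as repeatable as possible. Low temperature reduces randomness; it does not by itself stop the model from inventing logic, which is why the next point matters.

- Always request references to the original lines of code in the explanation (citations).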
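Citations are only useful if they are checked. A minimal sketch, assuming the prompt asks the model to cite as `L<number>: <quoted code>` (a convention invented here, not a standard), that flags any citation whose line does not exist or whose quote does not appear on that line of the sanitized source; `verify_citations` is a hypothetical helper.

```python
import re

CITATION_RE = re.compile(r"\bL(\d+):\s*(.+)")

def verify_citations(source: str, explanation: str) -> list[str]:
    """Return human-readable problems for citations that do not match the source."""
    lines = source.splitlines()
    problems = []
    for m in CITATION_RE.finditer(explanation):
        number, quote = int(m.group(1)), m.group(2).strip()
        if not 1 <= number <= len(lines):
            problems.append(f"L{number}: line does not exist")
        elif quote not in lines[number - 1]:
            problems.append(f"L{number}: quote not found on that line")
    return problems
```

An empty list does not prove the explanation is correct, but a non-empty one is a reliable signal that the model is citing code that is not there.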

Context Window Limits

Do not attempt to analyze entire monolithic programs in a single prompt. Use intelligent chunking, dividing the code by paragraphs or logical sections, and maintain a global context summary (Global State Summary) that is passed to every subsequent prompt.
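The chunk-and-summarize loop can be sketched as follows. Assumptions: the code has already been sanitized so that paragraph names start at the left margin (Area A) and end with a period while statements stay indented, which simplifies real COBOL (section headers and `COPY` members are ignored); `llm` is a hypothetical prompt-to-text callable; and the reply convention (analysis, then a line starting with `SUMMARY:` holding the updated state) is invented for this example.

```python
import re
from typing import Callable

PARAGRAPH_RE = re.compile(r"^([A-Z0-9][A-Z0-9-]*)\.\s*$")

def split_paragraphs(code: str) -> list[tuple[str, str]]:
    """Split PROCEDURE DIVISION code into (paragraph_name, body) chunks."""
    chunks, name, body = [], None, []
    for line in code.splitlines():
        m = PARAGRAPH_RE.match(line)
        if m:
            if name:
                chunks.append((name, "\n".join(body)))
            name, body = m.group(1), []
        elif name:
            body.append(line)
    if name:
        chunks.append((name, "\n".join(body)))
    return chunks

def analyze_in_chunks(code: str, llm: Callable[[str], str]) -> tuple[dict[str, str], str]:
    """Analyze each paragraph separately, carrying a Global State Summary forward."""
    summary, analyses = "No paragraphs analyzed yet.", {}
    for name, body in split_paragraphs(code):
        reply = llm(
            f"GLOBAL STATE SUMMARY:\n{summary}\n\n"
            f"Analyze paragraph {name}:\n{body}\n\n"
            "End your answer with a line 'SUMMARY:' followed by the updated summary."
        )
        analysis, _, new_summary = reply.partition("SUMMARY:")
        analyses[name] = analysis.strip()
        summary = new_summary.strip() or summary  # keep the old summary if none returned
    return analyses, summary
```

Keeping the summary short (a list of variables and their known meanings, for instance) is what lets it travel with every prompt without eating the context window.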

Conclusions

The use of advanced Prompt Engineering turns **legacy system migration** from “digital archaeology” into a controlled engineering process. Techniques like Chain-of-Thought and Tree of Thoughts allow us to extract the intellectual value trapped in obsolete code and, combined with parity testing, help ensure that the business logic supporting the financial institution survives the move to the cloud intact. We are not just rewriting code; we are saving corporate knowledge.

Frequently Asked Questions

The use of advanced Prompt Engineering techniques, such as Chain-of-Thought and Tree of Thoughts, transforms migration from a simple syntactic translation to a semantic engineering process. Instead of merely converting obsolete code like COBOL into modern languages, LLMs act as reasoning engines to extract layered and often undocumented business logic. This approach allows decompiling algorithms, identifying critical business rules, and generating clear functional specifications, drastically reducing human errors and preserving the intellectual value of the original software.

The Chain-of-Thought (CoT) technique guides the model to make intermediate reasoning steps explicit, which is essential for linearizing logic and tracing data flow through global variables in relatively linear code. Tree of Thoughts (ToT), by contrast, is better suited to ambiguous code or code full of nested conditional instructions, typical of spaghetti code. ToT lets the model explore several functional interpretations, evaluate them like an expert critic, and select the one most consistent with the banking context or current regulations, discarding illogical hypotheses.

Functional parity is achieved through a rigorous automated testing pipeline, often called the Golden Master approach. LLMs generate a wide range of test cases, including edge scenarios, from the extracted logic. These inputs are executed on both the original legacy system and the new microservice, and the results are compared automatically: if the outputs diverge, the continuous integration pipeline flags the error. This method verifies that the new system, written in a modern language like Java or Go, replicates the mathematical and logical behavior of its predecessor for the tested inputs.

The main risk is hallucination, i.e., the model’s tendency to invent nonexistent logic when the code is too cryptic. Another limit is the size of the context window, which prevents analyzing entire monolithic programs at once. To mitigate these problems, set the model temperature close to zero to reduce randomness and always request citations of the original lines of code. In addition, adopt an intelligent chunking strategy: divide the code into logical sections and maintain a summary of the global state to preserve context during analysis.

In mission-critical systems developed decades ago, documentation is often missing, incomplete, or, worse, misaligned with the code actually in production. With the retirement of the original developers, the source code has become the only reliable source of truth. Relying on paper documentation or comments in the code, which might refer to changes from many years ago, can lead to serious interpretation errors. Semantic static analysis via AI allows ignoring these obsolete artifacts and focusing exclusively on the current operational logic.

