In Brief (TL;DR)

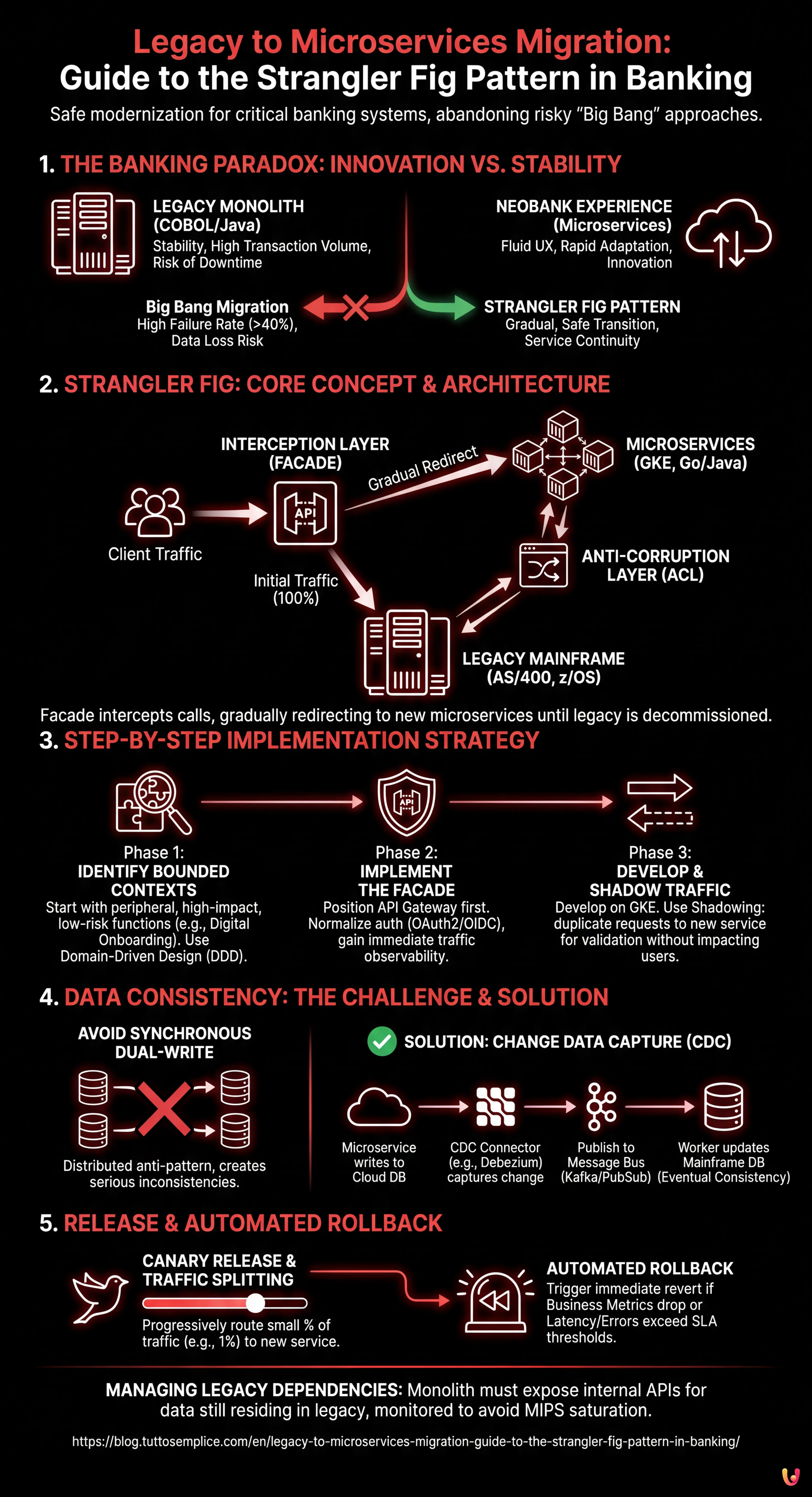

The Strangler Fig pattern offers banks a safe strategy to modernize legacy monoliths while avoiding the operational risks of a Big Bang rewrite.

Integrating a Facade layer orchestrates the gradual transition to cloud-native microservices while keeping existing critical systems operational.

Advanced techniques like Change Data Capture ensure data consistency between mainframe and cloud, overcoming the pitfalls of dual-write.

In the 2026 fintech landscape, speed of adaptation is the most valuable currency. However, for established financial institutions, innovation is often held back by decades of technical layering on mainframes. **Legacy to microservices migration** is no longer just an architectural choice, but a survival imperative. Abandoning the risky “Big Bang” approach in favor of the **Strangler Fig** pattern represents the safest strategy to modernize critical systems without disrupting banking operations.

This technical guide is aimed at CTOs and Lead Architects who must orchestrate the transition from COBOL/Java monoliths towards cloud-native architectures on Kubernetes (GKE), managing the complexity of data consistency and service continuity.

1. The Banking Paradox: Innovating Without Breaking

The banking sector faces a paradox: it must offer user experiences as fluid as those of neobanks while maintaining the stability of core banking systems that process millions of transactions per day. “Big Bang” migrations (a complete rewrite followed by an immediate switch) have historically been associated with high failure rates (figures above 40% are often quoted), with unacceptable risks of downtime and data loss.

The **Strangler Fig** pattern, named and described by Martin Fowler, offers an alternative: wrap the old system with a new structure, intercept calls, and gradually redirect them to new microservices until the old system can be safely shut down.

2. Reference Architecture: The Interception Layer (The Facade)

The heart of the Strangler Fig strategy is the **Facade** (or interception layer). In a modern banking context on Google Cloud Platform (GCP), this role is typically performed by an advanced API Gateway or a Service Mesh.

Key Architecture Components

- Legacy Core System (IBM z/OS mainframe or IBM i, formerly AS/400): The current System of Record (SoR). The rest of this guide refers to it simply as the Mainframe.

- Strangler Facade (API Gateway/Ingress): The single entry point for all client traffic. It decides whether to route the request to the old monolith or the new microservice.

- Microservices (GKE): The new services developed in Go or Java (Quarkus/Spring Boot), containerized and orchestrated on Google Kubernetes Engine.

- Anti-Corruption Layer (ACL): A translation layer that prevents the old system’s data model from polluting the design of the new microservices.
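To make the Anti-Corruption Layer more concrete, here is a minimal sketch of the translation step. The fixed-width record layout, the field names (`ACCT-NO`, `BAL-CENTS`, and so on) and the status codes are all hypothetical, invented for illustration; a real copybook will look different.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum


class AccountStatus(Enum):
    ACTIVE = "active"
    CLOSED = "closed"


@dataclass(frozen=True)
class Account:
    """Domain model used by the new microservice."""
    account_id: str
    holder_name: str
    balance: Decimal
    status: AccountStatus


# Hypothetical legacy layout: (field name, start offset, end offset).
LEGACY_LAYOUT = [
    ("ACCT-NO", 0, 10),
    ("HOLDER", 10, 40),
    ("BAL-CENTS", 40, 52),  # integer amount in cents, zero-padded (assumed)
    ("STAT-CD", 52, 53),    # "A" = active, "C" = closed (assumed codes)
]

_STATUS_MAP = {"A": AccountStatus.ACTIVE, "C": AccountStatus.CLOSED}


def parse_legacy_record(record: str) -> dict:
    """Slice a fixed-width record into raw legacy fields."""
    return {name: record[start:end] for name, start, end in LEGACY_LAYOUT}


def to_domain(record: str) -> Account:
    """Translate a legacy record into the domain model (the ACL boundary)."""
    raw = parse_legacy_record(record)
    status_code = raw["STAT-CD"]
    if status_code not in _STATUS_MAP:
        raise ValueError(f"unknown legacy status code: {status_code!r}")
    return Account(
        account_id=raw["ACCT-NO"].strip(),
        holder_name=raw["HOLDER"].strip().title(),
        balance=Decimal(int(raw["BAL-CENTS"])) / Decimal(100),
        status=_STATUS_MAP[status_code],
    )
```

The point of the design is that legacy field names and encodings stop at `to_domain`; everything downstream only sees `Account`.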

3. Step-by-Step Implementation Strategy

Phase 1: Identifying Bounded Contexts

Do not start by migrating the “Core Ledger”. Choose peripheral functionalities with high customer impact but low systemic risk, such as **Digital Onboarding** or **Balance Viewing**. Use Domain-Driven Design (DDD) to isolate these contexts.

Phase 2: Implementing the Facade

Before writing a single line of code for the new microservice, position the API Gateway in front of the monolith. Initially, the Gateway will act as a simple pass-through proxy (100% of traffic to legacy). This allows you to:

- Normalize authentication (e.g., moving from proprietary protocols to OAuth2/OIDC).

- Obtain immediate observability on existing traffic.
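The pass-through starting point can be sketched as a routing table that sends everything to legacy until a path prefix is explicitly migrated. This is an illustrative in-memory model, not the configuration of any particular gateway product; `StranglerFacade`, the backend names and the paths are assumptions.

```python
from dataclasses import dataclass, field

LEGACY = "legacy-mainframe"


@dataclass
class StranglerFacade:
    """Resolves a request path to a backend; unmatched paths stay on legacy."""
    migrated: dict[str, str] = field(default_factory=dict)

    def migrate(self, path_prefix: str, backend: str) -> None:
        """Register a path prefix as served by a new backend."""
        self.migrated[path_prefix] = backend

    def resolve(self, path: str) -> str:
        # Longest matching prefix wins; with no match, default to legacy.
        matches = [p for p in self.migrated if path.startswith(p)]
        if not matches:
            return LEGACY
        return self.migrated[max(matches, key=len)]
```

With an empty table the facade is a pure pass-through, which matches the "100% of traffic to legacy" state; each later migration is a single `migrate` call that can be reverted by removing the entry.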

Phase 3: Development and Shadow Traffic

Develop the new microservice on GKE. Instead of activating it immediately, use a **Shadowing** (or Dark Launching) strategy. The Facade duplicates incoming traffic: one copy of the request goes to the Mainframe, whose response is returned to the user; the other goes to the Microservice, which processes it, but whose response is discarded or logged for comparison. For requests that change state, make sure the shadowed call cannot produce real side effects (for example, by writing to an isolated datastore and stubbing outbound calls).

This makes it possible to verify the new service’s business logic against real data without impacting the customer.
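A minimal sketch of the shadowing flow, assuming both backends can be modeled as plain callables that take a request dict. The names `handle_with_shadow` and `mismatches` are hypothetical; in practice this duplication is usually done by the gateway or mesh rather than in application code.

```python
import logging
from concurrent.futures import Executor, ThreadPoolExecutor
from typing import Any, Callable

log = logging.getLogger("shadow")
Handler = Callable[[dict], Any]
shadow_pool = ThreadPoolExecutor(max_workers=4)  # background pool for shadow calls


def _run_shadow(request: dict, legacy_response: Any,
                shadow: Handler, mismatches: list) -> None:
    """Call the new service and record any divergence; never raises."""
    try:
        shadow_response = shadow(request)
    except Exception as exc:  # a shadow failure must not reach the customer
        mismatches.append({"request": request, "error": repr(exc)})
        log.warning("shadow call failed: %r", exc)
        return
    if shadow_response != legacy_response:
        mismatches.append({"request": request,
                           "legacy": legacy_response,
                           "shadow": shadow_response})
        log.warning("shadow mismatch for request %r", request)


def handle_with_shadow(request: dict, legacy: Handler, shadow: Handler,
                       mismatches: list,
                       executor: Executor = shadow_pool) -> Any:
    """Serve from legacy; mirror a copy of the request to the new service."""
    response = legacy(request)  # the only response the user ever sees
    executor.submit(_run_shadow, dict(request), response, shadow, mismatches)
    return response
```

The shadow call runs after the legacy response is computed and off the request thread, so neither its latency nor its failures affect the user.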

4. The Data Consistency Problem: Dual-Write and CDC

The biggest challenge in **legacy to microservices migration** in the banking sector is data management. During the transition, data must reside in both the Db2 database on the Mainframe and the new cloud-native database (e.g., Cloud Spanner or Cloud SQL), and the two copies must be kept synchronized.

Why Avoid Synchronous Dual-Write

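Having the application write to both databases in the same request is a distributed-systems anti-pattern, because the two writes are not covered by a single transaction. If the write on GKE succeeds but the one on the Mainframe fails (or the process crashes in between), the two systems diverge and nothing records that a repair is needed.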
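The failure mode can be reproduced with a toy model. `FlakyStore` is a hypothetical stand-in for either database, with a flag that simulates an outage.

```python
class FlakyStore:
    """In-memory stand-in for a database that can be made to fail."""

    def __init__(self, fail: bool = False) -> None:
        self.rows: dict[str, int] = {}
        self.fail = fail

    def write(self, key: str, value: int) -> None:
        if self.fail:
            raise ConnectionError("store unavailable")
        self.rows[key] = value


def dual_write(cloud: FlakyStore, mainframe: FlakyStore,
               key: str, value: int) -> None:
    """Naive dual-write: two independent writes with no shared transaction."""
    cloud.write(key, value)      # commits on its own
    mainframe.write(key, value)  # if this raises, the first write already happened
```

When `mainframe.write` raises, the cloud store already holds the new value and the Mainframe does not. Catching the exception and "undoing" the first write only moves the problem, since the undo can fail too.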

The Solution: Change Data Capture (CDC)

The recommended approach involves using an asynchronous event pipeline:

- The microservice writes to its own database.

- A CDC connector (e.g., Debezium or native GCP tools like Datastream) captures the change.

- The event is published to a message bus (Kafka or Pub/Sub).

- A worker consumes the event and updates the Mainframe (or vice versa, depending on who is the Data Owner in that phase).

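This provides **Eventual Consistency**, as long as events are delivered at least once and the consuming worker applies them idempotently. For critical operations that span both systems, the Saga pattern (a sequence of local transactions with compensating actions) can be evaluated, but complexity increases significantly. Note that a saga is itself eventually consistent; where consistency must be immediate, keep the operation within a single System of Record until that context is fully migrated.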
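The worker at the end of the pipeline is where duplicate or replayed events have to be absorbed. Below is a minimal in-memory sketch of such a consumer; `ChangeEvent`, its `version` field and `LegacySyncWorker` are hypothetical names, and the per-key version is assumed to be derived from something monotonic such as a log position. No real Kafka, Pub/Sub or Debezium API is used here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangeEvent:
    key: str       # primary key of the changed row
    version: int   # assumed to increase monotonically per key
    payload: dict  # new state of the row


class LegacySyncWorker:
    """Applies change events to a target store; safe under redelivery."""

    def __init__(self) -> None:
        self.target: dict[str, dict] = {}  # stands in for the system being updated
        self.applied_version: dict[str, int] = {}

    def handle(self, event: ChangeEvent) -> bool:
        """Apply the event; return False if it is a duplicate or stale."""
        if event.version <= self.applied_version.get(event.key, -1):
            return False  # already applied, or an older event arriving late
        self.target[event.key] = dict(event.payload)
        self.applied_version[event.key] = event.version
        return True
```

Because `handle` ignores anything at or below the last applied version, the message bus can redeliver freely without the target drifting. In a real worker the version check and the write would need to happen atomically in the target system.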

5. Release and Automatic Rollback

Once the microservice is validated in Shadow Mode, proceed to progressive release (Canary Release).

Configuring Traffic Splitting

Configure the API Gateway to route a minimum percentage of traffic (e.g., 1% or only internal users) to the new service on GKE.
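One common way to implement such a split is deterministic hashing, so that a given user always lands on the same side during a rollout. The sketch below assumes routing by a user identifier; the salt, the function names and the backend labels are illustrative, and real gateways typically expose weighted routing as configuration instead.

```python
import hashlib


def canary_bucket(user_id: str, salt: str = "onboarding-v2") -> int:
    """Map a user to a stable bucket in [0, 10000) using a hash."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def route(user_id: str, canary_percent: float,
          internal_users: frozenset[str] = frozenset()) -> str:
    """Return which backend should serve this user."""
    if user_id in internal_users:
        return "microservice"  # internal users always see the new service
    # 10000 buckets give a resolution of 0.01 percentage points.
    if canary_bucket(user_id) < canary_percent * 100:
        return "microservice"
    return "legacy"
```

Raising `canary_percent` only ever adds users to the new service; nobody who was already on it is moved back, which keeps sessions stable as the rollout widens.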

Automated Rollback Strategy

In a banking environment, manual rollback is too slow. Implement advanced health checks:

- Business Metrics: If the conversion rate (e.g., account openings) drops drastically on the new service, the rollback must trigger automatically.

- Latency and Errors: If latency exceeds the threshold (SLA) or 5xx errors increase, the Gateway must immediately revert 100% of traffic to the Mainframe.
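The two checks above can be combined into a single rollback decision. This is a sketch under assumed thresholds: the numbers in `Thresholds` are placeholders rather than recommendations, and the metric names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanaryMetrics:
    p99_latency_ms: float
    error_rate_5xx: float   # fraction of requests, 0.0 to 1.0
    conversion_rate: float  # e.g. completed / started account openings


@dataclass(frozen=True)
class Thresholds:
    max_p99_latency_ms: float = 800.0
    max_error_rate_5xx: float = 0.01
    max_conversion_drop: float = 0.20  # relative drop vs. the legacy baseline


def rollback_reasons(canary: CanaryMetrics, baseline: CanaryMetrics,
                     limits: Thresholds = Thresholds()) -> list[str]:
    """Return the list of violated checks; an empty list means healthy."""
    reasons = []
    if canary.p99_latency_ms > limits.max_p99_latency_ms:
        reasons.append("latency")
    if canary.error_rate_5xx > limits.max_error_rate_5xx:
        reasons.append("errors")
    if baseline.conversion_rate > 0:
        drop = 1 - canary.conversion_rate / baseline.conversion_rate
        if drop > limits.max_conversion_drop:
            reasons.append("conversion")
    return reasons


def next_canary_percent(current_percent: float, canary: CanaryMetrics,
                        baseline: CanaryMetrics,
                        limits: Thresholds = Thresholds()) -> float:
    """Drop to 0% (all traffic back to legacy) on any violation."""
    if rollback_reasons(canary, baseline, limits):
        return 0.0
    return current_percent
```

Comparing the conversion rate against the legacy baseline measured over the same window, rather than against a fixed number, avoids false rollbacks caused by normal daily variation.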

6. Managing Legacy Dependencies

Often the new microservice will need data that still resides in the monolith and has not been migrated. In this case, the monolith must expose internal APIs (or be queried via JDBC/ODBC through an abstraction layer) to serve this data to the microservice. It is crucial to monitor the additional load these calls generate on the Mainframe to avoid saturating available MIPS.
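One way to limit and measure that extra load is to put a small read-through cache in front of the legacy call. The sketch below assumes the data tolerates a short staleness window (reference data, for instance, rather than live balances); `CachedLegacyReader` and its `legacy_calls` counter are hypothetical names.

```python
import time
from typing import Callable


class CachedLegacyReader:
    """Read-through TTL cache that also counts calls reaching the legacy system."""

    def __init__(self, fetch: Callable[[str], dict], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch      # the actual call into the monolith
        self._ttl = ttl_seconds
        self._clock = clock      # injectable for testing
        self._cache: dict[str, tuple[float, dict]] = {}
        self.legacy_calls = 0    # export this as a metric to watch added load

    def get(self, key: str) -> dict:
        now = self._clock()
        hit = self._cache.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh enough: no call to the legacy system
        self.legacy_calls += 1
        value = self._fetch(key)
        self._cache[key] = (now, value)
        return value
```

The counter gives a direct view of how many requests actually reach the Mainframe, which is the number that matters for MIPS consumption.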

Conclusions

The **legacy to microservices migration** via the Strangler Fig pattern is not a “one-off” project, but a continuous refactoring process. For banks, this approach transforms an existential risk (technological obsolescence) into a competitive advantage, allowing the release of new onboarding or instant payment features while the backend is progressively modernized. The key to success lies not only in technology (Kubernetes, Kafka), but in the operational discipline of managing the hybrid period with end-to-end observability and automated safety mechanisms.

Frequently Asked Questions

What is the Strangler Fig pattern?

The Strangler Fig pattern is an architectural modernization strategy that gradually replaces a monolithic legacy system. Instead of an immediate complete rewrite, the old system is wrapped with a new structure, intercepting calls via a Facade and progressively redirecting them to new microservices. This approach drastically reduces operational risks typical of the banking sector, ensuring service continuity while the old system is decommissioned piece by piece.

How is data consistency managed during the migration?

Data consistency management is critical and must not rely on synchronous dual-write, which can cause misalignments. The recommended solution involves using Change Data Capture (CDC) combined with an asynchronous event pipeline. Specific tools capture changes in the source database and publish them to a message bus, allowing the secondary system to be updated in near real-time and providing Eventual Consistency without blocking transactions.

Why is gradual migration preferable to the Big Bang approach?

The Big Bang method, which involves the instant replacement of the entire system, historically carries high failure rates and unacceptable risks of service interruption or data loss. Conversely, gradual migration allows value to be released incrementally, starting with low-risk functionalities. This method allows testing new architectures on Kubernetes with limited real traffic, facilitating automatic recovery in case of anomalies.

What is Shadow Traffic and why is it useful?

Shadow Traffic, or Dark Launching, is a fundamental technique for validating the business logic of new services without impacting the end customer. The API Gateway duplicates the incoming request, sending it to both the legacy system and the new microservice. While the legacy response is sent to the user, the microservice response is discarded or analyzed only for comparison. This makes it possible to verify the correctness and performance of the new code on real production data before the actual release.

What role does the API Gateway play?

The API Gateway, or Facade, acts as a single entry point and plays the crucial role of routing traffic between the old monolith and the new microservices. Positioning it in front of the existing system before writing new code makes it possible to normalize authentication and obtain immediate visibility into traffic. It is the component that makes the gradual redirection of requests and the management of release strategies like Canary Release possible.
