In Brief (TL;DR)

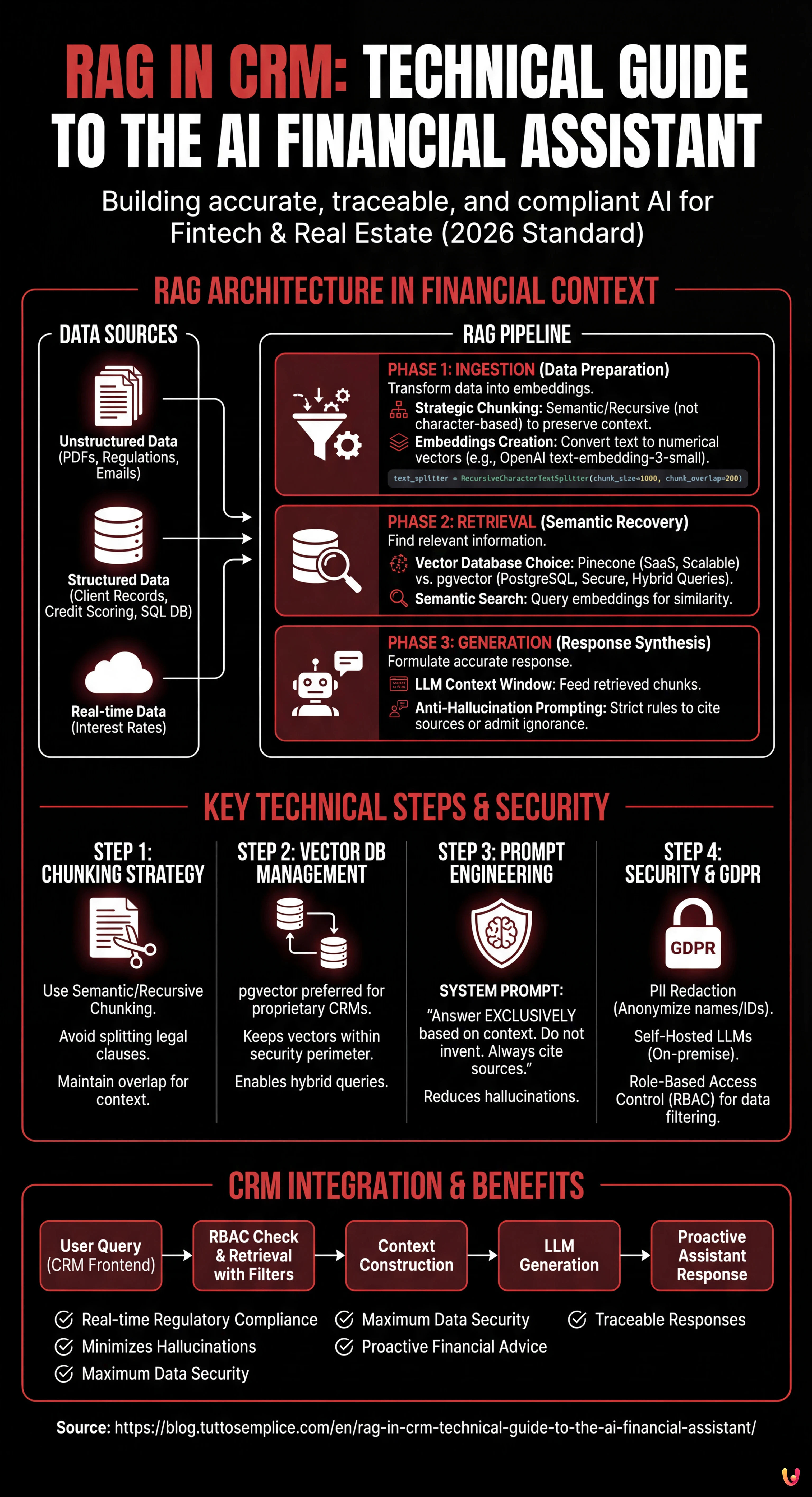

The RAG architecture empowers financial CRMs by consulting regulations and client data in real time to generate accurate responses with far fewer hallucinations.

The technical pipeline integrates semantic ingestion, vector databases, and prompt engineering to transform complex documents into secure and fully traceable financial advice.

The strategic use of pgvector and advanced chunking makes it possible to build virtual assistants capable of pre-qualifying leads and managing mortgages while ensuring compliance.

It is 2026, and the integration of Artificial Intelligence into business systems is no longer a novelty, but an operational standard. However, in the fintech and real estate sectors, the challenge is not just generating text, but generating accurate, traceable, and compliant responses. This is where a RAG (Retrieval-Augmented Generation) architecture inside the CRM comes into play. Unlike a generic LLM that relies solely on its (often outdated) training data, a RAG system allows your proprietary CRM (such as BOMA or custom solutions) to consult regulatory documentation, current interest rates, and client history in real time before formulating a response.

In this technical deep dive, we will explore how to build an intelligent financial assistant capable of pre-qualifying leads and providing mortgage advice, minimizing hallucinations and ensuring maximum data security.

The RAG Architecture in the Financial Context

Implementing RAG in a CRM requires a robust pipeline composed of three main phases: Ingestion (data preparation), Retrieval (semantic search), and Generation (response synthesis). In the context of a financial CRM, data is not just free text, but a combination of:

- Unstructured Data: PDFs of banking regulations, credit policies, email transcripts.

- Structured Data: Client records, credit scoring, LTV (Loan-to-Value) ratios stored in the SQL database.

The goal is to transform this data into numerical vectors (embeddings) that the retrieval layer can search by meaning, so that the most relevant fragments can be handed to the LLM as context.
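
To make "numerical vectors" concrete, here is a minimal sketch of how two embeddings are compared. The three-dimensional vectors and their values are invented for illustration (real embedding models return hundreds or thousands of dimensions), and `cosine_similarity` is a helper defined here, not a library call.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings (hypothetical values, for illustration only)
mortgage_policy = [0.9, 0.1, 0.0]
mortgage_question = [0.8, 0.2, 0.1]
holiday_email = [0.0, 0.2, 0.9]

# The question scores much higher against the policy than against the email
print(cosine_similarity(mortgage_question, mortgage_policy))
print(cosine_similarity(mortgage_question, holiday_email))
```

A score close to 1 means the two texts point in nearly the same direction in the embedding space, which is the signal a vector search uses to rank fragments.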

Step 1: Data Ingestion and Strategic Chunking

The first step is transforming documentation (e.g., “Mortgage Guide 2026.pdf”) into manageable fragments. We cannot pass an entire 500-page manual into the LLM’s context window. We must divide the text into chunks.

For financial documents, naive fixed-size character chunking is risky because it can split a legal clause in half. We use a semantic or recursive approach, which prefers to break at paragraph and sentence boundaries.
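
The risk is easy to demonstrate with a minimal sketch in plain Python. The two-clause policy text is invented for illustration, and both helper functions are simplified stand-ins defined here, not LangChain APIs: fixed-width slicing cuts through a clause, while splitting on paragraph boundaries keeps each clause whole.

```python
def fixed_width_chunks(text, size):
    """Naive character-based chunking: cut every `size` characters."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def paragraph_chunks(text, max_size):
    """Pack whole paragraphs into chunks of at most `max_size` characters.

    A single paragraph longer than `max_size` is kept whole in this sketch.
    """
    chunks, current = [], ""
    for paragraph in text.split("\n\n"):
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_size or not current:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


# Hypothetical policy text with two clauses separated by a blank line
policy = (
    "Clause 1: The loan-to-value ratio must not exceed 80%.\n\n"
    "Clause 2: Applicants under 36 may request the Youth Mortgage."
)

naive = fixed_width_chunks(policy, 40)
aware = paragraph_chunks(policy, 70)

print(naive[0])  # the first chunk stops in the middle of Clause 1
print(aware)     # one complete clause per chunk
```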

Code Example: Ingestion Pipeline with LangChain

Here is how to implement a Python function to process documents and create embeddings using OpenAI (or equivalent open-source models).

```python
import os

from langchain_community.document_loaders import PyPDFLoader
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# API key configuration: read from the environment, never hardcoded in source
if not os.environ.get("OPENAI_API_KEY"):
    raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")


def process_financial_docs(file_path):
    # 1. Document loading (one Document per PDF page)
    loader = PyPDFLoader(file_path)
    docs = loader.load()

    # 2. Strategic chunking
    # chunk_size and chunk_overlap are measured in characters by default, not tokens;
    # the 200-character overlap maintains context between fragments
    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=200,
        separators=["\n\n", "\n", " ", ""],
    )
    splits = text_splitter.split_documents(docs)

    # 3. Creating embeddings
    # We use text-embedding-3-small for a good cost/performance balance
    embeddings = OpenAIEmbeddings(model="text-embedding-3-small")

    return splits, embeddings
```

Step 2: Choice and Management of the Vector Database

Once embeddings are created, where do we store them? The choice of vector database is critical for the performance of RAG in CRM.

- Pinecone: Managed solution (SaaS). Great for scalability and speed, but data resides on third-party clouds. Ideal if company policy allows it.

- pgvector (PostgreSQL): The preferred choice for proprietary CRMs that already use Postgres. It allows executing hybrid queries (e.g., “find semantically similar documents” AND “belonging to client ID=123”).

If we are building an internal financial assistant, pgvector offers the advantage of keeping vector data within the same security perimeter as structured financial data.
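
A hybrid query of this kind might look like the sketch below. The table `document_chunks` and its columns (`client_id`, `content`, `embedding`) are hypothetical names chosen for illustration; `<=>` is pgvector's cosine-distance operator. The function only builds the parameterized SQL and its parameters, so it runs without a database; executing it would require a PostgreSQL driver such as psycopg and a table with a `vector` column.

```python
def build_hybrid_query(query_embedding, client_id, k=3):
    """Build a parameterized pgvector query: SQL filter plus semantic ranking.

    Table and column names are hypothetical.
    """
    # pgvector accepts a vector literal in the form '[0.1,0.2,0.3]'
    vector_literal = "[" + ",".join(str(x) for x in query_embedding) + "]"
    sql = (
        "SELECT content, embedding <=> %s::vector AS distance "
        "FROM document_chunks "
        "WHERE client_id = %s "  # traditional SQL filter
        "ORDER BY distance "     # semantic ranking (smaller distance = closer)
        "LIMIT %s"
    )
    return sql, (vector_literal, client_id, k)


sql, params = build_hybrid_query([0.12, -0.03, 0.98], client_id=123)
print(sql)
print(params)
```

Passing the client ID and the vector as parameters, rather than formatting them into the string, keeps the query safe from SQL injection even when the filter values come from user input.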

Step 3: Retrieval and Anti-Hallucination Prompt Engineering

The heart of the system is the retrieval of relevant information. When an operator asks the CRM: “Can client Rossi access the Youth Mortgage with an ISEE of 35k?”, the system must retrieve the policies related to the “Youth Mortgage” and the ISEE limits.
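
As a minimal sketch of that retrieval step, the example below ranks a tiny in-memory index by similarity to the question and keeps the best matches together with their source document. The index entries, vectors, and document names are invented; in the pipeline described here, this ranking would be done by the vector database rather than in application code.

```python
import heapq

# Tiny in-memory index: (source document, chunk text, unit-length embedding).
# All entries are hypothetical.
INDEX = [
    ("Youth Mortgage Policy", "Eligibility rules and ISEE limits.", [1.0, 0.0]),
    ("Credit Policy", "Income thresholds for applicants.", [0.6, 0.8]),
    ("Travel Expenses", "Reimbursement rules for staff.", [0.0, 1.0]),
]


def dot(a, b):
    """Dot product; equals cosine similarity for unit-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def retrieve(query_embedding, k=2):
    """Return the k index entries most similar to the query embedding."""
    return heapq.nlargest(k, INDEX, key=lambda entry: dot(query_embedding, entry[2]))


def build_context(entries):
    """Prefix each chunk with its source so the model can cite it."""
    return "\n\n".join(f"[{source}] {text}" for source, text, _ in entries)


# Pretend embedding of the operator's question about the Youth Mortgage
question_embedding = [0.96, 0.28]
top = retrieve(question_embedding)
print(build_context(top))
```

Keeping the source name attached to each fragment matters: without it, the model has nothing to cite when it answers.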

However, retrieving data is not enough. We must instruct the LLM not to invent. This is achieved through a rigorous System Prompt.

Advanced Prompt Engineering Example

We use a template that forces the model to cite sources or admit ignorance.

```python
SYSTEM_PROMPT = """
You are a Senior Financial Assistant integrated into the BOMA CRM.
Your task is to answer questions based EXCLUSIVELY on the context provided below.

OPERATIONAL RULES:
1. If the answer is not present in the context, you must reply: "I am sorry, current policies do not cover this specific case."
2. Do not invent interest rates or unwritten rules.
3. Always cite the reference document (e.g., [Mortgage Policy v2.4]).
4. Maintain a professional and formal tone.

CONTEXT:
{context}

USER QUESTION:
{question}
"""
```

Step 4: Security, PII, and GDPR Compliance

Integrating RAG in CRM for finance involves enormous privacy risks. We cannot send sensitive data (PII – Personally Identifiable Information) like Tax Codes, full names, or bank balances directly to OpenAI or Anthropic APIs without precautions, especially under GDPR.

Protection Strategies (Guardrails)

- PII Redaction (Anonymization): Before sending the prompt to the LLM, use libraries like Microsoft Presidio to identify and mask sensitive data. “Mario Rossi” becomes “<PERSON>”.

- Self-Hosted LLM: For maximum security, consider using open-source models like Llama 3 or Mistral, hosted on proprietary servers (on-premise or private VPC). This ensures that no data ever leaves the corporate infrastructure.

- Role-Based Access Control (RBAC): The RAG system must respect CRM permissions. A junior agent should not be able to query vectors related to confidential management documents. This filter must be applied at the query level on the vector database (Metadata Filtering).
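
To illustrate the redaction step from the first bullet, here is a deliberately simplified sketch using only the standard library. It is not Microsoft Presidio's API: a production system would rely on a dedicated PII detection library. The regular expression covers the standard 16-character layout of Italian tax codes, the list of names is assumed to come from the client's CRM record, and the sample values are invented.

```python
import re

# Italian tax code (codice fiscale) layout: 6 letters, 2 digits, 1 letter,
# 2 digits, 1 letter, 3 digits, 1 letter. Simplified: no checksum validation.
TAX_CODE = re.compile(r"\b[A-Z]{6}\d{2}[A-Z]\d{2}[A-Z]\d{3}[A-Z]\b")


def redact(text, known_names):
    """Mask tax codes and known client names before the text leaves the CRM."""
    redacted = TAX_CODE.sub("<TAX_CODE>", text)
    for name in known_names:
        redacted = re.sub(re.escape(name), "<PERSON>", redacted, flags=re.IGNORECASE)
    return redacted


prompt = "Can Mario Rossi (RSSMRA85T10A562S) access the Youth Mortgage?"
print(redact(prompt, known_names=["Mario Rossi"]))
# prints: Can <PERSON> (<TAX_CODE>) access the Youth Mortgage?
```

Masking names taken from the CRM record only catches clients the system already knows about; free-text mentions of third parties are the case where a named-entity recognizer becomes necessary.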

Step 5: Orchestration and CRM Integration

The final piece is integration into the CRM frontend. The assistant should not just be a chat, but a proactive agent. Here is a logical example of how to structure the call:

```python
def get_crm_answer(user_query, user_id):
    # `db`, `vector_store`, and `llm_chain` are assumed to be initialized elsewhere

    # 1. Verify user permissions
    user_permissions = db.get_permissions(user_id)

    # 2. Retrieve relevant documents (Retrieval) with security filters
    docs = vector_store.similarity_search(
        user_query,
        k=3,
        filter={"access_level": {"$in": user_permissions}},
    )

    # 3. Context construction
    context_text = "\n\n".join(d.page_content for d in docs)

    # 4. Response generation (Generation)
    response = llm_chain.invoke({"context": context_text, "question": user_query})

    return response
```

Conclusions: The Future of Financial CRM

Implementing RAG in CRM transforms a static database into a dynamic consultant. For financial institutions, this means reducing onboarding times for new employees (who have instant access to the entire corporate knowledge base) and ensuring that every answer given to the client complies with the latest regulations in force.

The key to success lies not in the most powerful model, but in the quality of the data pipeline and the rigor of security protocols. In 2026, trust is the most valuable asset, and a well-designed RAG architecture is the best tool to preserve it.

Frequently Asked Questions

While a generic LLM relies on static and often outdated training data, a RAG (Retrieval-Augmented Generation) architecture integrated into the CRM allows for real-time consultation of corporate documents, current interest rates, and client history. This approach ensures responses based on updated proprietary data, reducing the risk of obsolete information and improving regulatory compliance in financial consulting.

Personal data protection occurs through various defensive strategies. It is essential to apply PII Redaction techniques to anonymize names and tax codes before they reach the AI model. Furthermore, the use of open-source models hosted on proprietary servers and the implementation of role-based access controls (RBAC) ensure that sensitive information never leaves the corporate infrastructure and is accessible only to authorized personnel.

For legal and financial documents, dividing text purely based on characters is discouraged as it risks breaking important clauses. The optimal strategy involves semantic or recursive chunking, which keeps logical paragraphs together and uses an overlap system between fragments. This method preserves the necessary context so that artificial intelligence can correctly interpret regulations during the retrieval phase.

Using pgvector on PostgreSQL is often preferable for proprietary CRMs because it allows keeping vector data (embeddings) within the same security perimeter as structured client data. Unlike external SaaS solutions, this configuration facilitates the execution of hybrid queries combining semantic search with traditional SQL filters, offering greater control over privacy and reducing network latency.

Advanced Prompt Engineering acts as a security filter by instructing the model to rely exclusively on the provided context. Through a rigorous System Prompt, the assistant is required to cite specific document sources for every statement and explicitly admit ignorance if the answer is not present in corporate policies, thus preventing the generation of non-existent rates or financial rules.
