In Brief (TL;DR)

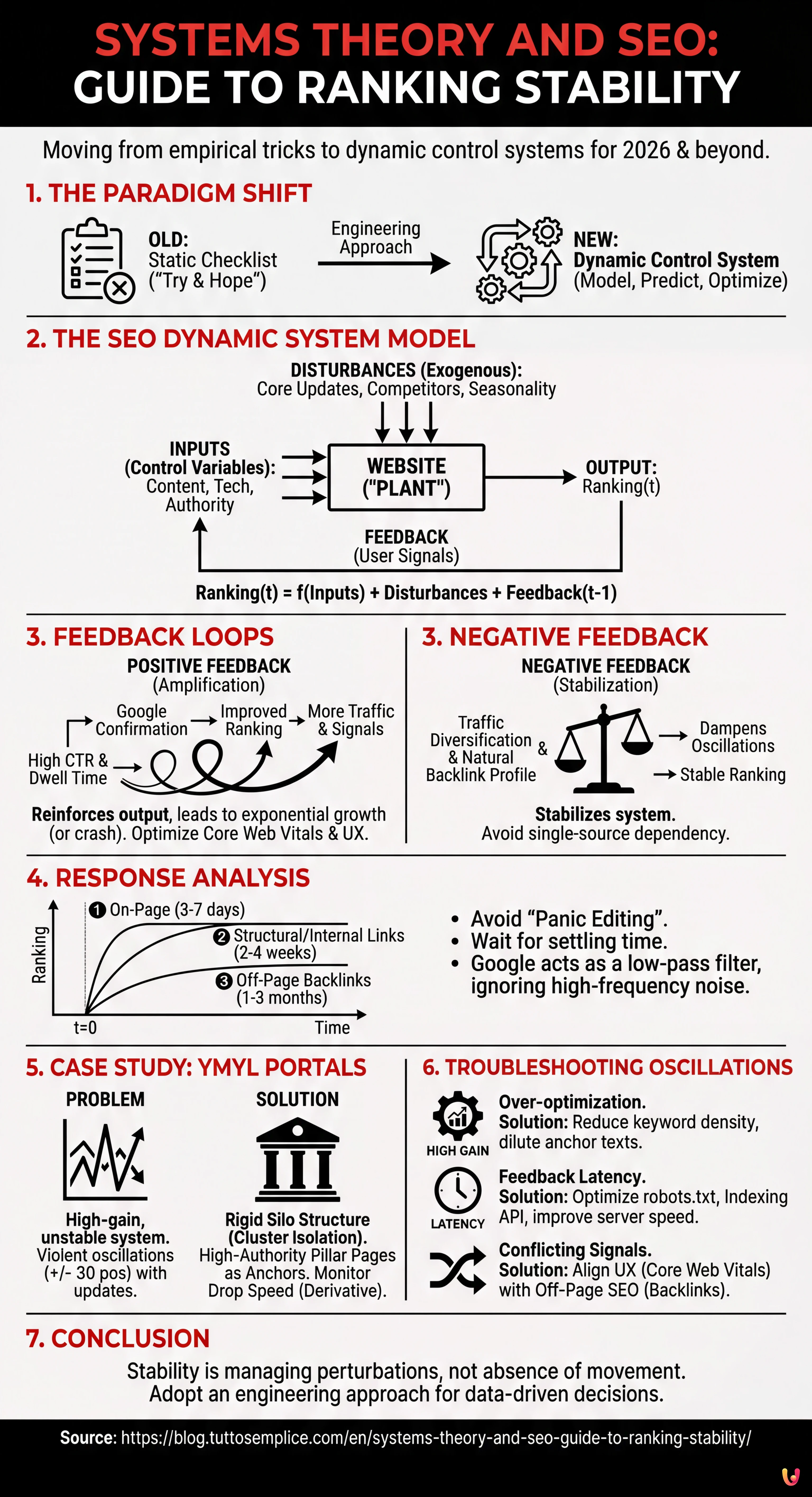

To compete in today’s SERPs, it is necessary to abandon empiricism and adopt an engineering approach based on Systems Theory principles.

Positioning must be managed as a dynamic system where technical inputs and user feedback interact to determine ranking stability.

Mathematical models and respect for settling times make it possible to anticipate oscillations and keep performance under control.


It is 2026 and the empirical approach (“try and hope”) to SEO is no longer sufficient to compete in SERPs dominated by AI Overviews and high-frequency predictive algorithms. For Senior SEO Specialists and software engineers managing complex portals, a paradigm shift is required: treating organic positioning not as a series of tricks, but as a dynamic control system. In this technical guide, we will explore how SEO ranking stability can be modeled, predicted, and optimized using the principles of Systems Theory and Automatic Control.

1. Introduction: SEO as a Dynamic System

Traditionally, SEO is viewed as a static checklist. In Systems Theory, however, a website is a “plant” subject to inputs, disturbances, and feedback. The goal is not just to reach the first position, but to minimize the error between the desired state (Top 1) and the current state, ensuring the system does not enter resonance or instability following an algorithm update.

SEO ranking stability thus becomes a function of the system’s ability to absorb disturbances (Google Core Updates) and respond to inputs (on-page/off-page optimizations) without destructive oscillations.

2. Prerequisites and Analysis Tools

To apply this framework, it is necessary to abandon vanity metrics and equip oneself with tools that allow for the analysis of time series and complex correlations:

- Server-Side Log Analyzer: To monitor crawl frequency (the system’s “sampling rate”).

- Google Search Console (API): To extract raw data on impressions and CTR.

- Data Visualization Tools (e.g., Looker Studio or Tableau): To track response curves over time.

- Basic knowledge of Control Theory: Concepts such as PID (Proportional-Integral-Derivative) and Transfer Function.

3. The Mathematical Model of Ranking

We can model the positioning of a page $P$ at time $t$ as a state function:

Ranking(t) = f(Content(t), Tech(t), Authority(t)) + Disturbance(t) + Feedback(t-1)

Inputs (Control Variables)

These are the levers we can operate directly:

- Content: Semantic quality and relevance (Entity Coverage).

- Tech: Core Web Vitals, code structure, rendering speed.

- Authority: Internal PageRank distribution through internal links (the Authority(t) term in the model).

Disturbances (Exogenous Variables)

In engineering, a disturbance is an unwanted signal that alters the output. In SEO, the main disturbances are:

- Core Updates: Changes to the “controller” rules (Google).

- Competitor Activity: New content published by competitors.

- Seasonality: Variations in search demand.
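As a rough illustration, the state function above can be sketched in Python. The linear form of f, the weights, and the 0-to-1 scale of every variable are assumptions made for this example only; nothing here reflects how Google actually computes rankings.

```python
def ranking_score(content, tech, authority, disturbance=0.0,
                  previous_feedback=0.0, weights=(0.5, 0.25, 0.25)):
    """Toy version of Ranking(t) = f(Content, Tech, Authority) + Disturbance + Feedback(t-1).

    f is assumed to be a weighted sum; the weights are hypothetical.
    """
    w_content, w_tech, w_authority = weights
    controllable = w_content * content + w_tech * tech + w_authority * authority
    return controllable + disturbance + previous_feedback


# Same page before and after a hypothetical negative disturbance (e.g. a Core Update).
baseline = ranking_score(content=0.8, tech=0.6, authority=0.4)
after_update = ranking_score(content=0.8, tech=0.6, authority=0.4, disturbance=-0.2)
print(round(baseline, 3), round(after_update, 3))
```

The point of the sketch is the separation of terms: the three inputs are the only levers under our control, while the disturbance shifts the output regardless of what we do.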

4. Feedback Loop: Positive and Negative Feedback

The most powerful concept applicable to SEO ranking stability is the Feedback Loop. Google uses user signals to confirm or refute the validity of a ranking.

Positive Feedback Loops (Amplification)

Positive feedback occurs when the system output reinforces its own input, so any deviation grows exponentially: upward when user signals are favorable, or downward into a crash when they are unfavorable, since the dynamic is the same with the opposite sign. Example:

- A page ranks well for a query.

- The CTR is higher than the expected average.

- The user spends a lot of time on the page (high Dwell Time) and interacts.

- Google interprets these signals as confirmation of quality.

- The ranking improves further, bringing more traffic and more signals.

Strategy: To trigger this loop, it is fundamental to optimize Core Web Vitals (INP, LCP, CLS) and UX. A slow site interrupts the positive loop at its inception.
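The amplification described above can be sketched with a few lines of Python. This is a deliberately simplified model, assuming that the deviation of user signals from the expected level feeds back on itself with a constant, hypothetical `loop_gain`; real ranking dynamics are not known to follow this law.

```python
def simulate_positive_loop(initial_deviation, loop_gain, steps):
    """Iterate d[t+1] = d[t] + loop_gain * d[t] for a positive loop gain."""
    deviation = initial_deviation
    history = [deviation]
    for _ in range(steps):
        deviation += loop_gain * deviation  # the output reinforces the input
        history.append(deviation)
    return history


# A slightly better-than-expected page versus a slightly worse one.
growth = simulate_positive_loop(initial_deviation=0.01, loop_gain=0.2, steps=10)
crash = simulate_positive_loop(initial_deviation=-0.01, loop_gain=0.2, steps=10)
print(growth[-1], crash[-1])
```

Both runs use the same gain: only the sign of the starting deviation decides whether the loop amplifies growth or decline.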

Negative Feedback Loops (Stabilization)

In engineering, negative feedback is used to stabilize a system. In SEO, the equivalent damping comes from diversifying traffic sources and the backlink profile. If a site depends entirely on a single keyword, every fluctuation of that keyword hits the whole business. A natural link profile acts as a “damper” for oscillations.

5. Response Analysis: Settling Time and Bode Plots

When we apply a change (e.g., revamping information architecture), the ranking does not change instantly. It follows a response curve.

Settling Time

This is the time required for the ranking to reach and remain within a certain tolerance band around the final value. According to observations on large portals in 2025-2026:

- On-Page Changes (Titles, H1): Rapid settling time (3-7 days).

- Structural Changes/Internal Links: Medium time (2-4 weeks).

- Off-Page Backlinks: Slow time (1-3 months).

Knowing these times avoids “panic editing”, i.e., the mistake of modifying a page again before the system has stabilized, introducing noise and instability.
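A minimal sketch of how settling time could be measured on a series of daily positions. It assumes one sample per day, takes the last observation as the final value, and uses a hypothetical tolerance expressed in positions.

```python
def settling_time(positions, tolerance):
    """Return the first index from which every sample stays within
    +/- tolerance of the final value (assumed to be the last sample)."""
    final_value = positions[-1]
    settled_from = 0
    for day, position in enumerate(positions):
        if abs(position - final_value) > tolerance:
            settled_from = day + 1  # still outside the band on this day
    return settled_from


# Hypothetical daily positions after a title change on day 0.
daily_positions = [20, 12, 8, 11, 9, 10, 10, 10]
days_to_settle = settling_time(daily_positions, tolerance=1)
print(days_to_settle)
```

Editing the page again before `days_to_settle` has elapsed means measuring a response that is still in its transient phase.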

Bode Diagrams (SEO Interpretation)

We can imagine Google’s response as a low-pass filter. High-frequency fluctuations (fast link spam, sudden keyword stuffing) are often cut or ignored by the algorithm, while low-frequency but constant signals (regular publishing, natural link growth) pass through and influence long-term ranking.

Practical Application: If you observe rapid ranking oscillations (daily), do not react immediately. It is often system noise or a temporary “Google Dance”. Intervene only if the trend (the low-frequency component) shows a consistent decline.
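To separate the trend from daily noise in your own data, one simple option is an exponential moving average, which behaves as a basic low-pass filter. This is an illustrative choice, not a description of Google's internals, and the smoothing factor `alpha` is an assumption to tune on your own series.

```python
def low_pass(series, alpha):
    """Exponential moving average: smaller alpha means stronger smoothing."""
    smoothed = [float(series[0])]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical daily positions bouncing around position 10.
raw_positions = [10, 12, 8, 12, 8, 12, 8]
trend = low_pass(raw_positions, alpha=0.2)

raw_swing = max(raw_positions) - min(raw_positions)
trend_swing = max(trend) - min(trend)
print(raw_swing, round(trend_swing, 2))
```

Decisions are then taken on `trend`, not on `raw_positions`: a daily bounce of a few positions barely moves the filtered curve, while a sustained decline does.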

6. Case Study: Mortgage Comparison Portals

Financial portals (YMYL – Your Money Your Life) are high-gain systems but inherently unstable, because their rankings are highly sensitive to how Google evaluates E-E-A-T signals.

The Problem

A mortgage portal undergoes violent oscillations (+/- 30 positions) with every Core Update. The system is underdamped.

The Engineering Solution

To increase SEO ranking stability, we implemented a rigid “silo” structure with strict internal linking control:

- Cluster Isolation: We reduced cross-links between unrelated topics (e.g., “Mortgages” vs. “Car Loans”) to avoid the propagation of negative signals (semantic contamination).

- Authority Buffer: We created high-authority “Pillar” pages that distribute link equity to transactional pages (the most volatile ones). Pillar Pages, being rich in informational content, are more stable and act as an anchor for commercial pages.

- Derivative Monitoring: We set alerts not on the absolute traffic drop, but on the speed of the drop. A slow drop is normal; a drop with a high derivative indicates a technical problem or an immediate algorithmic penalty.
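Derivative monitoring can be sketched as a check on the day-over-day rate of change. The 15% threshold and the sample click counts below are hypothetical; they are not the values used on the portal.

```python
def steep_drop_days(daily_clicks, max_daily_drop=0.15):
    """Return the day indexes where clicks fell faster than max_daily_drop
    (relative change versus the previous day)."""
    alerts = []
    for day in range(1, len(daily_clicks)):
        previous, current = daily_clicks[day - 1], daily_clicks[day]
        if previous <= 0:
            continue  # a relative change is undefined without a baseline
        rate = (current - previous) / previous
        if rate < -max_daily_drop:
            alerts.append(day)
    return alerts


slow_decline = [1000, 980, 960, 940]   # about -2% per day
sudden_drop = [1000, 990, 700, 690]    # about -29% on day 2
print(steep_drop_days(slow_decline), steep_drop_days(sudden_drop))
```

The slow decline loses 60 clicks without raising an alert, while the sudden drop is flagged on the day it happens, which is the distinction the alerting is meant to capture.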

7. Troubleshooting: Resolving Oscillations

If your site shows chronic instability, check these three points:

A. Over-optimization (High Gain Instability)

In a control system, if the Gain is too high, the system oscillates. In SEO, this equates to over-optimization (excessive keyword density, identical anchor texts).

Solution: Reduce the “gain”. Dilute anchor texts, make content more natural.
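The effect of gain can be illustrated with a toy proportional loop. It assumes a normalized target of 1.0 and a correction proportional to the current error; the gain values are chosen only to show the two regimes and do not map to any measurable SEO quantity.

```python
def simulate_gain(gain, steps, target=1.0, start=0.0):
    """Iterate x[t+1] = x[t] + gain * (target - x[t]) and return every state."""
    state = start
    history = [state]
    for _ in range(steps):
        state += gain * (target - state)  # correction proportional to the error
        history.append(state)
    return history


def final_error(history, target=1.0):
    return abs(target - history[-1])


moderate = simulate_gain(gain=0.5, steps=10)
excessive = simulate_gain(gain=2.5, steps=10)
print(final_error(moderate), final_error(excessive))
```

In this model the error is multiplied by `1 - gain` at every step, so a gain of 0.5 halves it each time, while a gain of 2.5 flips its sign and grows it by 50% per step: the same correction applied too aggressively turns convergence into a growing oscillation.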

B. Feedback Latency

If Google takes too long to crawl the site (insufficient Crawl Budget), your corrections take effect late. Decisions are then based on stale rankings, which leads to overcorrection and a rebound (“elastic”) effect.

Solution: Optimize the robots.txt file, use the Indexing API where permitted (Google officially supports it only for job-posting and livestream pages), and improve server speed to reduce crawl latency.
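Why latency alone can cause overshoot is easy to see in a toy loop where each correction is based on a state observed several steps earlier. The gain, the delay expressed in steps, and the normalized target are all assumptions for illustration.

```python
def simulate_delayed_loop(gain, delay, steps, target=1.0):
    """Iterate x[t+1] = x[t] + gain * (target - x[t - delay]).

    Before enough history exists, the initial state is used as the observation.
    """
    history = [0.0]
    for t in range(steps):
        observed = history[max(0, t - delay)]  # stale measurement
        history.append(history[-1] + gain * (target - observed))
    return history


fresh_feedback = simulate_delayed_loop(gain=0.6, delay=0, steps=20)
stale_feedback = simulate_delayed_loop(gain=0.6, delay=3, steps=20)
print(max(fresh_feedback), max(stale_feedback))
```

With the same gain, the loop that sees fresh data approaches the target without passing it, while the loop working on measurements three steps old keeps correcting an error that no longer exists and overshoots.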

C. Conflicting User Signals

If you have excellent backlinks (positive signal) but terrible Core Web Vitals (negative signal), the system receives contradictory inputs and oscillates.

Solution: Align UX and Off-Page SEO.

8. Conclusions

Moving from an artisanal to an engineering approach is the only way to guarantee SEO ranking stability in 2026. Understanding settling times, modeling feedback loops, and visualizing the site as a dynamic system allows for data-driven decisions rather than decisions based on fear of updates. Stability is not the absence of movement, but the ability to manage perturbations while maintaining the trajectory toward the goal.

Frequently Asked Questions

Modern SEO should not be treated as a static list, but as a dynamic control system where the website is a plant subject to inputs and external disturbances. To achieve stability, it is necessary to minimize the error between the desired position and the current one, managing user feedback and absorbing algorithm updates without generating destructive oscillations.

The settling time varies based on the type of intervention: on-page changes like titles usually require 3 to 7 days, while backlinks can take 1 to 3 months to stably influence the ranking. It is fundamental to wait for these technical times to avoid “panic editing”, which would only introduce noise and instability into the system.

Feedback loops are mechanisms that Google uses to confirm the validity of a result via user signals. A positive loop is triggered when a high CTR and good user experience reinforce the ranking, while link diversification acts as negative feedback to dampen oscillations and stabilize traffic in the long term.

Violent oscillations often indicate an underdamped or over-optimized system, where the signal gain is too high due to keyword stuffing or repetitive anchor text. Other causes include conflicting signals, such as having excellent backlinks but poor Core Web Vitals, or high latency in crawling that delays the effect of corrections.

For financial or sensitive sites, it is crucial to implement a rigid silo structure that reduces semantic contamination between unrelated topics. The use of high-authority informative Pillar pages acts as a stability anchor for the more volatile commercial pages, while monitoring the speed of traffic drops makes it possible to distinguish normal fluctuations from serious technical problems.
